In comments, a reader asks me to comment on Tamino’s recent posts about surface temperatures. My first response is that I have been skimming them. The reason is that, while I am interested in the quality of the surface temperature records, I have never been interested in actually doing all the tedious work required to create one myself. I leave that to those motivated to do that.

Due to my interest, I have been reading the various comments about problems that might screw the record up, result in uncertainty or bias, and I do comment from time to time. For example, I commented on why rounding to temperature readings from individual stations to ±1 C should not affect our ability to estimate trends.

So today, I’m going to comment on results posted by two different people: Tamino and Roy Spencer. Each addresses slightly different questions. Both present results that they admit are, in some sense, preliminary. Both are interested in determining whether the loss of stations from 1980-1990 biased the determination of station trends.

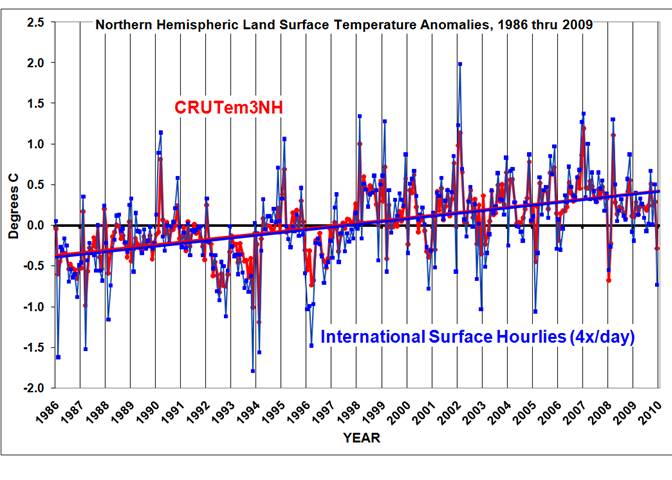

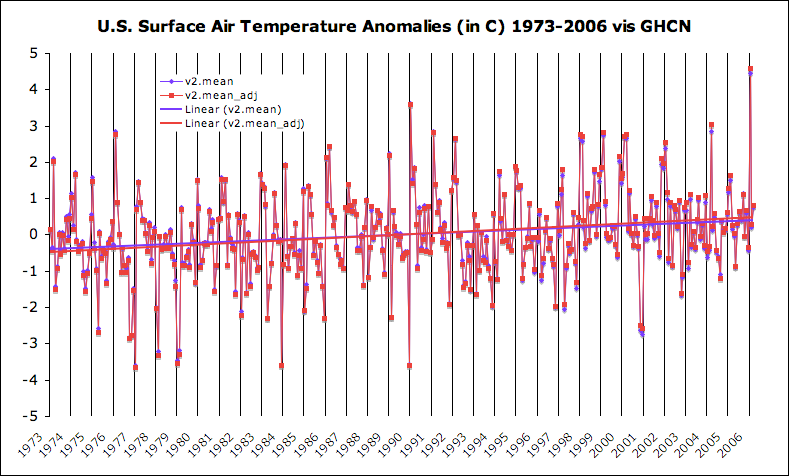

- Roy Spencer replicates CRU since 1986.

If I understand his post correctly, Roy has used the satellite to determine temperatures at the locations sampled by CRU and then averaged to discover whether he replicated CRU. This is a useful test to discover whether the satellite replicates surface readings when the satellite samples in the exact locations where surface thermometers are deployed.Update: 6:51 pm. [If I understand his post correctly, Roy intends to devise a method to use satellite measurements to determine the monthly surface temperatures. In what I suspect is a preparatory step, he is computing a surface temperature series based on thermometers. Owing to the controversy surrounding the reduction in stations from 1980-1990, he compared a series he computed using a constant number of thermometer and compared that to CRU. ]

Doing this, he gets a very good replication of with a correlation coefficient of 0.91 for monthly temperature values.

The agreement is illustrated this figure take from Roy Spencer’s blog:

If I understand correctly think Spencer maintained a constant number of stations throughout his computations. If so, his good agreement tends to indicate the loss of stations in 1980-1990 doesn’t matter. Roy himself reports

But at face value, this plot seems to indicate that the rapid decrease in the number of stations included in the GHCN database in recent years has not caused a spurious warming trend in the Jones dataset — at least not since 1986. Also note that December 2009 was, indeed, a cool month in my analysis.

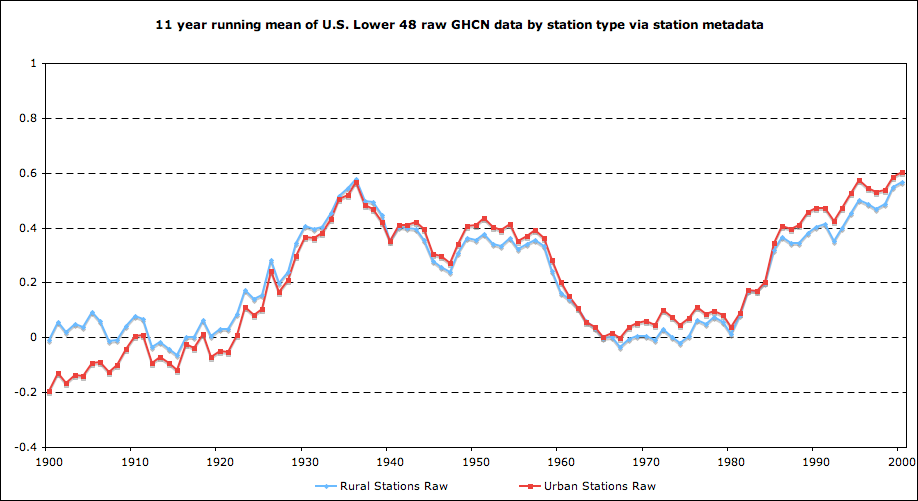

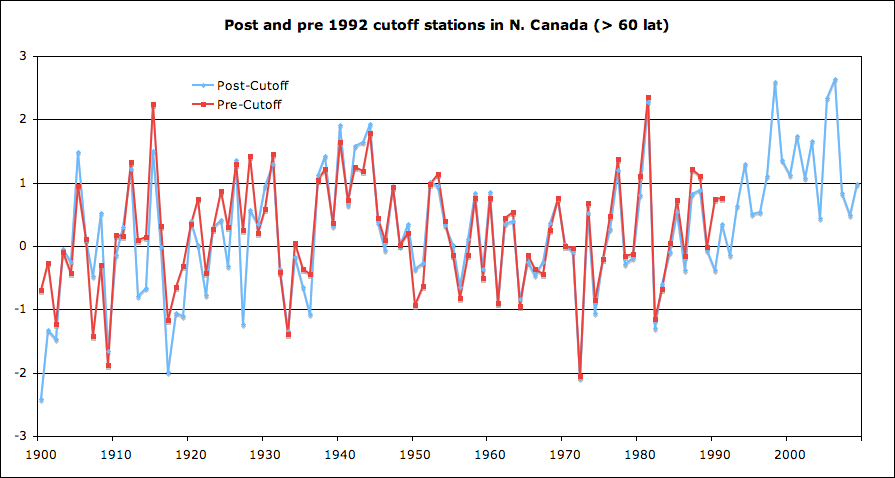

- Tamino appears to have computed pre-1980 trends using the subset of stations retained after 1990 and compared those result to the trends computed using the full complement of stations operating at any time. If removing stations biased the result, you would expect to see these two computed trend diverging.

The two results are compared in this figure taken from Tamino’s blog:

Tamino finds they do not diverge saying:

Of course, I’ll continue processing grids until I cover the entire GHCN, and report the results for the entire globe. But so far, the results flatly contradict the claim that the station dropout has introduced an artificial trend into the global temperature time series. We are not surprised.

I have no particular interest in auditing these results to see whether I would get the same results if I did the exact same thing. What I can note is that we have two completely different people using two approaches, on entirely different points in the “activist-skeptic” spectrum both reporting that loss of thermometers in the 80s and 90s does not appear to have biased the surface temperature record in a way that would bias trends in any significant way.

Am I surprised?

I am not surprised that Tamino discovered dropping out stations did not bias the trends for temperature anomaly. If all other things kept similar (i.e. station quality, measurement system, area averaging, etc.) the first order effect of dropping out samples should be to make an area-averaged temperature anomaly noisy, without introducing any particular bias.

This is not to say that bias cannot possibly be introduced when the number of stations contracts drastically. It was a question worth asking and investigating. However, it is an issue where, betting people should expect to increase noise, not bias.

So even though Tamino reports he is using coarse grids and has not yet analyzed trends for the entire surface of the earth, (as one would expect if the work is being done as an “work at home hobby project on my own personal computer”) the results he is reporting are more-or-less what I would have expect before anyone did all the tedious number crunching. This is not to say that there could never have been any surprise nor that there could not possibly be surprises in the future: Maybe further work will reveal something else.

However, for reasons stated above, I would be surprised if any strong warming or cooling bias had been introduced by station drop out.

I expect that further refinements of grid size, or any other approximation Tamino will continue to find that dropping out the stations did not result in any appreciable bias one way or the other.

I am more surprised that Roy is getting the same results as CRU!

The reason I am a bit surprised is rather subtle: Roy is using an entirely different measurement system. So, in his case, he might have gotten different results because it turned out that the satellite didn’t resolve the temperature at surface stations correctly, or because barbecue grills near the surface thermometers really were affecting the overall trend or for various and sundry reasons.

In addition to suggesting station drop out had little effect on detection of climactic trends, Roy’s close replication of the surface measurements suggests these other issues are also not dramatically affecting the trend since 1986.

Roy reports that he is continuing work and will be reporting more; Tamino says he will also be reporting. Meanwhile Chad seems to be examining station trends as well. We’ll learn more as they each report.

Update: I’m closing comments on this thread. Please continue at:

new thread

The close match with the UAH data is pretty compelling evidence that any UHI effect has not been removed by CRU.

Raven–

Why do you think that? Roy doesn’t think so.

All temperatures measured by the satellite will include any effects from land use change (a.k.a. UHI). If the data that includes UHI effects matches data that claims to not include UHI effects then that must mean that 1) UHI effects don’t exist or 2) The UHI effects were not removed.

BTW: Roy says this:

Of course, an increasing urban heat island effect could still be contaminating both datasets, resulting in a spurious warming trend.

Raven–

Ahh… I see what you mean.

If so, then I imagine when Roy computes surface temperatures without matching CRU stations, we’ll learn a lot about all these UHI arguments. That would be very interesting both from a policy and science perspective.

So…. we’ll see!

Raven (Comment#34949) February 25th, 2010 at 5:49 pm

I would have thought it would lead to the opposite conclusion. The satellites are not ground level readings.

bugs,

Roy is creating ground level measurements from satellite data. It will inlcude UHI.

The analysis was also since 1986, anomolies take care of much of the UHI influences.

Lucia,

Perhaps I’m missing something here or completely misunderstanding, but Roy Spencer says he is creating a surface station data set from the NOAA International Surface Hourly data set –

‘Our new satellite method requires hourly temperature data from surface stations to provide +/- 15 minute time matching between the station and the satellite observations. We are using the NOAA-merged International Surface Hourly (ISH) dataset for this purpose.’

I understand that to mean that this is ground station data that will be used in the future to match against his satellite data, and he is surprised that this matches closely with the CRU dataset when he compares?

Carefully reading Roy’s post, I do not think that this is satellite data at all, but rather the station data which the surface temperature channel would be calibrated with. I note, contrary to Raven’s statement, Roy says:

“Our method will be calibrated once, with no time-dependent changes, using all satellite-surface station data matchups during 2003 through 2007. Using this method, if there is any spurious drift in the surface station temperatures over time (say due to urbanization) this will not cause a drift in the satellite measurements.”

Roy has not yet shown the results of the Aqua analysis compared with the International Surface Hourlies, which of course could still have biases in them as they are stations, not satellites.

So will Watts now apologise for his incompetence and lies about ““SURFACE TEMPERATURE RECORDS: POLICY DRIVEN DECEPTION?—

http://tamino.wordpress.com/2010/02/15/dropouts/

Re: Chuckles (Feb 25 18:27),

Just before the part you quoted, Roy says this:

I have assumed — possibly incorrectly that –the “NOAA-merged International Surface Hourly (ISH) dataset for this purpose.” has something to do with information obtained from “the Aqua satellite AMSU window channels and “dirty-window†channels. These passive microwave estimates of land surface temperature,”

Have I misunderstood?

Andrew_FL & Chuckles.

Yes. I think you are right. He must be doing this in preparation for comparing with his new aqua satellite based method.

He is finding that the change in station numbers doesn’t make much difference. I’ll strike out to reflect this!

[quote bugs (Comment#34976) February 25th, 2010 at 6:33 pm ]

So will Watts now apologise for his incompetence and lies about ““SURFACE TEMPERATURE RECORDS: POLICY DRIVEN DECEPTION?â€â€

[/quote]

.

I believe that Dr. Christy is siding with Watts in the sense that he feels there is concern station siting could be causing bias.

.

If this is the case, it would seem Dr. Christy does not consider Dr. Spencer’s work to be conclusive.

‘Have I misunderstood?’

Lucia,

No idea, but my reading was that he was indeed going to be using that aqua sat data, but that it would require some ground station data to validate/calibrate the sat data, and he was downloading the ISH data in anticipation of that.

The ISH data is apparently from 1901, so if it is a satellite data set, some governments somewhere have REALLY been hiding some stuff from us.

I got the impression that the HADCRU check was simply a sanity check to see that he was getting some sort of real data, and he was surprised at the numbers.

He also made a brief comment –

‘I also recomputed the Jones NH anomalies for the same base period for a more apples-to-apples comparison.’

which might just mean some subsetting rather than subsampling, but who knows?

I just hope as many people read this blog (and other) as watched the prime time TV broadcast where D’Aleo and E.M. Smith said that NOAA “is seriously complicit in data manipulation and fraud†by “creating a strong bias toward warmer temperatures through a system that dramatically trimmed the number and cherry-picked the locations of weather observation stations they use to produce the data set on which temperature record reports are based.â€

Hell, even simply comparing raw station anomalies (without any spatial gridding) would have shown them that they were probably barking up the wrong tree:

http://www.yaleclimatemediaforum.org/pics/0110_Figure-23.jpg

Chuckles–

I now agree with you. It appears “NOAA-merged International Surface Hourly (ISH) dataset for this purpose.” is a ground based thermometer set.

Bugs–

Sorry, but you don’t get to gloat quite a much yet. Since I was mistaken Roy using the Aqua satellite, that means that the agreement doesn’t tells us anything about whether the barbecues, moves to roof-top etc make little difference.

But Roy’s analysis does suggest that the drop in station numbers didn’t result in a bias.

Zeke–

Was the show on cable? I don’t get that. 🙁

Is it on youtube? or Hulu?

Lucia,

Yep, its a cable channel: http://en.wikipedia.org/wiki/KUSI-TV

I blogged a bit about the broadcast at the time: http://www.yaleclimatemediaforum.org/2010/01/kusi-noaa-nasa/

Here is a video of the part in question: http://www.kusi.com/weather/colemanscorner/84174357.html

And the final puzzle was this comment by Dr. Spencer –

‘Note that the linear temperature trends are essentially identical; the correlation between the monthly anomalies is 0.91.

One significant difference is that my temperature anomalies are, on average, magnified by 1.36 compared to Jones. My first suspicion is that Jones has relatively more tropical than high-latitude area in his averages, which would mute the signal. I did not have time to verify this.’

which I will leave for others to interpret.

Isn’t it the case that you can introduce bias by oversampling? If so, perhaps this is the reason so many stations in the U.S. are being dropped. There were far more U.S. stations than anything else before the drop.

.

But regardless of the math, it’d be nice if we were told why they were dropping stations to begin with, rather than trying to guess the reason for it.

magicjava,

Stations aren’t dropped per se. To understand the “dropout” issue in GHCN, you have to look at how GHCN is compiled and where the temperature data comes from. A good portion of the historical temperature data were compiled from around the world during an effort by the WMO during the 80s and 90s to acquire surface temperature records from more remote areas. During that period of time, the WMO worked to identify a network of 1300 or so stations with good spatial coverage that could provide monthly temperature updates (called the Global Surface Network – GSN). Governments of the respective countries are in charge of filing reports (called CLIMAT reports) to the WMO, which supplies the data to the NCDC to incorporate into GHCN after basic quality assurance tests. Since 1990, there has been no major effort to collect data from remote stations outside the GSN network (with the exception of USHCN), though some scientists believe that it is time to do another round of updates. However, numerous studies have found that the GSN faithfully estimates global temperature (with the exception of Antarctica, which has its own network of stations).

So the “drop” in stations around 1990 reflects the date at which the last major effort to collect station data outside the GSN network was undertaken. I would argue that, a decade or two from now, the number of stations available for the 1990s and 2000s will probably exceed the 6,000-station peak reached in the 1970s. I’ve heard through the grapevine about plans to undertake a new effort to collect data from stations outside the GSN to update GHCN with more remote station data.

The key things to remember is the fact that GHCN is a retrospective compilation (e.g. there weren’t 7000 stations reporting to the GHCN in the 1970s) with only a representative subset (GSN) reporting regularly.

Re: magicjava (Feb 25 19:23),

Hypothetically, if you were computing the average surface temperature without doing some sort of reasonable area weighting, and then you added or subtracted thermometers, sure. Or maybe if you suddenly had loads of dropped grids in locations that were warming or cooling more than other places.

But the former really was never much of an issue since CRU, GISS, NOAA etc. did area averaging. The latter is something that could hypothetically occur. That just means you ask the question, then you check. The question was asked. Enough people asked that Roy and Tamino both were motivated to check. Their first cut says the bias isn’t happening. It looks like Zeke did something a bit less elaborate and he finds the same thing.

My guess is budgetary restrictions along with information that suggested the main factors limiting accuracy in whatever they wanted to observe or predict was not improved by increasing the number of thermometers above a certain level. Scientists and engineers optimize number of sample locations, vs. data rates vs. accuracy of individual instruments vs. cost in experiments all the time.

On UHI, see

A Pending American Temperaturegate By Edward R. Long see February 24, 2010

Such pairing of urban vs nearby rural sites may be particularly helpful for establishing ground truth temperatures for Roy’s satellite calibration. The 0.47 C UHI effect is a major portion of the IPCC’s 0.7 C rise for the 20th century.

magicjava (Comment#34981)-I wish more skeptics would make an effort to separate the issues with the surface data out. Spencer has ONLY refuted the argument that station dropout is biasing the trends. This does NOT mean that the data do not have urbanization, siting, and other biases.

John believes that he and his colleagues have shown that the surface data exaggerate the real warming. This is based not on the dropout of stations but extensive analysis of data available in certain regions and comparison with LT satellite data. This is elaborated on in Klotzbach et al and several other papers.

I used GHCN data and CRU gridding and global averaging scripts to do a similar analysis. I saw no reason to believe that dropping high alt, high lat or rural stations caused significant changes in the surface record. And of course, for the last 30 years, we have very similar numbers for both troposphere and surface records.

GHCN: High Alt, High Lat, Rural

http://rhinohide.wordpress.com/2010/02/01/ghcn-high-alt-

high-lat-rural

Welcome to the climate blogging world Ron; I haven’t had the pleasure to visit your site before, but I definitely will in the future.

Nobody should expect dropping stations (even if there is a altitude and latitude bias ) to introduce a bias into the mean.

If GISS does things right ( TEST WITH GISS) the anomaly method should handle this. speculating about this is fun, but the real test should happen with GISS code.

What will change is the noise; the mean??? probably not,

Steven,

I think the mean would be biased over time. If the high northern latitudes are warming faster than anywhere else and the lower atmosphere is warming faster than the surface, then there might be a detectable difference in trend. Might not amount to much though.

Raven:

This comment is based on a misunderstanding on your part about the UHI.

The purpose of the UHI correction in surface temperature measurements is not to remove the influence of urban environments (which is a real effect) but to prevent the introduction of spurious warming, either through shifts in microclimate or through over sampling of the urban environment.

On another point, if you look at the way CRUTEMP grids and averages data, it is easy to show that the drop in stations since 1980 should have no effect on their final answer. One way to do this is to look at mean latitude of the land-based stations using the same weighting they use to compute the global average.

Figure here.

The main effect of shifts in mean latitude is due to the fact that the temperature trend (what I call “Tdot”) increases as you move closer to the North Pole:

If you do an unweighed average (e.g., Smith’s approach), then dropping arctic stations will decrease the temperature trend for the recent years. Isn’t this the opposite of what Smith claims though?

(Note that this figure suggests the CRUTEMP algorithm introduces a positive bias to the the warming trend for the early 20th century.)

Latest post on SPPI regarding UHI effect

http://scienceandpublicpolicy.org/images/stories/papers/originals/Rate_of_Temp_Change_Raw_and_Adjusted_NCDC_Data.pdf“>

Edward R. Long is a physicist who retired from NASA where he led NASA’s Advanced Materials Program,

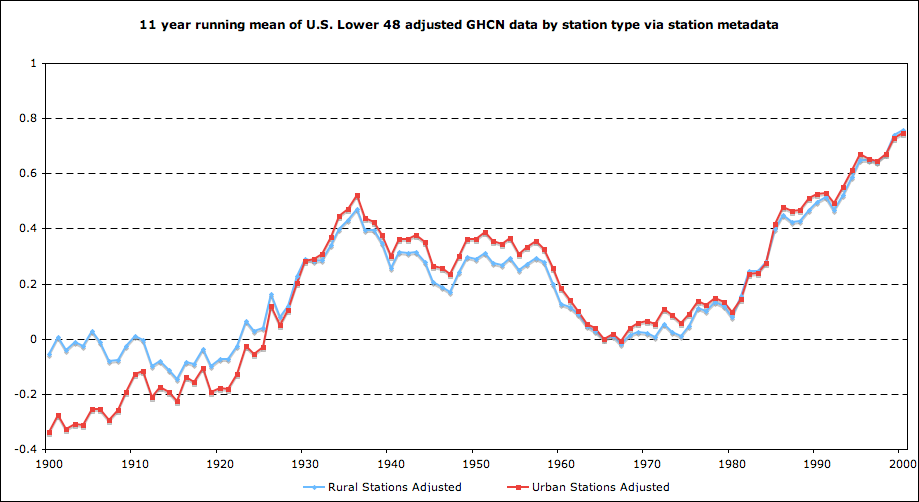

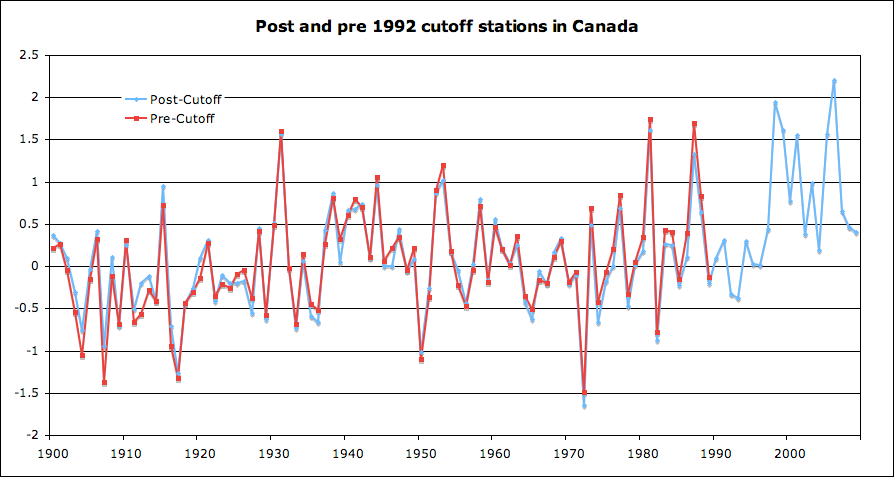

SUMMARY

Both raw and adjusted data from the NCDC has been examined for a selected Contiguous U. S. set of rural and urban stations, 48 each or one per State. The raw data provides 0.13 and 0.79 oC/century temperature increase for the rural and urban environments. The adjusted data provides 0.64 and 0.77 oC/century respectively. The rates for the raw data appear to correspond to the historical change of rural and urban U. S. populations and indicate warming is due to urban warming. Comparison of the adjusted data for the rural set to that of the raw data shows a systematic treatment that causes the rural adjusted set’s temperature rate of increase to be 5-fold more than that of the raw data. The adjusted urban data set’s and raw urban data set’s rates of temperature increase are the same. This suggests the consequence of the NCDC’s protocol for adjusting the data is to cause historical data to take on the time-line characteristics of urban data. The consequence intended or not, is to report a false rate of temperature increase for the Contiguous U. S.

Surely this is like any other sampling problem.

Lets say we are sampling cities and plotting the incidence of reported diseases. We go from a few tens to a few thousands and back to a few hundreds. As we do so, we plot that the incidence of a certain disease has risen over time, very much in the last 50 years, which also coincidentally is the time of the sharpest decrease in the number of cities reporting. Or at least, included in our sample.

It is a perfectly reasonable question to ask, whether the sample has remained equally representative at all times. Whether over representation of some locations may have occurred. Whether the larger cities may have changed in ways that affect infection rates more than smaller ones, and whether the sampling reflects this.

It seems that the onus is on those who think that homogeneity has been preserved during these large movements of the sample to prove it. Those being asked to spend huge amounts of money partly on the hypothesis that it has, may be excused for saying, show me.

It’s a pity that Tamino didn’t compute (or at least didn’t publish) the 20th century trends for both his data sets. Because I would have thought that cooling the 1920s-1930s by 0.25 degrees and warming the late 1980s by the same amount (as shown in his graph) would indeed introduce a warming bias to any “20th century trend” calculation?

Nobody is saying (except some perhaps as a strawman) that dropout explains the entire warming trend. But if there’s a small trend from dropout, a small trend from UHI and a small trend from “adjustments” then together these could be making a significant part of any observed overall trend.

Personally I’ve no idea whether station dropout is an issue, and it’s marvellous that people are investigating it. And it may well be that when you run the numbers these anomalies don’t make for a significant trend. But if his object is to convince people that it doesn’t, then he probably ought to show it and not just state it.

Haha. Temperaturegate now? Is there any word that climate conspiracy theorists will not add “gate” to?

Edward Long needs to read the literature. Yawngate.

Phil A (Comment#35103) February 26th, 2010 at 5:54 am

There’s the rub. There is no end to the issues that can be raised for investigation. Then demand the scientists prove it wrong.

Lucia:

Thank you for taking a look.

As always, I learned something useful from your work.

Is ground temperature (as opposed to air temperature) measured at a depth of, say, 10 feet a good representation of average air temperature? I’ve been thinking of getting a geothermal heating system and according to the literature I’m looking at someone has mapped the “mean earth temperature” of the US. Chicago is around 51 F. Perhaps ground temps would provide a way of checking whether the adjustments being made to station data were valid.

http://www.geo4va.vt.edu/A1/A1.htm – Figure 2.

Re: Chad (Feb 26 01:30),

Chad– yes. Bias can happen in the way you describe. That’s why I said the first order effect is noise. But Bias can happen. That’s why suggesting the possibility of bias is is not stupid, and checking is wise.

HankHenry (Comment#35113) February 26th, 2010 at 7:00 am

Good question~! especially if you think you can take the temperature of the planet; all that should matter.

You can google for data like that. “soil temperature” http://soils.usda.gov/use/thematic/temp_regimes.html

Steve Short:

“…consequence intended or not, is to report a false rate of temperature increase for the Contiguous U. S.”

Yep!

Seriously I don’t believe anybody can take the planet’s temperature let alone give it an “average” temperature by tenths of a degree. I don’t believe it’s possible to be accurate at all. I think it is misleading people and scaring them for no reason.

Re: Phil A (Feb 26 05:54),

Your right. There are a list of things that could cause bias and uncertainties. Dealing with one at a time makes things more tractable. That’s why I discussed the ±1C rounding issue with a ‘toy’ model (and chad did more involved analysis with data from models– rounding it then computing trends.) This is the easiest one because it can’t affect the computation of climate trends– it’s just buried in noise.

Then people can address the station drop out– Roy, Tamino, Zeke etc. did that. That’s a more difficult issue that the ±1C rounding because while it’s unlikely to be a huge effect, it’s not impossible. So, you really, really have to check. It looks like both Tamino and Roy are doing thorough jobs on that, and Tamino appears to be considering incorporating an suggestion by RomanM.

Urban Heat Island, rural/urban adjustments and station quality are the difficult issues. Addressing the first two just gets us to the point about arguing whether or not these have been dealt with properly (and whether it’s even possible to do it right.)

Re: bugs (Feb 26 06:47),

You know what the process of endlessly raising issues that to be investigated is called? Part of the scientific process!

‘You know what the process of endlessly raising issues that to be investigated is called? Part of the scientific process!’

Lucia,

I would suggest, exactly as we were doing at the beginning of this thread with regard to Dr. Spencers’ work?

Chuckles– Precisely.

Roy Spencer also noted

NOW…in retrospect, I’m surprised no one asked the following question:

If the monthly temperature anomalies are, on average, 36% larger than Jones got…why isn’t the warming trend 36% greater, too? Maybe the agreement isn’t as close as it seems at first.

Steve Short,

This result is very interesting; however, I have a few questions for the author:

1) What was the selection criteria to choose 2 stations per state. Can it be randomly varied to get ensembles?

2) Why “publish” this at SPPI? Did he try GRL and was rejected?

liza (Comment#35119) February 26th, 2010 at 7:27 am

Another curious thing. The average temperature of the oceans (considering total depth) is something like 4 C. We hear a lot about the greenhouse effect. What effect is it that cools oceans below average surface temperatures?

Dear Lucia, I know this is the wrong thread but I hate the fireworks on the others. The question I want to pose is, has Judith Curry opened wounds towards a possibility of civilized discourse or, inadvertently, rubbed salt in those wounds. Some of the rhetoric I find quite upsetting and think ‘is this the best we can do?’ Anyway, sorry for intruding in the quiet space of real thought!

Lewis

Lewis–

Actually, I think she is trying to get voices heard by an audience outside the blogosphere. She recently presented to the NAS(?) or someone. I could be mistaken, but I suspect she is hoping to get people who are positioned where they could possibly influence changes to

a) Recognize that strategies based on the assumption that skeptics are a monolithic block of people funded by the some climate-denial machine are not going to work.

b) At least some of the responsibility for loss of trust lies on the side of funded scientists. (This is true even if all they was act in a way that created a perception of misbehavior.)

c) That many of the steps to fix the loss of trust must be taken by scientists and that those steps and finally,

d) maybe get them to consider steps they could actually take.

As I said, I could be wrong. Maybe I’m projecting what I hope she is trying to do!

[First attempt possibly lost in cyberspace, so please delete if this is a repeat.]

I am much more surprised that Spencer’s result matches CRU so well. Why? Because he used an entirely different source of raw data. He used the rawest of the raw, most likely including some different stations, with no quality control, no adjustments, no nothing, and he still got such a close match to CRU? That’s something.

Tamino, on the other hand, started with the same raw data as GISS, and got about the same results as GISS. This isn’t terribly surprising. The GISS processing is very basic, if tedious. There are differences between Tamino and GISS (how to combine stations, grid coarseness, interpolating to a point or averaging within a box, UHI correction, etc), but we already know that the ‘answers’ are not terribly sensitive to those choices.

Mosher: No, you don’t have to use the exact GISS code to comprehensively show the most basic point. In fact, you don’t have to even do what Tamino did. You just have to know how the reference station method works, and then you’ll know that dropping a cold station won’t automatically make the remaining average warmer.

As for undersampling: I was a little surprised that Tamino’s two subsets matched so well. But that could still allow that both subsets were undersampled in the same way. If Congo/Zaire is missing in both sets, then they’ll both have the same undersampling error. Actually, this is a place where doing a more faithful emulation of GISS would be helpful, as the linear interpolation comes into play.

Speaking of faithful emulations of GISS: http://clearclimatecode.org/the-1990s-station-dropout-does-not-have-a-warming-effect/comment-page-1/

There’s something not quite right about all this concern about trust. In a scientific argument “Trust me” isn’t a great point made. The fact there is now a need says alot.

No, Lucia, I think your right. ( NB I think it was strange we got Leo’s Guardians’ right to reply first before Judiths’ article, which I haven’t actually seen published in the said paper) (My apostrophes are terrible!) In other words, she wishes that the, sometimes, insular world of academia might learn to face outwards to a world that really would trust if they felt they where having it straight. What a sentence! I like her for it. Anyway, this should be on another thread, no? But it all makes me nostalgic for a few years back when one could catch a SteveMc blog and follow the sometimes abstruse, opaque, too and throw of ‘r’ and ‘variant’ this and that. O those quiet days!

Re: carrot eater (Feb 26 10:35),

Looks like the guys at clearcode did it! 🙂

This is actually making the case for transparency. Because GISSTemp was released, the people at CC were able to rewrite it and verify their version gives pretty much the same results. Because they now have a version they like to use, with features that let them easily play around, they have quickly run this test. They don’t have to try to pester people at GISS to drop whatever the GISS staff are expected to do by program managers to fulfill the curiosity of bloggers– they just run their code. They post the results– it shows that dropping stations didn’t bias the trends upward.

This is cross-posting a bit, but:

I see it this way: the ccc guys are building basically a perfect emulation. But there’s also a small army of guys building their own versions from scratch. Which clearly shows that you don’t need to copy somebody else’s code; just be willing to do some work. The different people are making different decisions, adding different features; all adding their own touch.

So whenever a sceptic claim comes up, the response time will be shortened to a matter of days, or even hours. My regret is that the sceptics should also write their own code, so that they are more able to identify real problems, instead of wasting everybody’s time with “dropping cold stations makes it hot”.

The main missing link now is an emulation of the GHCN adjustment. I don’t suggest anybody bother right now, because they’re in the process of changing that adjustment. But it will be similar to the homogenisation in USHCN v2.0, which is a bit complicated, but seems well enough described in Menne/Williams 2009, and the code is now public.

Lucia

See another statistical wiz exploring temperatures:

RomanM at Statistics and Other Things

e.g.,

Comparing Single and Monthly Offsets

(apparently Tamino was not amused.)

David Hagen,

Actually, Tamino agreed with him: http://tamino.wordpress.com/2010/02/25/false-claims-proven-false/#comment-39888

However, its an argument about the best way to improve the method used to combine stations. It has little direct bearing on the whole “dropping” issue, since you will generally get the same result as long as you use any spatially weighted anomaly method.

Re: carrot eater (Feb 26 11:29),

On the ‘release the code’ issue, I’m actually not in the big “release any and all code under any and all circumstances” camp. I think some codes should be released sometimes– and at certain points. There have actually been discussions of that, and Chad, Mosher, Carrick and I all have somewhat different points of view.

On “release the code”, I’ve been a supporter of the notion CRU should release there code– for reasons that are too involved to explain in a comment here.

I don’t think the benefit of releasing code is for people to copy code. It has other benefits. Oddly, some are no more than PR benefits. In other cases, it can enhance communication. Of course only people who add their own work to codes can do anything ultimately useful.

See the benefit? 🙂

I think dealing with the UHI and poor station citing issues may take longer– but still not that long. Someone might want to keep a bullet list of the arguments, and how the have been responded to at blogs and in articles, that will help.

The arguments I know of:

* ±1 rounding of t-min/ t-max means precision in anomalies can’t be better than ±1C.

* Losing thermometers makes a difference.

* Urban vs. Rural — Urban Heat Island:

* Poor station sitings (i.e. the BBQ grill issue)

* How is homogenization done and how much uncertainty is involved in this? Does bias creep in when this is done?

Are there others? To my mind, the final three have always been the difficlut ones. They also interlace!

Re: Zeke Hausfather (Feb 26 11:40),

Your comment sounds about right to me. That’s why I previously said I can’t really comment on whether Roman is “right” or “not right”. Sometimes a method is sufficient for discovering an answer even the method is not perfect and/or someone can think of a better method.

Roman’s method may be much better for gettign minimum noise, low bias time series. That would be very useful for testing whether models are consistent with an observed time series (because noise in the observed series makes it more difficult to get “rejections” with similar amounts of data.) But Tamino may not need to get the lowest noise/ lowest bias time series to show dropping thermometers doesn’t matter, and if he’s on a roll, not worrrying about that for now makes complete sense.

Lucia: I strongly feel that somebody could have easily written a very good GISS emulator, well before they released the code. In fact, releasing the code probably slowed things down, as it was in such a messy condition. Writing a new GISS from scratch would have been faster than doing what the good ccc guys are doing, though it would not have been 100.000% perfectly matching. GISS really doesn’t do anything that sophisticated or complicated. I’m a bit annoyed actually that there wasn’t a high profile attempt to do so; it would have proven the point very nicely. But, better late than never.

As for CRU: they’re just a mess, I think. Some of their extra source data is already pre-homogenised by the providing country; some is not; just a tangle, it seems. And a lot of their older homogenisations were manual and subjective. So there simply is no code for that; it was done using human judgment. But I understand they’ve released some amount of code.

Chad, agreed..I guess my point is this . the claims that there would be some big shift due to dropping stations is one that has been kicking around since I first landed on this planet in 2007. As I said the anomaly method should “fix” all the issues ( agreeing with nick stokes and his comments at various places )

Still, the question of how GISSTEMP handles anomalies and creates them is an interesting little investigation that is best done with GISSTEMP code. I would not expect anything earthshattering out of this. I would not expect anything earth shattering out of any investigation of the temp record. Minor adjustments, improved understanding, better methods, better documentation, all of these, but the global warming seen since

1850 isnt going away. If I were a skeptic the best I would hope for is attributing a bit more warming to UHI (maybe .15C versus Jones .05C ) and more accurate error bars. The expectation that people will find some big error will be dashed. So my advice to them is reign in your wild claims. it’s like alarmism in reverse.

Anyways, we really won’t even need to worry about what GISSTEMP says or hadcru says anymore.

JeffId has put up some code that incorporates roman’s method.

there is your work.

Another blog called whiteboard has somebody committed to opening the code.

It would be cool, very cool if the folks from both sides in this debate worked together on a community effort to produce a

suite of tools and approaches. I’d even suggest an “olive branch” to tamino.

One thing missing ( as you know) is a good facility for managing metadata.

Project needs good database guys.

Re: carrot eater (Feb 26 12:21),

I don’t think releasing the code slowed things down. Releasing showed it was a mess, and then people elected whatever path they wished based on knowledge that the code was a mess. As long at it was not released, some people could imagine having the code would permit short cuts. Plus, why do you assume the only reason people want the code is to create a GISS emulator? There have been a range of reasons for wanting code released.

You get to be annoyed. So do other people.

Of course, you could have resolved your own annoyance and proved your own point by writing your own emulator, right? 🙂

I think releasing production codes like GISSTemp and CRU are useful steps. These things should not have been resisted. I think the same for CRU– even if it is a mess.

I do think part of CRU’s problem is that things very likely are a mess at CRU and Jones didn’t want to admit that. I’m a messy person. I understand that… but still…

carrot eater (Comment#35162) February 26th, 2010 at 12:21 pm

“Lucia: I strongly feel that somebody could have easily written a very good GISS emulator, well before they released the code. In fact, releasing the code probably slowed things down, as it was in such a messy condition. Writing a new GISS from scratch would have been faster than doing what the good ccc guys are doing, though it would not have been 100.000% perfectly matching. ”

Yes, carrot we heard this argument a dozen times in 2007. People arguing that we should work their way. When the code was released people could actually look at it and decide which path THEY wanted to go down. The issue with an emulation is the lack of 100% matching. Especially when the problem we wanted to look at ( UHI) is likely at the edge of the noise floor. The other thing is that ANYTIME you show something with an emulation that contradicts the real code, you will get this answer:

The emulation is wrong. the emulation is not peer reviewed.

So from a practical standpoint emulations can’t show anything

wrong with a package they emulation.

What you would have is two pieces of code that didnt produce

similar results. Like so:

GISS shows a warming of 1C

Emulation shows a warming of .98C

Everyone agrees that the emulation is “valid”

GISS shows no effect from dropping stations.

Emulation shows effect from dropping stations.

Conclusion?

With no code, the GISS fans will say the emulation is wrong.

So, you need the code. more importantly we have a right to the code regardless of our identity, intentions, or ability to do anything with it. full stop right to the code.

Magic

Isn’t it the case that you can introduce bias by oversampling? If so, perhaps this is the reason so many stations in the U.S. are being dropped. There were far more U.S. stations than anything else before the drop.

.

But regardless of the math, it’d be nice if we were told why they were dropping stations to begin with, rather than trying to guess the reason for it.

************

back in 2007 I argued with gavin and others that they should drop stations in the us because it was oversampled and they should drop the stations that didnt meet standards.

that suggestion was booed down. go figure.

carrot eater (Comment#35157) February 26th, 2010 at 11:29 am

This is cross-posting a bit, but:

I see it this way: the ccc guys are building basically a perfect emulation. But there’s also a small army of guys building their own versions from scratch. Which clearly shows that you don’t need to copy somebody else’s code; just be willing to do some work. The different people are making different decisions, adding different features; all adding their own touch.

1. You don’t need the code. Provided the paper in question describes the code well you don’t NEED the code.

But: “need” is the operative word.

A. you need the code to verify that the figures provided are actually results of the current version of the code. You and I both know that there are times when you rerun your code and get

different results due to improper version control. basic QA for

any publication I ever did.

B: you need the code to prove that there are no compiler or platform issues.

C: you need the code if you want to rehost.

D: I read hansen’s papers. they did not describe all the steps.

in particular they did not describe the QA steps taken prior

to applying the algorithms. I requested descriptions of these

undocumented methods. Gavin responded “read the paper”

But the paper was entirely vague. When the code was released

the readme indicated that the QA check was not done via

and algorithm but was human judgement. So, the paper didnt describe the step. Gavin said the paper did. it didnt. code got

released and my question got answered.

But more importantly, we have a right to the code.

From the ICO office:

3.2 … The public must be satisfied that publicly-funded universities, as with any other public authority in receipt of public funding, are properly accountable, adopt systems of good governance and can inspire public trust and confidence in their work and operations. The FOIA, by requiring transparency and open access, allows the public to scrutinize the actions and decisions taken by public institutions. Failure to respond or to respond properly to FOIA requests undermines public confidence in public institutions. The fact that the FOIA requests relate to complex scientific data does not detract from this proposition or excuse non-compliance. The public, even if they can not themselves scrutinize the data, want to ensure that there is a meaningful informed debate especially in respect of issues that are of great public importance currently and for generations to come.

3.3 It can also be said that failure to fulfill FOIA obligations undermines the development of public policy. The CRU is a leading climate research centre and its work has been incorporated into the assessment reports of the Intergovernmental Panel on Climate Change (IPCC).

3.4 Where public policy is based on science, the public expect the science to be the best science available and that the scientists imparting that science act impartially. Scientists must adopt high standards of ethics and scientific integrity, and allow their work to be peer reviewed, subject to appropriate safeguards of intellectual property rights.

3.5 This is especially the case in new areas of science such as climate change research, where it is clear the results are directly influencing the development of public policy. (Indeed, FOIA makes special provision for the easier disclosure of statistical data where the section 36 exemption could otherwise apply – see section 36(4)). Access to the original data, computer models and an explanation of the analytical methods used is necessary to ensure that results are reproducible. Any attempts to limit peer review, to omit or distort scientific data or to limit access to data sets, models or methodologies used and thus frustrating any review of the science would lead to legitimate questioning of the conclusions asserted. In the wider context of public sector transparency, there is a risk that attempts to withhold the disclosure of information without good reason will increasingly be characterised in terms of “something to hide.â€

Lucia: These issues weren’t on my radar screen before GISS released their code. Maybe I would have been the guy to implement it for myself. Maybe not. We’ll never know.

As for not releasing code: in most any field of science, people in other lab groups don’t sit around waiting, just in case the one lab group releases some code and allows a shortcut. This just simply doesn’t happen. Each research group is moving forward on their own projects, in their way.

And it’s this perspective that Mosher just simply lacks. The two competing lab groups don’t have to produce anything that matches within 100.00000%. But when their results do diverge, then people take a closer look to see what’s going on. Maybe a third group jumps in the fray. People work until the reason for the divergence is understood. And then you move on. Maybe it gets to the point where comparing code has to happen, but I don’t think that’s very common.

Now it does make sense for GISS to be public, since it’s something of a public utility. But that doesn’t excuse somebody from writing their own emulation. Or, their own implementation, with improvements as they see fit.

Looking around, there is a small army of amateurs doing their own implementations of something like GISS or GHCN; starting with v2.mean and doing something useful with it. All are sceptics, in that they’re wanting to build tools and see things for themselves. But so far as I can tell, none are ‘climate sceptics’, in that they incessantly blog about how evil scientists are, or how wrong they are, or how corrupt they are, or so on. Why is this?

You tell me. I wish it weren’t this way.

Steve Short (Comment#35094) February 26th, 2010 at 3:57 am

That paper is not a serious effort. It’s a nice blog post that just brings us back to the questions that really havent been addressed:

1. How do you select “rural” stations.

2. How do you insure that “rural” stations are really rural

and that urban stations are in “cool parks” or not in cool parks

3. Are adjustments calculated properly with error propogation.

Mosher: If a minor detail isn’t spelled out in the paper, then just make some decision for your own implementation, play with it to see how much it matters, and move on. This is how science works in the real world, where competing researchers don’t FOI each other. When you then write your own paper, you can put in as much detail as you want in the supplementary info, or the appendix to the thesis, or wherever.

GISS, CRU, NCDC and JMA are all doing the same basic thing, yet using their own implementations; these are actually quite different from each other. They don’t have to match 100.0000%.

“If a minor detail isn’t spelled out in the paper”

CE,

You can’t know if it’s a ‘minor’ detail unless you know what the detail is to begin with.

Andrew

carrot eater,

.

That is not how “climate” science works.

.

In climate science the insiders decide who is worthy of consideration or not. If you have the blessing of the insiders then they will pay attention. If you don’t you will be dismissed as an incompetent stooge of the oil companies.

.

The only way to get around this prejudice is start with the actual code and prove that it is really a problem with their code. Emulations always provide plausible deniability for the insiders and their groupies. This is what they have been doing to SteveMc for years.

Carrot:

” Each research group is moving forward on their own projects, in their way.

And it’s this perspective that Mosher just simply lacks. The two competing lab groups don’t have to produce anything that matches within 100.00000%. ”

I don’t lack that perspective. I have a different perspective. I’m well aware that different “labs” have different approaches.

My approach is the engineering approach using validated models.

I’m designing an aircraft. The science behind radar cross section is pretty clear. Estimating radar cross section is not that easy. There are different approaches. Various R&D organizations each have their go at the problem. But the customer, the air force, needs to decide. One model gets validated. everybody gets that model. You want to improve it? submit your changes. everybody gets the changes.

From my perspective I am the customer of climate science. You want to use the results of science to inform a policy, then I am the customer of that science. I got customer requirements. they may differ from yours. they are my requirements. I will buy your science when you adopt practices of transparency. full stop.

I want a red car. you try to sell me a green car and tell me that color doesnt matter. go away. you try to argue that I dont need to be able to pick the color of my car. go away. it doesnt cost anything to share code. the sky doesnt fall. refusing to share it may have cost CRU an important scientist.

And on point. Jones didnt share code with mcintyre because he thought that Mcintyre should do the code himself.

He specifically refused to share the code because:

1. he didnt think he could find it

2. he thought it was an undocumented mess.

3. he knew the code would SHOW Mcintyre why mcintyre

could not replicate the results from the description in the PAPER.

you see #3 is really insideous. Mc could not replicate the results.

he tried. So he asked for code. Jones says NO. and in a mail to Mann, he said ” I wont give him code, and I know why he cant replicate it”

So, you live in this world where you think people will just share the code when I say I cannot replicate the results. you trust them to be as reasonable as you are. Witness also the problems that GAvin had replicationg scarfettis work. they read the paper, wrote their code. didnt match. they requested code. the answer was NO.

Lucia,

This seems on topic. I’ve incorporated Romans method into a global gridded temperature series.

http://noconsensus.wordpress.com/2010/02/26/global-gridded-ghcn-trend-by-seasonal-offset-anomaly-matching/

carrot eater:

I’ve never had anybody turn down requests for information on “minor details.” Have you?

Nor have I ever turned down others when they have requested information.

Even if I can’t always give them the code, I have provided them with binary versions of the programs so they can test that their own implementations are producing the same results as my code.

Some thought I had on scientific confirmation related somewhat to steven mosher (Comment#35171)

–

1) Given the exact data and exact code, an audit can be made on implementation errors.

–

2) Given the exact data but using independent methods, results of the first team can be bolstered/undermined by the second team.

–

3) Using independent data and independent methods, results of the first team can be bolstered/undermined by the second team.

–

Historically, 3) was the method used most often to advance or correct a previously published work. Satellite data -v- surface records is an example of 3).

–

McIntyre has gotten very good at 2) – that is in taking the original proxy data and using it to reconstruct the proxy results produced by other teams. NASA GISTEMP -v- Hadley CRU is another example of 2) where both take GHCN as input but apply their own methods to produce output. E.M. Smith is struggling with 1). Stokes reimplementation is essentially a version of 1). Whereas the work to reconstruct CRUTEMP from descriptions in published papers is an example of 2).

–

In my limited understanding, in some science fields (sociology, I’ve read), providing complete data and exact code is common. In most, it has not. Traditionally, rather than an exact copy of the code, a description of the methods used along with a few key equations have been provided. That way, a second team could re-implement the method independently, if they so chose. Times are changing.

Andrew_KY

No. You read the papers relevant to the field to get a basic understanding of what’s going on; you collect your own data or in this case, take the publicly available v2.mean (or if you’re brave like Spencer, that massive weather data set he used – it must have taken a month to download), and you implement it for yourself. As you go through the steps of building it up, you’ll make various decisions as to what’s the best way to do something. And at the end, you’ll see what you have. Maybe, because you used different methods for whichever, you’ll have a somewhat different ‘answer’. Maybe not. Just as GISS, NCDC, CRU and JMA each get slightly different ‘answers’.

Raven: In what field of science does a first year grad student get emailed copies of all the code and spreadsheets of all competing lab groups, once (s)he decides on a thesis topic? It doesn’t happen. You have to do your own work.

Now, sometimes a software package does become publicly or commercially available, to help you do whatever you’re doing. As I’ve said, I do think it makes sense for GISS to be publicly available. But not having it available shouldn’t have stopped any motivated person from implementing something similar for themselves, or improving on it.

@ carrot eater (Comment#35162) But I understand they’ve [CRU] released some amount of code.

The CRU code that is released is a clean implementation in perl – which to my eyes looks like a recent implementation of some algorithms used in gridding and averaging. I am dubious of any claim that is “the” CRU code which has been used historically to generate the CRUTEMP tables. To my knowledge, no one in CRU (UEA or MET) has made such a claim. And it uses pre-adjusted station data for its input. No code has been released for those adjustments. Although I can note that the adjustments appear to be in-line with those in GHCN v2.mean_adj and the GIStemp end product.

Carrot–

Of course. But so?

This observation is utterly irrelevant to the question about whether or not some codes ought to be released to the public at certain key stages. No one has ever said that the purpose of publicly funded lab group A’s releasing code is so publicly funded lab group b will no longer have to wait before they can do their work. (Although, oddly, if they work on the same program, the program manager might actually require lab group A to release code so they don’t have to pay for essentially the same work to be done twice. )

Excuse? No member of the public needs an excuse to not write a GISSTemp emulation because other than NASA GISS no one has received funding to create that product. Tax payers doesn’t have any obligation to write their own emulation, so they don’t need a “excuse” not to write one.

I find the idea that any member of the public should even wish to write code before they can see certain code totally incomprehensible.

No idea.

That said, I’m not at all surprised since many asking for code never claimed their reason for wanting access was to build an emulator or do something you, ‘carrot eater’, decree useful.

Steve Mosher read the code and found an answer to a question he’d posed to Gavin, but which gavin hadn’t answered adquately. SteveMosher thought that was useful to SteveMosher. Why would SteveMosher them dash off to write an emulator just to do something ‘carrot eater’ considers useful?

Out of curiosity, what are you doing with the code? (I assume you are doing whatever you think is useful– which may be nothing, right? )

“carrot eater (Comment#35176) February 26th, 2010 at 1:08 pm

Mosher: If a minor detail isn’t spelled out in the paper, then just make some decision for your own implementation, play with it to see how much it matters, and move on. This is how science works in the real world, where competing researchers don’t FOI each other. When you then write your own paper, you can put in as much detail as you want in the supplementary info, or the appendix to the thesis, or wherever.”

You don’t get it. I’m not doing science. I am trying to decide whether to BUY THE SCIENCE or not. So when you tell me that

I have to do my own science if I dont buy the science you are selling, it’s kinda funny.

I’ve said this before. Perhaps it bears repeating. If you want

me to accept a result or an argument, i want to see your work.

I may go through that work, I may not. But, I want to see your work. If you don’t want to show me your work, don’t demand

that I buy it. Dont expect me to buy it because of letters behind

your name. dont expect me to buy it because I havented proved the opposite. Don’t expect me to defend my requirements.

Now, if you dont want to make this customer happy, that is

your choice. Lucia doesnt share code. Do I buy her analysis?

sure. Why? because NOTHING happens to me if I buy or dont buy.

Lets say GISS didnt share code. Would I buy their analysis?

No. why? because it matters more to me.

I

carrot eater,

.

Students often are expected to ‘re-invent the wheel’ because that is how they prove that they know the material. We are not talking about school work. We are a talking about real world science with real world implications. The standards are completely different.

.

Read Mosh’s comment – the scientists are trying to sell us their science. It is their job to convince us to buy. Not our job to prove them wrong.

Mosher: Extremely ironic that you bring up Schmidt vs Scafetta in this context. Schmidt and colleagues read Scafetta’s paper, tried every implementation of it they could think of, spent some time doing it, and just couldn’t get it to work. They contact Scafetta for clarification. That doesn’t help. So only then, after a lot of work they put in, did they publicly ask for code.

I must have missed the part where you guys did all that work to try to independently confirm, BEFORE the public call for code.

Tamino got as far as he got in a month. And he’s not even closely following GISS code, as he’s doing things his own way. Who knows if he’s even really looking at it. For something to be ‘robust’, it shouldn’t be highly sensitive to little decisions of how you choose to implement it.

Broberg: I’ve noted upstream, a lot of CRU adjustments were done by hand. So there is no code for that. It was Jones sitting down with a pencil and paper, and looking at the data before and after a station move.

These are the reasons I prefer objective adjustments to subjective methods.

Moshpit:

1. How about calling out Watts for locking up the Surface Stations data?

2. How about calling out McIntyre for taking several months (and requiring reminders and even a threat to go to GRL) to produce the 10ish lines of code behind his changed RE simulation in his Reply to Comment? (This from the same guy who has spent plenty of time writing blog posts advancing his Replies to Comment).

3. How about calling out Wegman for not responding to requests for code and data?

————————

If one is honest, it should cut both ways. I sense you admire honor. But you need to watch McIntyre and Watts and the like. They have a slippery side. Guys like Zorita, Jollife, even Atmoz, that English computer code guy. Those are ones that really will show it whichever way it cuts. (note, none of this excuses truculence from Mann et al…I believe in unilateral open-ness regardless of the side.)

But I honestly caution you to be careful to look deeply at the skeptic sides. Push your own side and see how they do, when pushed on things (for instance Watts and his solar silliness, white screen paint, Id/Ryan and the negative thermometers, etc.)

Re: carrot eater (Feb 26 13:08),

It’s true researchers rarely FOI each other. But some of your other notions are not quite right.

Often, when details are not spelled out in papers, researchers email, phone write each other and ask.

If things were really done the way you claim, people in other labs would notice that the paper didn’t explain details “a, b, and c “, they’d do “my wild guess at a, my guess at b, and my guess at c”. Then, they get a different result. But somehow, they don’t phone….. So, now, unless the two group communicate, how could the second lab know if the different result was due to “a, b, or c”? And maybe the lab is funded to explore the effect of “d”. How do they know if differences in later results were due to “d” or to having dealt with “a, b or c” differently?

What many real, honest to goodness researcher do is ask the person who failed to explain how he did “a, b or c”. The person who wrote the first paper usually answers questions about pesky details if… they remember… and they aren’t being butt-heads.

Researchers do ask for codes– and often share them, though the level of willingness to share can vary. Sometimes, sharing code is more time efficient, so it’s done rather frequently.

In the case of something like CRU and GISSTemp, where a code is run every month, and zillions of people are curious, making a code publicly accessible is a time efficient method of helping people discover answer to their own questions. The fact that you have some odd notion about how real research is done is not excuse for certain production to not be released.

Lucia, Mosh, Raven:

I’m not buying it.

“I find the idea that any member of the public should even wish to write code before they can see certain code totally incomprehensible. ”

How? With a typical person for whom the code would be meaningful in any way, they could also do a fair job of writing their own. GISS is not complicated; it won’t take you five years to write. For the average person on the street, seeing the code makes no difference to them, as they have no idea what they’re looking at anyway.

Look, I agree that GISS should be public. Yes, it should absolutely be public. And now it is. And then what happened? From the sceptic side, pretty much nothing. They don’t seem any more willing to ‘buy’ anything at all. Maybe some little thing, Mosher now understands. Great. But I know I’d be more willing to ‘buy’ something if multiple different groups, working independently, all came up with similar results.

“Out of curiosity, what are you doing with the code?”

Absolutely nothing. I don’t want to bias myself by looking at somebody else’s code. I am working with v2.mean, on and off. Maybe if I have a blog someday and find something interesting, I’ll share. Maybe not.

But I’m also not making baseless claims like “dropping cold stations makes it hot”. If I were making such claims and publishing them, then the onus would very much be on me to do the analysis to back them up.

“I’m not at all surprised since many asking for code never claimed their reason for wanting access was to build an emulator or do something you, ‘carrot eater’, decree useful. ”

Well, whatever reason they wanted it, they now have it, and so far as I can tell, nothing has changed except for the politics of them not being able to ask for it anymore. Seems like they’ve moved on to asking for emails about the code. Lovely. I’m sorry, but I simply don’t see good faith here.

Re: carrot eater (Feb 26 13:33),

A grad student doing a thesis is a very poor analogy for what’s going on here. The public is not a group of people who are doing work as a learning exercise. They are not funded to create new science for the enjoyment and edification of those at CRU, GISS, NOAA etc.

GISS, NOAA, possibly CRU have received tax payer funding to create products for the benefit of the public. It’s idiotic to suggest that if members of the public have questions, they need to redo repeat publicly funded work from scratch, on evenings and weekends while also doing remunerative work, which is taxed to create produts like GISSTemp etc.

Suggesting members of the public need to do so because grad students don’t arrive to find everything they could possibly need to write their thesis is silly.

What are you going to suggest next? That if I want to receive a letter in my mail box, I need to go spend two years as a letter carrier so I can truly understand what’s involved in mail delivery? Or that if I want to send my kid to public, I need to volunteer a year of my life teaching 1st graders to read because, after all, teachers are expected to teach? Obviously, this standard doesn’t make sense.

lucia (Comment#35196)

Depends on how consequential the detail was. Some things you’d just figure out for yourself; some things you might email about. Case by case thing.

Lucia:

I use the grad student analogy because some claim that climate science isn’t really science because of code availability. You see this on WUWT a lot. Let’s just be clear that this is a red herring.

Now, if you want to say that climate science should be treated differently than other sciences for various reasons, then fine. As I’ve said many times, GISS should indeed be public. But let’s not say that it isn’t science until every last spreadsheet is turned in.

I don’t think the academic analogies are relevant, this not about academic practices and little disagreements.

Possibly slightly off topic, if so forgive me, but this is the Institute of Physics take on the matter:

http://www.publications.parliament.uk/pa/cm200910/cmselect/cmsctech/memo/climatedata/uc3902.htm

Carrot

So what if the code is incomprehensible to the average person in the street? I can read french. Most american’s can’t. My local library would not refuse to let me check out a novel written in french merely because French is incomprehensible to the average person on the street.

The fact that the average person on the street does not understand code is no reason to a member of the public who can read code to replicate publicly funded work before they can access code created at tax payer expense.

Sure. Me too. But this isn’t an either or situation. GISS releasing the code does not prevent CRU or anyone else from creating a surface product, and it doesn’t force them to use the same algorithm.

If your gripe is Anthony, then say so.

However, that gripe is nearly irrelevant to the issue releasing codes like CRU or GISSTemp. Loads of people posting at my blog have been supporting releasing code and saying not only not saying “dropping cold stations makes it hot” but saying that dropping cold stations probably makes very little difference. (That said, releasing the code permitted emulation, and faciliated CCC guys running the GISSTemp code and becoming what I think is the 4th group showing that dropping cold stations does not make it hot.)

Is taking away that talking point a bad thing? Believe it or not, several of us here — including me– have said taking away the talking point is one of the reasons code should be released.

In fact, during the Scafetta/Schmidtt kerfuffle, I exchanged some emails with Schmidt. I asked him for his code precisely do I could report whether he gave it to me. I told him I didn’t run R, but he asked Rasmus to send it to me and Rasmus did. I could have given it to anyone I wanted.

Obviously, if Gavin had refused, I would have had to report that Gavin refused, which would have been pretty bad PR while he was asking for Scaffettas.

(BTW: Gavin’s request for code is very analogous to SteveMc. requests. Steve has generally posted output form his similations with comparisons of the difference between his and other peoples results while also asking for code to track down the differences. So, how is that different?)

Also, as for your claim that “nothing has changed”, didn’t having the GISSTemp code help CCC emulate it and run their recent cases? Isnt that a change since before GISSTemp was released?

You may be wishing for some different set of changes… but the fact is that the GISSTemp code release is starting to bear fruit– for the people who didn’t think it needed to be released. Smile at those silver linings man!

http://www.publications.parliament.uk/pa/cm200910/cmselect/cmsctech/memo/climatedata/uc4702.htm

Carrot

Of course. But your characterization made it sound like people don’t ask, share etc.

Ok… but you may need to learn to state what argument you think you are rebutting. From time to time, people arrive here and start arguing with comments they remember from some post at another blog. Obviously, the rest of us have no idea that they have just been presented with a rebuttal to “common idea I read over at blog Y, by commenter Z”.

FWIW: I don’t think code availability is the defining aspect of science. But code sharing is frequent in “real science”. It has been widely done in computational fluid dynamics. (I’m a mechanical engineer btw.)

Graduate students often benefit from obtaining base code from other groups and heading off from there. Do they arrive at their desks will all the codes form all groups bundled in an email? No.

But there is lots of sharing in engineering sciences and all research. Trying to claim otherwise is going to raise the eyebrows of anyone who has ever done research at a university or national lab.

carrot eater,

GISS is a publicly funded organization. EVERYTHING they do and have ever done (code, email messages, papers, and scribbles on the back of envelopes) is the legal property of the public, down to the last character in the smallest program they have ever written. What is crazy is that everything they do and have ever done has not always been automatically treated as the public property that It is.

.

And as you probably know, Phil Jones has been generously funded by the USA taxpayer as well. It is absurd that he was not contractually compelled to disclose his code and raw data as a condition of receiving that funding.

.

What anyone wants to do with that public property (code and/or raw data) is completely irrelevant.

It’s all in the “adjustments” made to the raw data. Willis Eschenbach has looked at Anchorage, Nashville, UK, Sydney. All have inexplicable adjustments to produce a warming effect where none was appparent in the raw data. Others have done similar examinations of adjustments and reached similar conclusions.

http://wattsupwiththat.com/?s=Guest+Post+by+Willis+Eschenbach

The difference between the raw data trend, and the adjusted data trend equals the alleged Warming.

Ultimately the explanation of why fraudulent adjustments were made to the raw data will be the basis for Criminal Prosecutions. The Scientists concerned can’t very well say they didn’t know what they were doing, so they will have to admit that they deliberately cooked the books.

Secondly Tamino and JohnV etc are only interested in getting their discrediting of Watt’s work in before he publishes his conclusion.

Thirdy: There’s a new paper out by Dr. Edward Long that does some interesting comparisons to NCDC’s raw data (prior to adjustments) that compares rural and urban station data, both raw and adjusted in the CONUS.

The paper is titled Contiguous U.S. Temperature Trends Using NCDC Raw and Adjusted Data for One-Per-State Rural and Urban Station Sets. In it, Dr. Edward Long states:

“The problem would seem to be the methodologies engendered in treatment for a mix of urban and rural locations; that the ‘adjustment’ protocol appears to accent to a warming effect rather than eliminate it. This, if correct, leaves serious doubt for whether the rate of increase in temperature found from the adjusted data is due to natural warming trends or warming because of another reason, such as erroneous consideration of the effects of urban warming.â€

“Dr. Long suggests that NCDC’s adjustments eradicated the difference between rural and urban environments, thus hiding urban heating. The consequence:

“…is a five-fold increase in the rural temperature rate of increase and a slight decrease in the rate of increase of the urban temperature.â€

The analysis concludes that NCDC “…has taken liberty to alter the actual rural measured valuesâ€.

Thus the adjusted rural values are a systematic increase from the raw values, more and more back into time and a decrease for the more current years. At the same time the urban temperatures were little, or not, adjusted from their raw values. The results is an implication of warming that has not occurred in nature, but indeed has occurred in urban surroundings as people gathered more into cities and cities grew in size and became more industrial in nature.” http://wattsupwiththat.com/2010/02/26/a-new-paper-comparing-ncdc-rural-and-urban-us-surface-temperature-data/

I am appalled at the lack of scientific rigour shown by those that suppose any of the adjusted data sets are any use at all. Shocking.

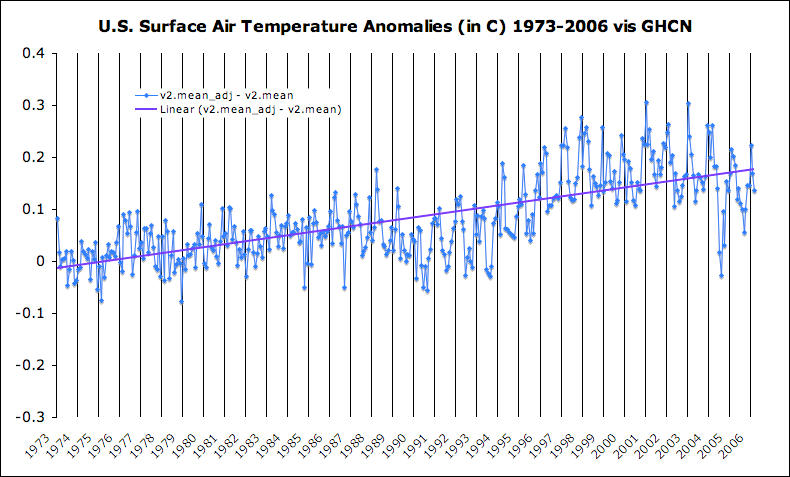

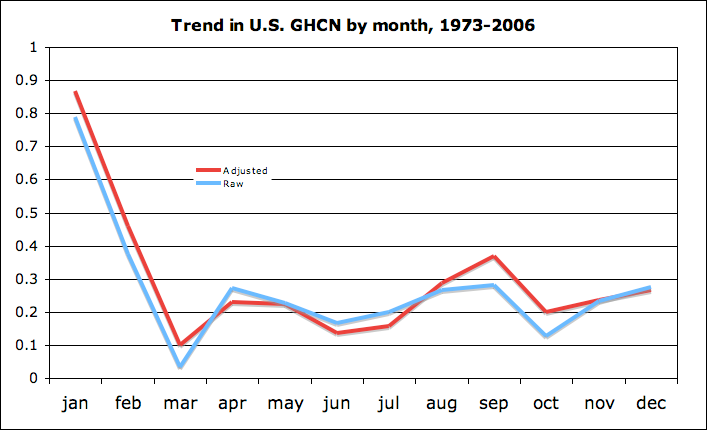

On a somewhat tangential note, there is an interesting secondary result to come out of these GHCN analysis; namely that the net effects of adjustments to the raw data appears to reduce the trend in temperatures.

Tamino showed this for the Northern hemisphere but gave no specific numbers (just a graph). Jeff Id’s analysis had the global raw data land trend at 0.233 C per decade for 1978-2010, compared to 0.19 C per decade for GISS.

.

Also, MarkR, methinks you are a tad out of your element.

“From time to time, people arrive here and start arguing with comments they remember from some post at another blog. ”

I mainly went in that direction because of something raven said at (Comment#35178), though upon re-reading it, she wasn’t quite saying the ‘it isn’t science’ thing.

“But there is lots of sharing in engineering sciences and all research. ”

Yes, but it also isn’t nearly as open source as some would appear to think. Even if I was actively collaborating with somebody, we might do our calculations in parallel, just to make sure. It gives you a lot more confidence when two people working independently find the same thing. In the cases where I got code from an outside group, I didn’t use it; I found it better to write my own.

“If your gripe is Anthony, then say so. ”

Seeing as I followed the links here through a discussion about the EM Smith station dropping thing, then that would be one major thing in mind at the moment. If you make a claim and publish it, do the analysis. If you didn’t do the analysis, don’t publish. Simple. Can we at least agree on that? Watts thinks he’s holding scientists accountable, but he’s got to be accountable as well.

“Is taking away that talking point a bad thing? ”

Just pointing out that it was a talking point. And again, I agree that GISS should be open, regardless of what the sceptics say, and I’m glad that it is.

“Steve has generally posted output form his similations with comparisons of the difference between his and other peoples results while also asking for code to track down the differences. ”

I was not aware of he or any other sceptic doing this for GISS; just the paleoclimate stuff, which I’m not into. I think you’d have to mess up pretty badly to not get a result more or less in line with GISS/GHCN/CRU/JMA, if you sat down to do it. Like, you’d have to use average together absolute temperatures…

“didn’t having the GISSTemp code help CCC emulate it and run their recent cases? Isnt that a change since before GISSTemp was released? ”

It’s helping them re-write it by looking at it. This’ll make sure there’s no simple stupid coding error in there. I maintain you could have started from scratch and done a pretty good of emulation without it; it’s a shame that apparently nobody tried, back then. It seems to me that the zekes, Brobergs and Taminos of the world aren’t heavily looking at the GISS code, but going about things their own way.

But what I’m saying is that nothing has changed from the sceptic camp. They’ve got the code, so then they FOIed for emails related to changes in the code. Really? Where does this end, and is it really related to science and understanding? Or is this a game?

Even with the code in hand, they don’t seem to be using it to answer their own questions.

“Tamino showed this for the Northern hemisphere but gave no specific numbers (just a graph). Jeff Id’s analysis had the global raw data land trend at 0.233 C per decade for 1978-2010, compared to 0.19 C per decade for GISS.”

Zeke,

These kinds of statements may justify something in your mind, but an average person doesn’t know what that is supposed to be, or why they should care about it. I certainly don’t know what difference it makes to the obvious… that the public has been lied to by climate scientists.

How does this help?

Andrew