With all the discussion of the new BEST results, there has been renewed interest in comparisons of different land records, with good discussions here and here for example. There has been quite a bit of confusion over differences between BEST, NCDC, CRUTemp, and GISTemp, with some commenters incorrectly arguing that the differences between NCDC/BEST and CRUTemp are due to data homogenization instead of spatial weighting.

Both NCDC and BEST spatially weight land temperatures in proportion to land area. NCDC uses spatial gridding with 5° by 5° lat/lon gridcells, while BEST uses a kriging approach. GISTemp’s published “land” record is actually an attempt to estimate global temperatures using only land stations with no land mask, and is the average of the anomalies for the zones 90°N to 23.6°N, 23.6°N to 23.6°S and 23.6°S to 90°S with weightings 0.3, 0.4 and 0.3, respectively, proportional to their total areas. CRUTem uses a land-area weighted sum (0.68 × NH + 0.32 × SH), which also differs in practice from the system employed by NCDC/BEST. It’s unclear whether CRUTem uses a land mask in calculating its hemispheric averages. Because of these differences in spatial weighting, simple comparisons of these published records can be quite misleading!
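To make the effect of the weighting concrete, here is a minimal sketch using the two sets of weights quoted above. The zonal anomaly values are made up for illustration, and the way the hemispheric means are built from the zones (splitting the tropics evenly by area) is a simplifying assumption, not how either group actually computes them.

```python
# Toy comparison of two global-averaging schemes. All anomaly values are
# hypothetical; only the weights come from the text above.

def giss_style_global(north, tropics, south):
    """Zonal average with the 0.3 / 0.4 / 0.3 weights quoted in the text."""
    return 0.3 * north + 0.4 * tropics + 0.3 * south

def crutem_style_global(nh, sh):
    """Land-area weighted sum of hemispheres: 0.68 * NH + 0.32 * SH."""
    return 0.68 * nh + 0.32 * sh

# Hypothetical zonal anomalies (deg C), with the most warming in the north.
north, tropics, south = 1.0, 0.5, 0.3

# Assumption for this sketch: each hemisphere is 0.3 extratropics plus
# 0.2 tropics by area, so hemispheric means follow from the zonal values.
nh = (0.3 * north + 0.2 * tropics) / 0.5
sh = (0.3 * south + 0.2 * tropics) / 0.5

giss = giss_style_global(north, tropics, south)
crutem = crutem_style_global(nh, sh)
print(f"zonal weighting: {giss:.3f}  hemispheric weighting: {crutem:.3f}")
```

With identical underlying anomalies, the hemispheric scheme comes out warmer simply because it gives the Northern Hemisphere more weight.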

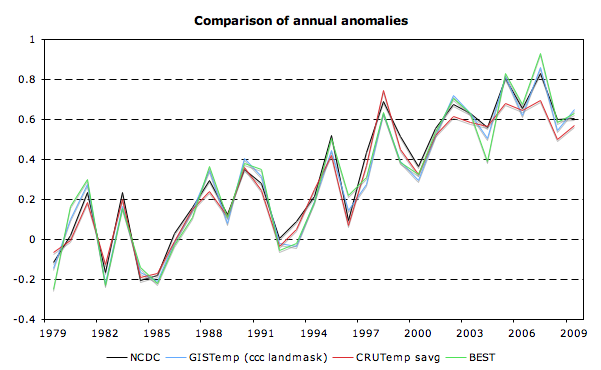

Thankfully, a number of intrepid commenters over at Nick Stokes’s blog took the time to track down land-only runs of GISTemp and CRUTem that are more directly comparable to the NCDC/BEST spatial weighting scheme. For GISTemp, this involved taking the Clear Climate Code implementation and applying a land mask (raw data available here). For CRUTem, this involved tracking down a (rather poorly advertised) simple weighted average version, available here (note that I’m still not sure if a land mask is being used or not). Once we use these sets, which have more comparable spatial weighting, we get much more similar results:

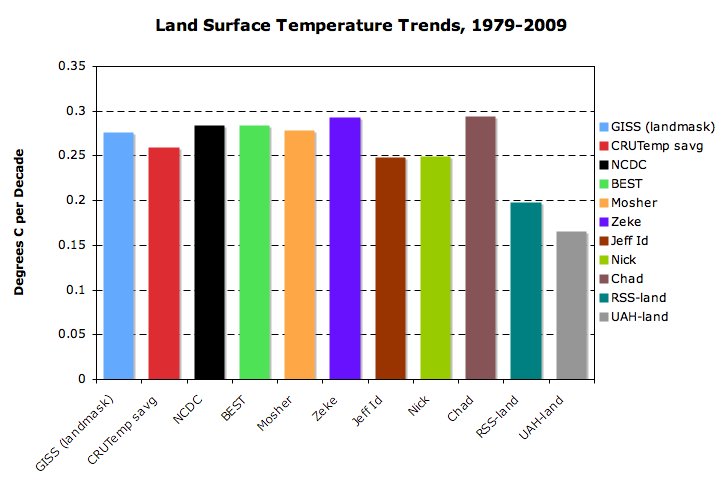

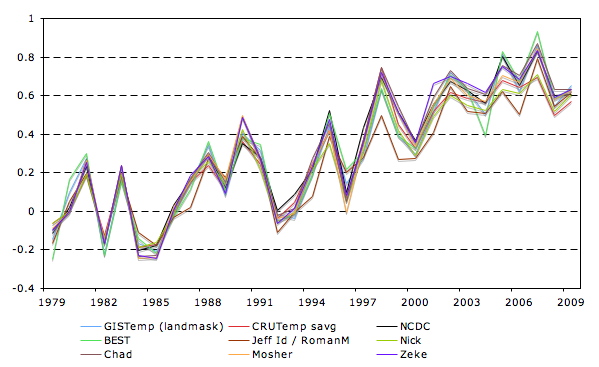

We can also compare these records to the various science blogging community reconstructions from last year. Recall that these reconstructions by and large used unadjusted GHCN data (v2.mean), and a methodology similar but not identical to that of NCDC. Note that a few of these (particularly Nick Stokes’s numbers) may be out of date (I think the version I have for Nick has no land masking, while a later release of his uses one):

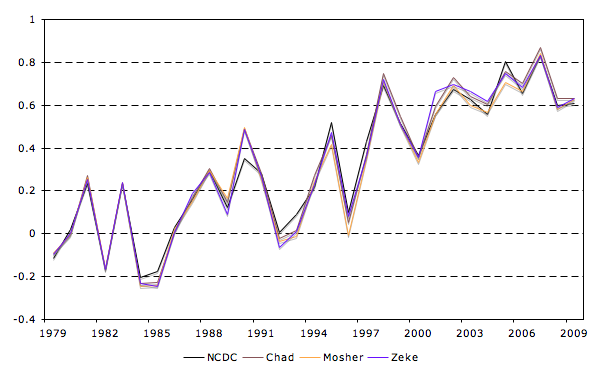

It’s worth pointing out that a few of the reconstructions (Chad’s, Mosher’s, and my own) almost perfectly replicate NCDC despite using no homogenization:

While there are some differences between the records, when using similar spatial weighting techniques all produce rather similar results. My hunch is that any remaining differences between CRUTemp savg and NCDC are due to the lack of a land mask, but I’m not sure how to check short of emailing the East Anglia folks.

Update: While Zeke is locked away in a cabin, I updated the images Zeke created using data from Gavin. I think I sorted the images out correctly. -lucia

You can always try an FOIA request.

Zeke,

There’s a useful discussion of this in the AR4, Sec 3.2.2.1:

“Most of the differences arise from the diversity of spatial averaging techniques. The global average for CRUTEM3 is a land-area weighted sum (0.68 × NH + 0.32 × SH). For NCDC it is an area-weighted average of the grid-box anomalies where available worldwide. For GISS it is the average of the anomalies for the zones 90°N to 23.6°N, 23.6°N to 23.6°S and 23.6°S to 90°S with weightings 0.3, 0.4 and 0.3, respectively, proportional to their total areas. …As a result, the recent global trends are largest in CRUTEM3 and NCDC, which give more weight to the NH where recent trends have been greatest.

…Table 3.2 (useful)

…

Further, small differences arise from the treatment of gaps in the data. The GISS gridding method favours isolated island and coastal sites, thereby reducing recent trends, and Lugina et al. (2005) also obtain reduced recent trends owing to their optimal interpolation method that tends to adjust anomalies towards zero where there are few observations nearby (see, e.g., Hurrell and Trenberth, 1999). The NCDC analysis, which begins in 1880, is higher than CRUTEM3 by between 0.1°C and 0.2°C in the first half of the 20th century and since the late 1990s. This is probably because its anomalies have been interpolated to be spatially complete: an earlier but very similar version (CRUTEM2v; Jones and Moberg, 2003) agreed very closely with NCDC when the global averages were calculated in the same way (Vose et al., 2005b). Differences may also arise because the numbers of stations used by CRUTEM3, NCDC and GISS differ (4,349, 7,230 and >7,200 respectively), although many of the basic station data are in common. Differences in station numbers relate principally to CRUTEM3 requiring series to have sufficient data between 1961 and 1990 to allow the calculation of anomalies (Brohan et al., 2006). Further differences may have arisen from differing homogeneity adjustments (see also Appendix 3.B.2). “

I haven’t used a land mask with TempLS. I haven’t done much with global land at all recently – mainly land/sea, and regional.

I would note that centering the anomalies in the center of the graphs over the time period provides a visual illusion that the graphs are the same. Pin them to 1979 and they look much different.

One should also note there were two large stratospheric eruptions which reduced global (as opposed to Land) temperatures by 0.45 and 0.5C respectively in March/April 1982 El Chichon, and June 1991 Pinatubo.

This effect increases the trend by 0.05C per decade in the UAH/RSS lower troposphere temperatures and some other amount in the Land temperatures.

The charts would look quite different with 0.5C added (who knows how much for Land temperatures) in 1982/83/84 and 1991/92/93.

Bill,

I’d avoid pinning them on a single year (1979) because of the possibility of reflecting noise more than anything else. However, an early baseline (1979-1984) appears nearly identical to the charts in the post: http://i81.photobucket.com/albums/j237/hausfath/Screenshot2011-11-04at62911PM.png
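For anyone who wants to try different baselines themselves, this is a minimal sketch of re-centering a series on a multi-year window. The function name and the two toy series are hypothetical; the real records would be loaded from the published files.

```python
def rebaseline(years, anomalies, start, end):
    """Shift a series so that its mean over [start, end] is zero."""
    window = [a for y, a in zip(years, anomalies) if start <= y <= end]
    if not window:
        raise ValueError("baseline window contains no data")
    offset = sum(window) / len(window)
    return [a - offset for a in anomalies]

# Two made-up annual series with different offsets and trends.
years = list(range(1979, 1989))
series_a = [0.10 + 0.02 * i for i in range(10)]
series_b = [0.40 + 0.03 * i for i in range(10)]

# Align both on 1979-1984 rather than pinning them to the single year 1979.
a_early = rebaseline(years, series_a, 1979, 1984)
b_early = rebaseline(years, series_b, 1979, 1984)
print(b_early[-1] - a_early[-1])  # divergence by the final year
```

Averaging over several years keeps one noisy year from setting the offset for the whole comparison.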

Nick,

Oddly enough, I quote from those paragraphs in the post (albeit with indirect attribution via a link to an earlier post with the AR4 language in it).

It looks to me like you’re using unweighted fits for CRUTEM3, BEST & NCDC.

Given that they have quoted uncertainties, does that really make sense?

We had a discussion on Nick’s blog on that. Tamino claims you can’t use the quoted errors, but I find his arguments unconvincing.

So far he’s the only one who’s “weighed in” on this.

I’ll repeat the comment I made on Nick’s blog that unless you know exactly how they are doing the land average and you’re sure it’s equivalent, land only comparisons are a bit of apples to oranges

With BEST using an unweighted least-squares-fit gives a very different answer:

BEST(unweighted) 0.279±?.??? °C/decade

BEST (weighted) 0.344±0.005 °C/decade

It doesn’t make that much of a difference for CRUTEM3:

CRUTEM3(unweighted) 0.268±?.??? °C/decade

CRUTEM3(weighted) 0.266±0.009 °C/decade

I was always taught a number without a CL is meaningless. I’d hate to insinuate that climate science is meaningless. 😉

On Nick’s blog, some people think “ordinary” is a synonym for “unweighted”. That seems like a pointless use of the word “ordinary” to me, and I pointed out to them that you can search for and find in google scholar papers that talk about “weighted ordinary least squares fit” and even “unweighted ordinary least squares fit”.

I have always assumed it refers to an optimization function that fits this pattern:

$latex L_2 = \sum_{n=1}^N W_n [y_n - (a + b x_n) ]^2$

You can have $latex W_n = 1/\sigma_n^2$ or even something like a Hann taper function. (In the latter case if your model is $latex a \sin\omega t+ b \cos \omega t$, you’ll end up deriving the formula for the Hann-weighted Fourier coefficient for frequency $latex \omega$.)
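As a concrete illustration of the weighted form, here is a minimal NumPy sketch that minimizes the $latex L_2$ function with $latex W_n = 1/\sigma_n^2$ (or $latex W_n = 1$ when no uncertainties are given). The function name and the toy data are hypothetical, and the returned matrix is only a meaningful parameter covariance if the quoted $latex \sigma_n$ are right and the residuals are uncorrelated.

```python
import numpy as np

def weighted_linear_fit(x, y, sigma=None):
    """Fit y = a + b*x by minimizing sum_n W_n * (y_n - (a + b*x_n))**2.

    W_n = 1/sigma_n**2 when sigma is given, otherwise W_n = 1 (unweighted).
    Returns (a, b, cov) where cov = inverse of X^T W X.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.ones_like(y) if sigma is None else 1.0 / np.asarray(sigma, dtype=float) ** 2
    X = np.column_stack([np.ones_like(x), x])  # design matrix, rows (1, x_n)
    XtW = X.T * w                              # X^T W for diagonal W
    cov = np.linalg.inv(XtW @ X)
    a, b = cov @ (XtW @ y)
    return a, b, cov

# Toy data: four points on y = x plus one outlier with a large quoted error.
x = [0, 1, 2, 3, 4]
y = [0, 1, 2, 3, 10]
sigma = [1, 1, 1, 1, 100]

a_unw, b_unw, _ = weighted_linear_fit(x, y)
a_w, b_w, cov_w = weighted_linear_fit(x, y, sigma)
print(f"unweighted slope {b_unw:.3f}, weighted slope {b_w:.3f}")
```

The unweighted fit is pulled toward the uncertain point, while the weighted fit largely ignores it, which is the kind of difference that can show up between weighted and unweighted trends.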

You can also have optimization functions for any power:

$latex L_p = \sum_{n=1}^N W_n |y_n - (a + b x_n) |^p$

With $latex W_n = \hbox{\it constant}$, minimizing $latex L_1$ minimizes the sum of the absolute values of the residuals, and minimizing $latex L_\infty$ minimizes the maximum absolute residual.

Zeke,

“…with some commenters incorrectly arguing that the differences between NCDC/BEST and CRUTemp are due to data homogenization instead of spatial weighting.”

“…almost perfectly replicate NCDC despite using no homogenization…”

You use “homogenization” for the operation that GISS uses in its UHI adjustment. This operation acts on trends in urban stations and has a final effect close to zero.

We also use the same term, homogenization, for the treatment that consists of removing the discontinuities in raw series. This operation is not implemented in the GISS global product but is implemented by CRU (and probably by GHCN or NCDC), and also by BEST in the particular form of slicing. It is not neutral but causes a warming of 0.5 °C over the twentieth century.

For comparison, it is better to use Northern Hemisphere land, which is less sensitive to the very different treatments of polar regions.

#85198

Carrick,

To add to the terminology, what you are talking about is diagonal weighting. W could be a general (symmetric pd) matrix kernel. That’s really the proper way of dealing with correlated residuals.

Nick, with respect to weighted least squares, I’m aware of an underlying relationship to maximum likelihood analysis (as MP so elegantly outlined on your blog).

One question is, is there a similar relationship for what I would call “generalized least squares”, where you replace the diagonal weighting with (what turns out to be) the inverse of the covariance matrix?

The other question is of course how one goes about building a covariance matrix.
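For reference, a sketch of the standard textbook form of generalized least squares, written in the same notation as the $latex L_2$ function above. Here $latex X$ is the design matrix with rows $latex (1, x_n)$, $latex \beta = (a, b)^T$, and $latex \Sigma$ is the covariance matrix of the residuals, which is assumed known; none of this is specific to any of the temperature analyses discussed here.

$latex L_{GLS} = (y - X\beta)^T \Sigma^{-1} (y - X\beta)$

$latex \hat\beta = (X^T \Sigma^{-1} X)^{-1} X^T \Sigma^{-1} y$

If the residuals are Gaussian with covariance $latex \Sigma$, then $latex -2 \ln \mathcal{L} = L_{GLS} + \hbox{\it constant}$, so minimizing $latex L_{GLS}$ also gives the maximum likelihood estimate. With a diagonal $latex \Sigma$ this reduces to the weighted form with $latex W_n = 1/\sigma_n^2$.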

We just had a discussion about this at Climate Audit. And as we concluded there, “the boys” are likely to be far off on their reconstructions since they don’t account for UHI at all and since the evidence for substantial UHI is very strong. To repost what I said on CA: —

–

First go back and read Steve M’s post on the subject:

–

http://climateaudit.org/2010/12/15/new-light-on-uhi/

–

And go back and read this NASA site on the subject:

–

http://www.nasa.gov/topics/earth/features/heat-island-sprawl.html

–

What did they find?

–

“Summer land surface temperature of cities in the Northeast were an average of 7 °C to 9 °C (13°F to 16 °F) warmer than surrounding rural areas over a three year period, the new research shows. The complex phenomenon that drives up temperatures is called the urban heat island effect.”

–

Here are some of the numbers:

–

Providence, RI – 12.2 C of UHI

Buffalo, NY – 7.2 C of UHI

Philadelphia, PA – 11.7 C of UHI

Lynchburg, VA – 5.5 C of UHI

Syracuse, NY – 10.6 C of UHI

Harrisburg, PA – 7.6 C of UHI

Paris France – 8.0 C of UHI

–

Note: Lynchburg has a population of only 70,000

–

Here is the abstract from the Imhoff paper:

–

http://pubs.casi.ca/doi/abs/10.5589/m10-039

–

“Globally averaged, the daytime UHI amplitude for all settlements is 2.6 °C in summer and 1.4 °C in winter. Globally, the average summer daytime UHI is 4.7 °C for settlements larger than 500 km2 compared with 2.5 °C for settlements smaller than 50 km2 and larger than 10 km2.”

–

And here are some charts from Spencer showing the relationship between UHI and population. Notice that the effect starts at very low population densities.

–

http://www.drroyspencer.com/wp-content/uploads/ISH-UHI-warming-global-and-US-non-US.jpg

–

It looks like even this young boy is able to find the UHI.

http://www.youtube.com/user/TheseData?blend=21&ob=5

–

–

I should also add that in the discussion with Zeke at CA, he could provide no evidence that homogenization actually removed any UHI effect at all. But instead it appears that it simply spreads that effect around. If homogenization actually removed UHI, then the amount of warming and the slope of the rise would both be reduced.

It’s absolutely amazing to me to see “skeptics” perform their game of obfuscation by conflating the UHI effect which is well known and undeniable, with the faith-based expectation of dramatically higher warming due to UHI. What this is really about is attacking any analysis that fails to prove what people “know” from the safety of their comfy chairs.

You can “remove” the UHI effect by splitting off rural sites and analyzing them seperately. You have to have a good urbanity proxy to do this, and you have to account for the lack of precision of the location in the station metadata. If warming of rural sites is no different then you have failed to show that UHI, on a global basis, contributes to the warming signal.

cce: “You have to have a good urbanity proxy to do this, and you have to account for the lack of precision of the location in the station metadata. If warming of rural sites is no different then you have failed to show that UHI, on a global basis, contributes to the warming signal.”

Maybe you didn’t read the links that I posted above. Substantial UHI contribution is a proven fact. Change in UHI at very low population densities is shown by Spencer’s diagram. Throwing all stations into two bins proves absolutely nothing. But even that weak effort yielded a significant UHI in Zeke’s own study. Problem is that Zeke thinks he can get rid of it by spreading it around.

“It’s absolutely amazing to me to see “skeptics” perform their game of obfuscation by conflating the UHI effect”

Stop talking like an AGW wacko!

Here is a highly simplified explanation of why the practice of taking stations and throwing them into either an “urban” or “rural” bin for the purpose of finding UHI simply doesn’t work.

Let’s say the year is 1950 and we are going to put a thermometer in a growing city. But the city is already there and already has a very high built density. So, let’s say that the city already has 1C of UHI effect. Over the next 60 years the city continues to grow, mostly around the perimeter. The UHI effect goes up, and by 2010 there is 1.5C of UHI effect. The thermometer was only there since 1950, so the thermometer will only see the delta UHI change from 1950 to 2010 as an anomaly. So, by that thermometer, the delta UHI effect for that period is .5C.

Now, in the same year, 1950, we put another thermometer into a medium size town. Let’s say that it has a UHI effect of .1C at the time we put the thermometer there. The town grows over the next 60 years, there is a lot of building that happens close to the thermometer, and by 2010 it has .6C of UHI effect. Again, the thermometer will not register that first .1C as anomaly. But it will register the next .5C as anomaly.

So, in 2010, what we end up with is that the urban thermometer has 1.5C total of UHI effect, and the rural thermometer has .6C of total UHI effect. But, the delta UHI for both thermometers since they were installed is .5C. It is that .5C that both of them will show as anomaly.

Now BEST comes along and decides that they will measure UHI by subtracting rural anomaly from urban anomaly. Let’s also say that there has been .3C of real warming over those 60 years. So the rural thermometer shows .8C of warming anomaly and the urban thermometer shows .8C of warming anomaly. BEST subtracts rural from urban and gets zero. Their conclusion is, “either there is no UHI or it doesn’t affect the trend”. But, as we have just seen, .5C of the .8C in the trend of both the urban station and the rural station were UHI.
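The arithmetic of this scenario can be written out in a few lines. This sketch only restates the hypothetical numbers from the example above (the dictionary layout and function name are made up for illustration); it says nothing about whether real stations behave this way.

```python
# Hypothetical scenario from the comment: both stations gain 0.5C of UHI
# between 1950 and 2010, on top of 0.3C of real warming.
REAL_WARMING = 0.3

stations = {
    "urban": {"uhi_1950": 1.0, "uhi_2010": 1.5},
    "rural": {"uhi_1950": 0.1, "uhi_2010": 0.6},
}

def observed_anomaly(station):
    """Anomaly seen by the thermometer: real warming plus the change in UHI."""
    return REAL_WARMING + (station["uhi_2010"] - station["uhi_1950"])

urban = observed_anomaly(stations["urban"])
rural = observed_anomaly(stations["rural"])
urban_minus_rural = urban - rural

print(f"urban {urban:.2f}, rural {rural:.2f}, difference {urban_minus_rural:.2f}")
```

Under these assumed numbers the urban-minus-rural difference is zero even though UHI accounts for most of each station's anomaly, which is the point the scenario is built to make.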

With their results, BEST has failed to discover the pre thermometer urban UHI effect, the post thermometer urban UHI effect, the pre thermometer rural UHI effect, and the post thermometer rural UHI effect. They have also failed to discover the UHI addition to the trend in either place. In other words, their test is a total fail. Even if they did their math perfectly, ran their programs perfectly, and did their classification perfectly, their answer is still completely wrong. Why? Because the design of the test never made it possible to quantify UHI. Now, many of you may object to my scenario.

Some of you may wonder if it is reasonable to expect a small town to grow at a rate that pushes up the delta UHI as fast as a city. This is where the definitions of rural and urban come in. Modis defines an urban area as an area that is greater than 50% built, and there must be greater than 1 square kilometer, contiguous, of such an area. So, for example, if you have two .75 square kilometer areas that are 60% built, separated by one square kilometer of 40% built, it’s all rural. So the urban standard is high enough that an area must be strongly urban to qualify. The rural standard is anything that is not urban. And that allows for a whole lot of built. 10 square kilometers of 49% built is all classified as rural.
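A minimal sketch of the classification rule as it is described in this comment (more than 50% built over more than 1 square kilometer, contiguous). The function and its inputs are hypothetical, each patch is treated as its own contiguous region, and the rule itself has not been checked against the MODIS documentation.

```python
def classify_patches(patches, built_threshold=0.5, min_area_km2=1.0):
    """Label each (area_km2, built_fraction) patch as 'urban' or 'rural'.

    Simplification: every patch is treated as one contiguous region of
    uniform built fraction; neighbouring dense patches are not merged.
    """
    return [
        "urban" if built > built_threshold and area > min_area_km2 else "rural"
        for area, built in patches
    ]

# The example from the comment: two 0.75 km2 areas at 60% built,
# separated by 1 km2 at 40% built.
example = classify_patches([(0.75, 0.60), (1.0, 0.40), (0.75, 0.60)])

# 10 km2 at 49% built, and for contrast 2 km2 at 60% built.
others = classify_patches([(10.0, 0.49), (2.0, 0.60)])
print(example, others)
```

Under this reading of the rule, only the last patch qualifies as urban.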

BEST then goes on and further refines the rural standard as “very rural” and “not very rural”. Unfortunately, they make no new build requirements for “very rural”. The only new requirement is that such an area be at least 10 kilometers from an area classified as urban. But a “very rural” place could still have up to 49% build.

This means that you can have towns, small cities, and even some suburbs that are classified as rural. In such areas there is still plenty of room to build and build close to the thermometer. In the urban areas, there is little room to build. So either structures are torn down in the city to make room for new structures, or structures are put up at the edge of the city, expanding it. The new structures being put up at the edge of the city are far from the thermometer and while they still affect it, the further away they are, the less effect they have.

In the rural area there is still space to grow close to the thermometer. So, in the rural area you can actually have more UHI effect with less change in the amount of build. So, if a rural area goes from 10% built to 30% built it will still be rural and it can have the same UHI effect on the thermometer as the city where most of the new building is around the edges. The urban area may go from 75% built to 85% built around the thermometer, and it may have its suburbs growing, but the total effect will be close to that of the rural build.

All of this is essentially confirmed by Roy Spencer’s paper and by BEST’s own test results.

Of course this doesn’t apply to “the boys” reconstructions since they did nothing at all about UHI.

cce, rural sites generally have been tampered with by anthropogenic activity. Sometimes the effect is to decrease night time temperatures (what happens when you cut down all of the trees and increase wind exposure) and sometimes it is to cool daytime temperatures (irrigation). I can’t think of any rural only mechanisms that would lead to warming, but I would suppose they exist.

Anyway, you can’t just go to rural only stations to get away from anthropogenic influence on your stations.

cce,

huh?

That’s exactly what the kid and his dad explained and did in the youtube video, or are you being sarcastic? or do you criticize without viewing and reading first?

“It’s absolutely amazing to me to see “skeptics” perform their game of obfuscation by conflating the UHI effect”

It’s absolutely amazing to me to see “warmers” perform their game of obfuscation by rationalizing for anything to increase warming and against anything to decrease it.

Carrick: Anyway, you can’t just go to rural only stations to get away from anthropogenic influence on your stations.

Agree. It seems to me that people go through this action of parsing stations into two sets, rural and urban. Then having done that they think that all of their urban stations are in skyscraper land and all of their rural stations are in national parks. Problem is that the real thermometers are spread across the entire spectrum between those two kinds of places – with very few actually being in national parks. Then, when you draw a line and split them in two, you get a lot of those thermometers that barely make it to one side of that line or the other. And, as Spencer’s diagram shows, the effect starts at very low population densities. And with the two bin method you only get to see delta UHI effect, not total UHI effect.

As a layperson in these matters I am always impressed with how a graph of several series can show excellent correlation over time yet have significantly different trends over the same period. When most of us compare temperature series we are most interested in trends over a given period of time. Always helpful, but not conclusive in determining significant differences without further calculations, are presenting CIs for the trends or noting if CIs have not been calculated.

If we peruse Zeke’s trend chart we would expect there to be significant differences in the trends calculated from the various temperature series noted if we accept the BEST CIs of -/+ 0.005 degrees C per decade or the CRUTemp -/+ 0.009 degrees C per decade. If we assume significant differences then some or all series trends could be in error. Or we could assume that the CIs are erroneously too small and all these trends are part of the same distribution. For the non satellite series it should not be all that surprising that the trends are as close as they appear in Zeke’s graph – as the sources are nearly all the same.

I am not at all certain that the rather narrow CIs presented for the various temperature series trends and particularly those going back in time have accounted for all the uncertainties. I think part of this uncertainty might arise from assumptions made about the homogenizing algorithms and the kinds of non homogeneity in the station records. Menne hints at this in his paper on breakpoints and his algorithm for determining and replacing bad data. Some kinds of changes would be difficult to find and others that are legitimate climate changes might cause false alarms.
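One rough way to check whether two quoted trends differ significantly is a simple z-score on their difference. This sketch uses the weighted BEST and CRUTEM3 trends quoted earlier in the thread, and it assumes that the quoted ± values are one-sigma uncertainties and that the two estimates are independent. Neither assumption is confirmed here, and the second is doubtful since the records share most of their station data.

```python
import math

def trend_difference_z(trend1, sigma1, trend2, sigma2):
    """z-score of the difference between two trends with independent errors."""
    return (trend1 - trend2) / math.sqrt(sigma1 ** 2 + sigma2 ** 2)

# Weighted trends (deg C per decade) as quoted earlier in the comments.
z = trend_difference_z(0.344, 0.005, 0.266, 0.009)
print(f"z = {z:.1f}")
```

A z-score this large would mean that either the series really do differ or the quoted uncertainties are too small, which are the two possibilities raised above.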

The way I read the Menne paper on replacing a breakpoint regime deemed legitimate is to replace that regime or a minimum length of the series with a segment from a neighboring station series that is estimated to represent a median difference with the station series in question. Alternatively the BEST process does not replace any of the segments in error but instead down weights that segment in their summary calculation.

I have been determining significant breakpoints for all the USHCN stations in the before breakpoint adjustments, i.e. the maximum and minimum TOB monthly series and the after breakpoint adjusted series, i.e. the maximum and minimum Adjusted monthly series. According to my reading of the Menne paper the breakpoint of a difference series between neighboring stations should correspond with a breakpoint that would be found in the station series in question. The differences series are used to more readily find legitimate breakpoints due to station changes and avoid making any adjustments with breakpoints that are common to neighboring stations and assumed to be due to climate changes. Looking at breakpoints with all neighboring stations takes extraordinarily lengthy recursive computer calculations and thus I have been looking at individual station series breakpoints and assigning those breakpoints to climate and station changes. In doing so I have found what could be considered both over and under corrections, but this needs further work and analyses.

I was also surprised to see how differently the breakpoints occurred for the maximum and minimum temperature series of individual stations and further how consistent the breakpoints were for the averaged series of TOB and Adjusted for both maximum and minimum series (I found a single break date for each series that was common to all four series) given the wide range of break dates found even in the Adjusted maximum and minimum series for individual stations. All this indicates to me that there are a number of rather localized climate changes that occur over time that tend to average to the same year/month for the entire US. I am not sure how this process would fit the model of an overriding climate background superimposed on local weather variations.

Unfortunately I’m not at my normal IP address this weekend (I’m in a cabin in the woods), and hence cannot update the post, but Gavin sent me a link to the actual GISTemp land masked proportionally spatially weighted data: http://www.columbia.edu/~mhs119/Temperature/T_moreFigs/Tanom_land_monthly.txt

This shows a somewhat higher trend than the CCC landmasked data, and brings it much more closely in line with BEST/NCDC. Updated figures are below:

http://i81.photobucket.com/albums/j237/hausfath/Fig1.png

http://i81.photobucket.com/albums/j237/hausfath/Fig2.png

http://i81.photobucket.com/albums/j237/hausfath/Fig3.png

http://i81.photobucket.com/albums/j237/hausfath/Fig4.png

phi,

By homogenization I mean the use of BEST’s scalpel or NCDC’s PSA. For comparison, all the blogger-created records (mine, Mosher’s, Chad’s, Nick’s, Jeff’s, etc.) use the raw GHCN data with no adjustment to individual station records. These do not attempt to correct for UHI or other factors, but do show that the net effect of NCDC and BEST’s homogenization is relatively small compared to a simple reconstruction using the raw data.

Tilo Reber,

As I mentioned over at CA, we used a rural-only set to homogenize to test if spreading was possible. This idea was developed based on Troy_CA’s excellent work on the subject. We found that some spreading is possible when using all stations (both urban and rural) to correct for inhomogeneities, but the net effect is relatively small. You might disagree that this approach can correctly eliminate the risk of spreading due to residual UHI at rural sites, but we specifically tested it with four different urbanity proxies (ISA, Nightlights, GRUMP, and Historical Pop Growth) to try and test different gradations of urban. We even tested all possible cutoff values for continuous proxies like ISA. If you have a suggestion of a “truly rural” proxy that can get around the issue of residual UHI, I’d love to try that as well.

Zeke: “These do not attempt to correct for UHI or other factors, but do show that the net effect of NCDC and BEST’s homogenization is relatively small compared to a simple reconstruction using the raw data.”

Which tells you that the BEST and NCDC homogenization does nothing to remove UHI.

Carrick,

I’m talking about the “urban heat island” effect. Microsite changes would be mostly handled by the scalpel, and Fall et al did not find a bias in the mean temperature trends due to such contamination. Increased irrigation would reduce trends. Wide scale land use change is another issue and I haven’t read enough about it, although the scalpel might detect the change (for example, clear cutting a forest to make room for crops).

Kermit,

Unless the kid and his dad created time series over the past 30 years and compared the amount of warming at each location throughout the city and countryside independently and then replicated that all over the globe, the youtube video isn’t “exactly” what I am talking about.

Tilo,

Please link to studies that actually say what you are asserting. Do not link to studies or blog posts that show that urbanized areas are warmer than rural areas, since that is obfuscation in its purest form. I want to see actual estimates of the contribution of UHI to the global warming signal, not dataless worst case scenarios that exist in someone’s head.

Zeke,

Have you tried a union of all urbanity proxies? And then allowed for a large “cushion” between those areas and the stations? With the BEST dataset, you should probably have adequate coverage since at least the early seventies.

“As I mentioned over at CA, we used a rural-only set to homogenize to test if spreading was possible.”

Yes, and it gave you a different result than homogenizing without that limitation. And the temperature reconstructions for “the boys” above don’t even do rural-only homogenization.

“You might disagree that this approach can correctly eliminate the risk of spreading due to residual UHI as rural sites, but we specifically tested it with four different urbanity proxies (ISA, Nightlights, GRUMP, and Historical Pop Growth) to try and test different gradations of urban. ”

It really doesn’t matter about the urbanity proxies. You still created two bins, some very close to 50/50. And that won’t show you what you are looking for. Like I said above, the simple action of automatic parsing of stations into a rural bin and an urban bin doesn’t get you skyscraper land in one bin and national parks in the other – no matter which of the methods you use. Because the thermometers are not just in skyscraper land or national parks. In fact, the minority are probably in those areas.

Zhang and Imhoff and Spencer do a good job of identifying the problem. Look at what they did. Just because you don’t have an easy way of letting the computer do the work for you doesn’t mean that the problem isn’t there.

Like I said before, if your homogenization doesn’t reduce the temperature and the slope of the warming trend, then you haven’t done anything except spread the UHI around. UHI is not a discontinuity. And you don’t do anything about it by adjusting to nearby coop stations, since they have the same UHI problems themselves. And the two bin method only gives you delta anomalies where you could easily have as much delta growth near rural thermometers as near urban thermometers.

Zeke,

“By homogenization I mean the use of BEST’s scalpel or NCDC’s PSA.”

Okay, so there is a serious problem somewhere because all the case studies that I know (New Zealand, United States, Switzerland, Alpine region) show that the raw series are characterized by a discontinuity bias of 0.5 °C in the twentieth century. Do you have a regional case study that would show a different result?

Update: Zeke emailed and asked that I update some images. I substituted some, I hope without having screwed anything up. If the images seem to be mismatched, let me know.

Does anyone know why my reconstruction came out at the bottom? All I did was pile the data and use LS regression to offset it. The trend was lower if I used anomaly methods. I’m not familiar enough with the non-standard blogger series to know what is happening.

jeff, it depends on the integration period:

Tilo Reber (Comment #85227)

November 5th, 2011 at 11:37 am

Here is a highly simplified explanation of why the practice of taking stations and throwing them into either an “urban” or “rural” bin for the purpose of finding UHI simply doesn’t work.

That makes sense to me. The BEST methodology is simply saying that for UHI to be true, large cities on average must be warming faster than smaller towns.

This could introduce Simpson’s Paradox or similar problems, because averaging could be masking other causes of warming. A small town growing rapidly could, for example, show greater warming than a large town that is no longer growing, something that the BEST methodology would not detect. While this would be evidence for UHI, BEST would see this as evidence that UHI does not exist.

To avoid this, BEST should have compared the rate of warming with the rate of urbanization at individual stations before averaging. Something BEST failed to do.
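A toy illustration of the point may help. The sketch below is entirely hypothetical: the four stations, the background trend and the sensitivity to urbanization are made-up numbers, not BEST data. It only shows how a two-bin comparison can come out negative even though every station's trend is driven upward by its own urbanization rate.

```python
# Hypothetical stations: (label, size bin, urbanization rate in arbitrary units).
# Assumption for illustration only: trend = background + sensitivity * rate.
BACKGROUND_C_PER_DECADE = 0.15
SENSITIVITY = 0.5

stations = [
    ("big city, stagnant", "urban", 0.00),
    ("big city, slow growth", "urban", 0.05),
    ("small town, fast growth", "rural", 0.30),
    ("small town, static", "rural", 0.00),
]


def trend(rate):
    """Warming trend of a station under the assumed linear model."""
    return BACKGROUND_C_PER_DECADE + SENSITIVITY * rate


def bin_mean(bin_name):
    """Average trend of all stations in one size bin."""
    values = [trend(rate) for _, b, rate in stations if b == bin_name]
    return sum(values) / len(values)


def slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


# Two-bin comparison: urban mean trend minus rural mean trend.
urban_minus_rural = bin_mean("urban") - bin_mean("rural")

# Per-station comparison: trend regressed on urbanization rate.
rates = [rate for _, _, rate in stations]
trends = [trend(rate) for rate in rates]
per_station_slope = slope(rates, trends)

print(f"urban minus rural: {urban_minus_rural:+.4f} C/decade")
print(f"trend per unit urbanization: {per_station_slope:+.2f}")
```

In this made-up case the bin difference is negative while the per-station slope recovers the assumed sensitivity, which is the distinction the comment is drawing.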

Carrick #85125,

Actually, “generalized least squares” is what it is called. This is one area poorly covered by Wiki, but the basic algebra is here. Here are some Cornell notes.

The matrix algebra is a little more complicated than just inverting the correlation matrix. But you do have to do that, which is a kind of robust estimation problem. It needs empirical variogram kind of stuff. A common method seems to be to guess the structure and fit a scale factor.

I expected BEST would do this, using kriging for the estimator, but I don’t think their approach is equivalent.
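For readers who want to see the algebra, here is a minimal sketch of generalized least squares in NumPy. The AR(1)-shaped matrix `V`, the value `rho = 0.6` and the synthetic series are assumptions chosen only for illustration ("guess the structure and fit a scale factor"); nothing here is taken from BEST's code.

```python
import numpy as np


def gls_fit(X, y, V):
    """Generalized least squares: beta = (X' V^-1 X)^-1 X' V^-1 y.

    V is the assumed correlation structure of the errors; an overall
    scale factor is fitted from the weighted residuals.
    """
    Vinv_X = np.linalg.solve(V, X)
    Vinv_y = np.linalg.solve(V, y)
    XtVinvX = X.T @ Vinv_X
    beta = np.linalg.solve(XtVinvX, X.T @ Vinv_y)
    resid = y - X @ beta
    dof = len(y) - X.shape[1]
    scale = (resid @ np.linalg.solve(V, resid)) / dof  # fitted scale factor
    cov_beta = scale * np.linalg.inv(XtVinvX)
    return beta, cov_beta


# Synthetic example: 60 annual values with time measured in decades.
n = 60
t = np.arange(n) / 10.0
X = np.column_stack([np.ones(n), t])

# Guessed structure: correlation decays as rho**|lag| (an assumption).
rho = 0.6
lags = np.abs(np.subtract.outer(np.arange(n), np.arange(n)))
V = rho ** lags

rng = np.random.default_rng(0)
y = 0.1 + 0.2 * t + 0.1 * rng.standard_normal(n)

beta, cov_beta = gls_fit(X, y, V)
print("trend (per decade):", beta[1])
print("trend standard error:", np.sqrt(cov_beta[1, 1]))
```

With `V` set to the identity matrix this reduces to ordinary least squares, which is a quick sanity check on the algebra.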

ferd: A small town growing rapidly could for example show greater warming than a large town that is no longer growing,

The large town could even be growing at a healthy rate, but more at the edges than near the built-up center, where there is not much room. Both the volume of growth and the proximity to the thermometer make a difference.

cce: “Unless the kid and his dad created time series over the past 30 years and compared the amount of warming at each location throughout the city and countryside independently and then replicated that all over the globe, the youtube video isn’t “exactly” what I am talking about.”

–

You don’t need a time series to tell you that a significant difference exists between the city and the countryside, or that it got that way through time. And the sites were compared independently. And you don’t need the entire globe, because assuming that we have strong UHI in the US but not in the rest of the world is absurd. So, if nothing else, the kid’s finding can tell you that the BEST UHI result is stupid. Of course Zhang and Imhoff and Spencer proved that as well.

–

cce: “Please link to studies that actually say what you are asserting.”

–

Spencer’s chart shows it to you. Imhoff’s paper quantizes the effect:

–

“Globally averaged, the daytime UHI amplitude for all settlements is 2.6 °C in summer and 1.4 °C in winter. Globally, the average summer daytime UHI is 4.7 °C for settlements larger than 500 km2 compared with 2.5 °C for settlements smaller than 50 km2 and larger than 10 km2.”

“I want to see actual estimates of the contribution of UHI to the global warming signal”

Yes, you would think that having spent 50 billion trying to prove that mankind is guilty, that someone would have taken the known effect and integrated it as a part of the whole. But that would have gone counter to what they were desperate to prove. But if the average amplitude is 2.6C for all settlements that should give you a clue. “All settlements” likely includes 50% of the thermometers or more. So even if you distribute that 2.6 C summer + 1.4C winter / 2 = 2C from just settlements to all thermometers it is still going to be big. Even if you go to the extreme and use the 27% of the thermometers that are known to be in cities and take 27% of 2C, you still get .54C of UHI across all thermometers. But we know from Spencer’s chart that the UHI effect starts at very low population levels, so the number is likely much higher than .54C.

Now if you want time series integrated into a global result, just give me a Mann sized grant and I’ll do the work.
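The back-of-envelope figure in the comment above can be reproduced in a few lines. This is only a sketch of the commenter's own arithmetic, using the Imhoff amplitudes and the 27% station share quoted in this thread; the variable names are made up for illustration, and the calculation says nothing about whether an absolute daytime UHI amplitude translates into a bias in the temperature trend.

```python
# Figures quoted in the thread (Imhoff daytime UHI amplitude, all settlements).
summer_uhi_c = 2.6
winter_uhi_c = 1.4

# Share of GHCN-M stations in cities over 50,000 people, as quoted from BEST.
urban_station_share = 0.27

# Simple summer/winter average, as done in the comment.
mean_uhi_c = (summer_uhi_c + winter_uhi_c) / 2

# Spread the urban-only amplitude over all thermometers.
spread_over_all_c = urban_station_share * mean_uhi_c

print(f"mean amplitude: {mean_uhi_c:.2f} C")
print(f"spread over all stations: {spread_over_all_c:.2f} C")
```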

Tilo.

Spencer used an Alpha population data product and did no QA on station locations. His is an interesting IDEA, but hardly a compelling case given the inaccuracies in the data he used.

cce (Comment #85246)

November 5th, 2011 at 12:56 pm

Zeke,

Have you tried a union of all urbanity proxies? And then allowed for a large “cushion” between those areas and the stations? With the BEST dataset, you should probably have adequate coverage since at least the early seventies.

########################################

I won’t pull a Muller, but let’s just say that this approach is on the table. with a couple twists.

There are other confounding factors as well. One can, for example, take one of the proxies ( say lights) and create two piles

No lights: bright Lights. so the extremes of the proxy. And you can show a difference. So compare the top 10% to the bottom 10%

But then you have a spatial coverage issue.

You can do the union of all proxies, but then, you lose places like South America, parts of africa, India, eastern Coastal China.

so you kinda have a trade off between finding the signal by looking at extremes and having enough stations to do a decent global average.. of course you could say.. pick the best and live with the uncertainty.. in the end we are fighting over less than .1C decade.

Thats a fun fight, but it’s side discussion if you want to discuss AGW. Frankly I prefer the side discussion more and more. fewer idiots

Tilo

So even if you distribute that 2.6 C summer + 1.4C winter / 2 = 2C from just settlements to all thermometers it is still going to be big.

You have to ascertain whether Imhoff was discussing the effect on Tmean or Tave. Next you need the Fall and Spring numbers.

Next you have to realize that the size of places he was looking at are typically not in the station database.

Mosher: Even if he is off by 20-30% the idea would hold. And Imhoff wasn’t using any alpha products to come up with 2.6C summer and 1.4C winter of UHI for all settlements. So even if “settlements” meant nothing but the 27% of thermometers known to be in cities, and even if everything else had zero UHI, you would still get .54C of UHI as an average for every thermometer on the planet.

Mosher: “You have to ascertain whether Imhoff was discussing the effect on Tmean or Tave. Next you need the Fall and Spring numbers.”

Nope, I don’t need any of it to know that the UHI effect is large and to know that using .01C is stupid and to know that the BEST negative UHI effect is even stupider. You are grasping for straws again Mosher. Are you going to suggest that fall and spring have a negative UHI effect now? Please don’t waste my time.

Jeff Id,

I’m almost sure it’s due to the lack of a land mask; with one, your and Nick’s reconstructions should match mine/Chad’s/Mosh’s.

Carrick,

You might be using a dated file. With the latest version I get this for 1950-2009: http://i81.photobucket.com/albums/j237/hausfath/Screenshot2011-11-05at33658PM.png

The data file is here: http://www.2shared.com/document/b4cq5AnU/BEST_scratchwork.html

Tilo. .54C? over on CA you were saying more like .1C

Further, I’m not suggesting that Fall and Spring are 0. strawman

Im suggesting you actually read Imhoff.

Next, look at what LST is and what it is not.

Next where do you get a 27% number? and is that the same criteria as Imhoff?

Look, I do not disagree with the approach, but you need to be a bit more consistent and diligent.

On spencer. do you even know the dataset he used?

do you know that the locations in that dataset are good?

are they good enough to use with a 30 arc second population dataset?

Do you know how that dataset was created?

Trying to draw conclusions from web posts and paper abstracts is Bruce like. you are better than that.

Interestingly enough, one can quite easily think of a case where there is a very large absolute UHI with no (or slightly negative) UHI trend. Central Park comes to mind. That said, I am suspicious of the BEST negative UHI result as well, though it’s worth pointing out that BEST is using homogenized (via scalpel) rather than raw data in their comparisons.

the satellite data would differ significantly from thermometer data, if the UHI effect was anything close to what Tilo suggests.

apart from that, the “U” in UHI comes from the word “URBAN”. Tilo suggests a “UHI” effect in villages and the countryside, which is stronger than what we see in cities. that is “urban” places, where we get zero light data at night.

and Steven Mosher is right. Tilo forgot to mention, what his data is. day max? minimum? monthly averages? with windy days or without?

Zeke,

If you are saying that deweighting of ocean/land squares by the land mask would reduce trend, I think that makes sense. Roman’s method really should produce a higher trend with the same data and gridding so it was disappointing to see the result at the bottom.

Mosher “Tilo. .54C? over on CA you were saying more like .1C”

That was “per decade” since 79.

“Next where do you get a 27% number?”

From BESTs UHI paper.

“Urban areas are heavily overrepresented in the siting of temperature stations: less than 1% of the globe is urban but 27% of the Global Historical Climatology Network Monthly (GHCN-M) stations are located in cities with a population greater than 50,000.”

“and is that the same criteria as Imhoff?”

Well, Imhoff says this:

“Globally, the average summer daytime UHI is 4.7 °C for settlements larger than 500 km2 compared with 2.5 °C for settlements smaller than 50 km2 and larger than 10 km2.”

For comparison, Philadelphia is 790 km2, Lynchburg VA, with a population of only 70,000 is 29km2. So if the 27% is everything above 50,000 population, and if Imhoff is including everything over 10km2, then Imhoff’s study probably includes more than 27% of the cities. So I’m giving you the slop on everything you bring up Mosher, and you keep bickering as though there were some magic that was going to make the truth go away. UHI is large – accept it.

Sod: “the satellite data would differ significantly from thermometer data, if the UHI effect was anything close to what Tilo suggests.”

–

It is significantly different from the thermometer data, Sod. But go back and read all my posts along with the links I provided before you get started. I don’t want to have to write all of that all over again for your benefit.

Mosher: “So compare the top 10% to the bottom 10%”

“But then you have a spatial coverage issue.”

–

I would say use the top 5% and the bottom 5%. You don’t need spatial coverage. Get representative coverage. Whatever thermometers you use, make sure that their breakdown covers deserts, polar areas, shore areas, city areas, farming areas, some different elevations, and continents in proportion to the reality of the globe. Over the long haul, convection is going to move any global change around to where it is seen everywhere. A hundred thermometers, with no adjustments required or moves made and correctly distributed, simply averaged together should tell the story better than thousands of the “homogenized” or “kriged” broken and spliced, grided, TOBd, MMTSd, interpolated, extrapolated, etc., etc., etc., set. But of course if it’s really fun to do all that math and programming, then it’s absolutely necessary. Kind of a shame about the proxy data, however. Because if all that is required to produce a meaningful number, then you can’t get there with proxy data.

Oops:

1 Using a minimum and maximum temperature dataset exaggerates the increase in the global average land surface temperature over the last 60 years by approximately 45%

2 Almost all the warming over the last 60 years occurred between 6am and 12 noon

3 Warming is strongly correlated with decreasing cloud cover during the daytime and is therefore caused by increased solar insolation

4 I’ll then add a part 4 covering some additional analysis of mine

Reduced anthropogenic aerosols (and clouds seeded by anthropogenic aerosols) are the cause of most the observed warming over the last 60 years.”

“I find it surprising to say the least, that Tom Karl, Director Of The National Climate Data Center hasn’t in the last 20 years investigated whether minimum temperatures (which he correctly states occurs in the early morning) do indeed measure nighttime temperatures, as this is perhaps the most important assumption underlying the evidence for AGW.”

How many have died for the false AGW theory?

Trust the Aussies not to take the bait.

Jonathan Lowe, Philip Bradley and Bishop Hill

http://bishophill.squarespace.com/blog/2011/11/4/australian-temperatures.html

There are two different issues. The first, is there an urban heat island effect, and the answer is obviously yes.

The second is, has the magnitude of the heat island effect affected temperature anomalies measured in urban areas, and the answer there is no, because urban densities have remained constant over more than a century.

Where the anomalies have changed due to heat island increases is suburban areas, and Eli strongly suspects in small towns which have lost population.

“The second is, has the magnitude of the heat island effect affected temperature anomalies measured in urban areas, and the answer there is no, because urban densities have remained constant over more than a century.”

No, an increase in urban area resulting from growth around the edges will also increase the effect. And no again because many areas that are urban now were not urban a hundred years ago. But yes, development around the edges of a city doesn’t effect thermometers as much as development close by.

“Where the anomalies have changed due to heat island increases is suburban areas,”

Very likely.

“and Eli strongly suspects in small towns which have lost population.”

And thermometers.

Here is the population chart.

http://en.wikipedia.org/wiki/File:World-Population-1800-2100.png

Denver 1898

http://en.wikipedia.org/wiki/History_of_Denver

Denver today:

http://www.rexwallpapers.com/wallpaper/Denver-1/

Mosher,

As Tilo says “I would say use the top 5% and the bottom 5%. You don’t need spatial coverage. Get representative coverage.”

So could you start from the ends and work your way to the middle and see if there are any patterns that emerge?

Zeke:

You were right… I had the latest file downloaded but hadn’t converted it. It just made things worse (makes sense, they were reporting TMAX instead of TAVG before).

Have you tried the weighted least squares? These are what I get (not including confidence intervals):

BEST unweighted: 0.279

BEST weighted: 0.344

There is currently a difference in approach to climate science between the sceptical Baconian empirical approach, solidly based on data, and the Platonic IPCC approach, based on theoretical assumptions built into climate models. The question arising from the recent Muller/BEST furore is: what is the best metric for a global measure of, and for discussion of, global warming or cooling? For some years I have suggested in various web comments and on my blog that the Hadley Sea Surface Temperature data is the best metric, for the following reasons. (Anyone can check this data for themselves: Google Hadley Cru, scroll down to SST GL and check the annual numbers.)

1. Oceans cover about 70% of the surface.

2. Because of the thermal inertia of water – short term noise is smoothed out.

3. All the questions re UHI, changes in land use local topographic effects etc are simply sidestepped.

4. Perhaps most importantly – what we really need to measure is the enthalpy of the system – the land measurements do not capture this aspect because the relative humidity at the time of temperature measurement is ignored. In water the temperature changes are a good measure of relative enthalpy changes.

5. It is very clear that the most direct means to short term and decadal length predictions is through the study of the interactions of the atmospheric systems, ocean currents and temperature regimes (PDO, ENSO, SOI, AMO, AO, etc.), and the SST is a major measure of these systems. Certainly the SST data has its own problems but these are much less than those of the land data.

What does the SST data show? The 5 year moving SST temperature average shows that the warming trend peaked in 2003, and a simple regression analysis shows an eight year global SST cooling trend since then. The data shows warming from 1900 – 1940, cooling from 1940 to about 1975 and warming from 1975 – 2003. CO2 levels rose monotonically during this entire period. There has been no net warming since 1997: 14 years with CO2 up 7% and no net warming. Anthropogenic CO2 has some effect but our knowledge of the natural drivers is still so poor that we cannot accurately estimate what the anthropogenic CO2 contribution is. Since 2003 CO2 has risen further and yet the global temperature trend is negative. This is obviously a short term on which to base predictions, but all statistical analyses of particular time series must be interpreted in conjunction with other ongoing events. In the context of declining solar magnetic field strength and activity, to the extent of a possible Dalton or Maunder minimum, and the negative phase of the Pacific Decadal Oscillation, a global 20 – 30 year cooling spell is more likely than a warming trend.

It is clear that the IPCC models, on which Al Gore based his entire anti-CO2 scare campaign, have been wrongly framed, and their predictions have failed completely. This paradigm was never well founded, but, in recent years, the entire basis for the Climate and Temperature trends and predictions of dangerous warming in the 2007 IPCC AR4 Summary for Policy Makers has been destroyed. First, this Summary is inconsistent with the AR4 WG1 Science section. It should be noted that the Summary was published before the WG1 report and the editors of the Summary, incredibly, asked the authors of the Science report to make their reports conform to the Summary rather than the other way around. When this was not done the Science section was simply ignored.

I give one egregious example – there are many others. Most of the predicted disasters are based on climate models. Even the modelers themselves say that they do not make predictions. The models produce projections or scenarios which are no more accurate than the assumptions, algorithms and data, often of poor quality, which were put into them. In reality they are no more than expensive drafting tools to produce power point slides to illustrate the ideas and prejudices of their creators. The IPCC science section AR4 WG1 section 8.6.4 deals with the reliability of the climate models. This IPCC science section on models itself concludes:

“Moreover it is not yet clear which tests are critical for constraining the future projections,consequently a set of model metrics that might be used to narrow the range of plausible climate change feedbacks and climate sensitivity has yet to be developed”

What could be clearer? The IPCC itself says that we don’t even know what metrics to put into the models to test their reliability, i.e. we don’t know what future temperatures will be and we can’t yet calculate the climate sensitivity to anthropogenic CO2. This also begs a further question of what mere assumptions went into the “plausible” models to be tested anyway. Nevertheless this statement was ignored by the editors who produced the Summary. Here predictions of disaster were illegitimately given “with high confidence”, in complete contradiction to several sections of the WG1 science section where uncertainties and error bars were discussed.

A key part of the AGW paradigm is that recent warming is unprecedented and can only be explained by anthropogenic CO2. This is the basic message of the iconic “hockey stick”. However, hundreds of published papers show that the Medieval warming period and the Roman climatic optimum were warmer than the present. The infamous “hide the decline” quote from the Climategate emails is so important not so much because of its effect on one graph but because it shows that the entire basis of dendrothermometry is highly suspect. A complete referenced discussion of the issues involved can be found in “The Hockey Stick Illusion – Climategate and the Corruption of Science” by AW Montford.

Temperature reconstructions based on tree ring proxies are a total waste of time and money and cannot be relied on.

There is no evident empirical correlation between CO2 levels and temperature. In all cases CO2 changes follow temperature changes, not vice versa. It has always been clear that the sun is the main climate driver. One new paper, “Empirical Evidence for a Celestial origin of the Climate Oscillations and its implications” by Scafetta from Duke University, casts new light on this. http://www.fel.duke.edu/~scafetta/pdf/scafetta-JSTP2.pdf Humidity and natural CO2 levels are solar feedback effects, not prime drivers. Recent experiments at CERN have shown the possible powerful influence of cosmic rays on clouds and climate.

Solar Cycle 24 will peak in a year or two thus masking the cooling to some extent, but from 2014 on, the cooling trend will become so obvious that the IPCC will be unable to continue ignoring the real world – even now Hansen and Trenberth are desperately seeking ad hoc fixes to locate the missing heat.

Nick, I don’t know if you have access to matlab, but they have a solver that uses the covariance matrix when available:

For general info:

A is the matrix composed of the vectors you’re minimizing with respect to, x is the set of parameters you’re fitting for, and b is the array of measured data you’re fitting to.

I would say use the top 5% and the bottom 5%. You don’t need spatial coverage. Get representative coverage. Whatever thermometers you use, make sure that their breakdown covers deserts, polar areas, shore areas, city areas, farming areas, some different elevations, and continents in proportion to the reality of the globe.

###

I can see you have not worked with the data. desert stations are almost all rural so you cant get a representative urban sample.

same with polar. they are all rural. Shore areas, mostly urban.. go figure we like to live by the ocean.

Like I said when you look at the extremes you lose spatial variety

anyways the top 5% are no different than the top 10%

Next

Tilo.

On CA you said that you were willing to say that UHI was over half

of the .11 C per decade difference between UAH and the ground record

UAH = .18Cdecade

BEST = .28C decade

You agreed with spencer, christy and McIntyre that this .1C

difference might be attributed to UHI and guessed that some of it was due to kriging and over half was UHI

.05C to .1C per decade from 1979to 2010 is not large. Recall

this is 30% of the total.

So are you disagreeing with your self

Eli on de urbanization

I can put that to rest. The number of sites where population dropped from 1950 to today is mouse nuts

I love this speculation

Next

“The second is, has the magnitude of the heat island effect affected temperature anomalies measured in urban areas, and the answer there is no, because urban densities have remained constant over more than a century.”

Eli is dead wrong about this when it comes to stations.

Lets look at a good example.

The largest collection of daily min/max sites is GHCN Daily

there are 26000 stations that report tmax and tmin on a daily

basis.

If we use a BEST like strategy to divide them into very rural and

Not very rural, we end up with the following for historical population density.

These are the figures for the Very rural by Best rules

1950 5.9 persons per sq km

1960 6.9

1970 7.7

1980 8.7

1990 9.7

2000 10.6

Not very rural by Best rules

1950 230 people per sq km

1960 281

1970 335

1980 387

1990 444

2000 502

Of the sites that are classified as Not very rural 3% de urbanized

that is 3% had less population in 2000 than in 1950

Next Myth.
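To put the two density series above side by side, the 1950 to 2000 change can be computed directly from the numbers quoted. This is just a sketch: the dictionary layout and names are my own, and only the values listed in the comment are used.

```python
# Population density (persons per sq km) as listed in the comment above.
density = {
    "very rural": {1950: 5.9, 1960: 6.9, 1970: 7.7, 1980: 8.7, 1990: 9.7, 2000: 10.6},
    "not very rural": {1950: 230, 1960: 281, 1970: 335, 1980: 387, 1990: 444, 2000: 502},
}


def percent_change(series, start=1950, end=2000):
    """Percentage change in density between two listed years."""
    return (series[end] / series[start] - 1.0) * 100.0


growth = {name: percent_change(series) for name, series in density.items()}

for name, pct in growth.items():
    print(f"{name}: {pct:.1f}% increase, 1950 to 2000")
```

Both classes show density rising in every decade listed, which is the point being made against the "constant density" claim.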

Carrick, #85300

I don’t have access to Matlab. But it sounds like the scale factor fitting version that I mentioned:

“A common method seems to be to guess the structure and fit a scale factor.”

You have to supply V (and make sure it is invertible).

Zeke, FYI:

Here’s a comparison of best fits, weighted versus unweighted on BEST.

The outliers in BEST seem to have a larger associated uncertainty, which downweights them in the weighted LSF, resulting in a steeper slope. (It’s my impression that the typical effect of the generalized LSF discussed by Nick is to widen the uncertainties, not dramatically shift the computed trends, so using that won’t rescue BEST.)

0.344 °C/decade is really not a very consistent result compared with the other series. For the “results based” group, let’s have them circle the wagons and explain why you can’t use weighted least squares fit in climate science. That’s already happened a bit on Nick’s thread.
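The mechanism described above (a high-uncertainty outlier being downweighted, which changes the slope) can be seen in a small synthetic example. This is a sketch in NumPy, not the MATLAB `lscov` call and not the BEST series: the data, the single outlier and its uncertainty are invented, and the helper `weighted_lstsq` is a hypothetical name. It follows the `A`, `x`, `b` notation used earlier in the thread.

```python
import numpy as np


def weighted_lstsq(A, b, sigma):
    """Least squares with per-point uncertainties sigma (weights 1/sigma**2).

    Each row of A and each entry of b is divided by its sigma, then an
    ordinary least-squares solve is applied.
    """
    w = 1.0 / np.asarray(sigma, dtype=float)
    x, *_ = np.linalg.lstsq(A * w[:, None], b * w, rcond=None)
    return x


# Synthetic series: intercept 1.0, slope 0.5, one warm outlier at the start.
t = np.arange(10, dtype=float)
A = np.column_stack([np.ones_like(t), t])
b = 1.0 + 0.5 * t
b[0] += 5.0

# The outlier carries a much larger stated uncertainty than the rest.
sigma = np.ones_like(t)
sigma[0] = 100.0

x_unweighted = weighted_lstsq(A, b, np.ones_like(t))
x_weighted = weighted_lstsq(A, b, sigma)

print("unweighted slope:", x_unweighted[1])
print("weighted slope:  ", x_weighted[1])
```

Here the early warm outlier flattens the unweighted fit, and downweighting it steepens the slope back toward the underlying 0.5.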

Zeke (Comment #85273)

November 5th, 2011 at 4:49 pm

Interestingly enough, one can quite easily think of a case where there is a very large absolute UHI with no (or slightly negative) UHI trend.

#####

in fact when looking at UHI in Portland the number 1 regressor for predicting UHI was …. Canopy

Canopy created negative UHI about -2 to 4C

The second most significant/important regressor was building height

if you look around you can find this not behind the paywall

http://www.springerlink.com/content/2614272141303766/

http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=4&sqi=2&ved=0CDAQFjAD&url=http%3A%2F%2Fams.confex.com%2Fams%2Fpdfpapers%2F127284.pdf&ei=fh22Tur9C4O0iQLYzbBm&usg=AFQjCNFHZMbRpg7qAF_eFfy__Vi_2F1YJg&sig2=rNvk32SgKVaeUVFW17hCJw

You can see the -4C urban cool parks here

http://www.epa.gov/statelocalclimate/documents/pdf/sailor_presentation_heat_islands_5-10-2007.pdf

If you live in brussels you can see the UHI for your house.

http://geowebgis.irisnet.be/BXLHEAT/mapviewer.jsf?langue=NL

About 50% of the temperature change at Uccles from 1833 to today has been attributed to UHI.

Nick, I think it’s basically what the WIKI calls feasible generalized least squares. There’s an option where you just give a vector of the weightings, which get used as a diagonal matrix as you described above.

Here’s a link to the full documentation.

Unfortunately lscov is missing from octave still.

At least it now supports classes. I haven’t tested any of my class-based code to see how well it works though.

http://journals.ametsoc.org/doi/pdf/10.1175/JCLI3431.1

FIG. 6. UHI analysis where the station rural (urban) classification was based on whether it was below (above) a given population threshold. The first statistically significant UHI is detected at

14 000 people within 6 km. This classified 42% of the stations as

urban. The peak value at 30 000 classifies 30% as urban. And the

final statistically significant threshold at 60 000 within 6-km radius

classifies only 18% of the stations as urban

It should not be surprising from the scatter in Fig. 5a that the correlation r of

temperature anomaly from the dataset having the

strongest UHI signal and population anomaly at the

radius with the strongest urban temperature signal is

only 0.17, indicating that the population anomaly only

explains 3% of the variance in temperature anomalies.

The results shown in Figs. 7a and 7b suggest that

removing the 30% of the most populated stations from

an UHI analysis essentially removes the UHI signal

from U.S. data. As stated earlier, the 30% most urban

in this analysis refers to stations with 30 000 or more

people within 6 km. The U.S. HCN (Easterling et al.

1996) is the most widely used in situ network for long-term temperature change analyses in the United States.

Figure 8 shows the percent of U.S. HCN stations with

populations in excess of various thresholds within 6 km

calculated from the 1 km gridded population data for

2000 rather than 1990 as used previously. It turns out

that 16% of the U.S. HCN stations are at or above

the 30 000 population within the 6-km threshold

######################

Interesting.

It is clear from recent discussions that UHI, or more properly the disturbance of stations by urbanization, can be estimated at about 1 °C per century since 1979 on the basis of the BEST minus UAH difference. In fact, it would be better to make comparisons considering only land in the northern hemisphere to avoid the problem of the Antarctic; in this case, we still get at least 1 °C per century, but based on CRUTEM minus UAH.

What was the evolution of perturbations before 1979?

To get an idea, one can imagine that most of these disturbances are caused by energy expenditure and drainage of urban surfaces. We can now estimate the shape of the evolution of perturbations from the increase in energy consumption:

http://1.bp.blogspot.com/_6VdeaIXri4s/TNnRB-6iUiI/AAAAAAAANLc/_qsfsdDiB9I/s1600/energy%2Bconsumption%2Bsince%2B1850.PNG

“I can see you have not worked with the data. desert stations are almost all rural so you cant get a representative urban sample.”

Try Phoenix, Tucson, Albuquerque, El Paso.

“Shore areas, mostly urban”

Ever driven up California coastal highway 1? Ever been to the shores of Australia?

“So are you disagreeing with your self”

Still up to your old tricks of blabbing without reading. It’s the second time you’ve asked the question and I already gave you the answer after the first time. The .1C refers to the “PER DECADE” trend since 79. The “greater than .54C” refers to the entire error in the instrument record.

Tilo

.1Cper decade for the period 1979 to 2010

you said over half

you wanted to reserve some of this for your kriging delusion

last time I looked 1979 to 2010 was 3 decades

3* .1 = .3

But then you got no kriging delusion

3 * .05 = .15

The mistake you are making is extrapolating the 1979 to 2010 stuff

backwards before 1979.

and we know what you think of extrapolation.

1. When I speak of desert stations I am speaking of ALL desert stations, so when I say almost all, I mean that idiots can of course find some. thank you.

2. when I say mostly urban I mean what I say. Yes, idiots can find rural coastal sites. thank you. Some of us can actually compare the rural coastal stations with the urban coastal stations.. and look at desert coastal stations both rural and urban

Next.

Suggest a rural coastal station. Any one. I will use the characteristics of that rural station to DEFINE rural. that is, I will use your definition of rural. Pick one. any one. as your prime example

and remember. if you want both UHI and kriging error they have to sum to .1

and remember there are three decades from 1979 to 2010.

no extrapolating allowed

Mosher:

From the article that you linked.

“An additional analysis was performed using what should be

only the “most rural” stations and the “most urban” stations defined by the station being classified as rural or urban by both metadata sets. Interestingly, using only the most rural and most urban stations, as shown in Table 1, the mean urban rural temperature difference was fairly large but no longer statistically significant, perhaps because of the smaller sample size and

variability.”

So basically, they found that using the most rural and most urban stations gives you a large temperature difference – but they won’t tell you what the difference was. Another case of hiding the data by an AGW team that never wanted to know the truth in the first place. Instead, they try to blow it off by claiming it was not “statistically significant”. Just like they blow off the UHI changes above 60,000 and below 10,000 by claiming they are not statistically significant. So their interpretation doesn’t say we didn’t find the UHI. It means they found it but because they couldn’t classify it as statistically significant it meant they could pretend that it didn’t exist at all. And their interpretation of statistically significant wasn’t necessarily related to the magnitude of the UHI, but rather to other factors like quantity of stations and coverage. So, from figure 6, you get between .2C and .3C of UHI effect at populations over 60,000 that is blown off because it doesn’t qualify as statistically significant. And they couldn’t be bothered to get more stations to make it significant.

Really, this study was BS.

In any case, this is an old study that has been overcome by better work from Zhang, Imhoff and Spencer.

Tilo,

Will you please post links to papers that show what you are asserting? Because, to date, you haven’t provided us with anything.

The question of whether UHI contaminates global trends has been answered by the literature and the answer is: very little. If “skeptics” don’t like that answer, it is up to them to show that it is false. It is up to them to put up their time and money to show this. They have spent decades complaining, and they now have all the information required to do this. They need to put up or shut up, rather than continue this game of linking youtube videos and blog posts and papers about UHI while claiming they prove things that they do not address. If multiple analyses show that UHI contamination doesn’t substantially affect global trends, they are not immediately wrong because you don’t want to believe it.

“.1C per decade for the period 1979 to 2010”

you said over half

Yes, Mosher, I said over half. I only used the .1C as a simplified reference because you used it that way. What I said was over half of .11C. That means from .055 to .11C per decade. But likely not the full .11C because of the statistical extrapolation errors. Good lord, Mosher, are you trying to be dense on purpose?

“last time I looked 1979 to 2010 was 3 decades”

That’s right, and the rate for that 3-decade period was given as between .055 and .11C PER DECADE. That means roughly between .165 and .33 C for that period. Is that spelled out clearly enough for you? And likely somewhat less than .33C because of the statistical extrapolation errors. A five year old could figure out what I mean. Are you trying to be contradictory and petty because you’ve got nothing? Are you purposely looking for a way to misinterpret what I said just so that you can jump up and down yelling, “I gotcha”?
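
The conversion from a per-decade rate to a total over the period is simple enough to write out. A minimal sketch, using only the .055 to .11C per decade range and the three decades quoted above (the function name is just for illustration):

```python
# Convert a per-decade trend into a total change over a period.
# The 0.055-0.11 C/decade range and the 3-decade span are the figures
# quoted in the comment; total_change is an illustrative helper name.
def total_change(rate_per_decade, decades):
    return rate_per_decade * decades

decades = 3  # 1979 to 2010, treated as roughly three decades
low = total_change(0.055, decades)
high = total_change(0.11, decades)

print(f"{low:.3f} C to {high:.2f} C over the period")
```

This is the upper and lower bound only; it says nothing about how much lower the real figure would be once the extrapolation errors are allowed for.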

“1. When I speak of desert stations I am speaking of ALL desert stations, so when I say almost all, I mean that I can of course find some. thank you.”

And as I clearly explained in the very example that you were addressing, you don’t need all the desert stations, only a representative sample.

In any case, you have again returned to babbling like a confused lunatic. So get lost, you have again exhausted my patience.

cce: “Will you please post links to papers that show what you are asserting? Because, to date, you haven’t provided us with anything.”

I posted them above. If you don’t understand my argument then don’t waste my time. If you do understand my argument, then make your objections directly to those points.

“The question of whether UHI contaminates global trends has been answered by the literature and the answer is: very little. ”

It’s been answered in more recent literature, and the answer is: a lot.

“They have spent decades complaining,”

Blah, blah, blah.

Tilo,

I haven’t been able to find this Spencer study that you keep referencing. Perhaps you are mistakenly conflating a blog post on absolute UHI differences with some publication on trend effects?

Zeke:

“I haven’t been able to find this Spencer study that you keep referencing.”

–

With regard to Spencer, I’m talking about these charts.

–

http://www.drroyspencer.com/wp-content/uploads/ISH-UHI-warming-global-and-US-non-US.jpg

–

And this study.

–

http://www.drroyspencer.com/2010/03/the-global-average-urban-heat-island-effect-in-2000-estimated-from-station-temperatures-and-population-density-data/

–

You can see the abstract from the Imhoff paper here.

–

http://pubs.casi.ca/doi/abs/10.5589/m10-039

–

And the NASA article is here:

–

http://www.nasa.gov/topics/earth/features/heat-island-sprawl.html

Tilo:

.

OK, allow me to summarise:

.

1- You absolutely fail at reading. They do tell you what the difference is. As indicated in the goddamn paragraph that you quote, it’s in Table 1, just under the numbers in bold. Let me spell them out for you: .22 for the “adjusted” data and .15 for the “modified adjusted” data.

.

2- You confuse effects of UHI on absolute temperatures (which is the subject of that passage) and on trends (which is what people are talking about).

.

3- You don’t understand what statistical significance means. Which may explain why you like Judith’s blog so much.

.

4- You get rude when people suggest that maybe, just maybe, the burden of proving that everybody else has completely missed an obvious UHI effects on trend falls on you, not on the rest of the world.

.

Can you see why people don’t take you seriously?

Tilo,

Please provide quotes from your oft-pasted links that quantify the portion of warming in the global land record that is attributable to urbanization.

Also, I understand your argument just fine. You believe that because the UHI effect exists, any study that shows minimal contamination of the global temperature record from urban sites is wrong and the authors are idiots. You prove this assertion by repeating it.

Tilo,

Those charts from Spencer’s blog post refer to absolute temperature differences, not trend differences. The two really aren’t comparable.

toto:

“You confuse effects of UHI on absolute temperatures (which is the subject of that passage) and on trends (which is what people are talking about).”

You can’t get to a large absolute UHI effect without having gone through a trend to get there.

“You don’t understand what statistical significance means.”

I understand perfectly well what statistical significance means. Apparently you don’t. The values that they have show large UHI. The fact that they are not statistically significant is not the same as showing that the UHI doesn’t exist. In their own words.

“Interestingly, using only the most rural and most urban stations, as shown in Table 1, the mean urban rural temperature difference was fairly large but no longer statistically significant, perhaps because of the smaller sample size and variability.”

Obviously if the sample size is too small and the variability too large, then the right answer is to get a bigger sample size, not to blow off the result as non-existent UHI.

“You get rude when people suggest that maybe, just maybe, the burden of proving that everybody else has completely missed an obvious UHI effects on trend falls on you, not on the rest of the world.”

Everybody else hasn’t missed the UHI effect. It’s been documented again, and again, and again, as is shown in the links that I gave you. The burden of proof lies with those that would seek to deny both that which is obvious and that which has been documented.

Zeke:

“Those charts from Spencer’s blog post refer to absolute temperature differences, not trend differences. The two really aren’t comparable.”

That is absurd, Zeke. When you have the huge absolute differences that are currently measured, you didn’t get to that point by way of zero trend differences. Think about what you are saying. In fact, it’s you who are confused about UHI. If you have a rural UHI trend and a city UHI trend and then you difference the two and find no difference, then you cannot conclude that there is no UHI. It only means that both trends have been made more positive by UHI. And if you read Spencer’s study it tells you this:

“For instance, a population increase from 0 to 20 people per sq. km gives a warming of +0.22 deg C, but for a densely populated location having 1,000 people per sq. km, it takes an additional 1,500 people (to 2,500 people per sq. km) to get the same 0.22 deg. C warming.”

This is where you, Mosher, and others get completely confused. When you are trying to answer the question, “How much of the warming we see in the temperature record is UHI”, that is not the same as, “What is the difference in trend between rural and urban stations”. The questions are not necessarily related. Just because the name “urban” is in UHI doesn’t mean anything. What you are trying to determine is the contribution of man-made structures to the temperature record. Throwing stations into two bins and finding their difference fails to do that.

Tilo,

Oddly enough, most currently urban stations were not originally built in pristine rural areas.

Zeke:

“Oddly enough, most currently urban stations were not originally built in pristine rural areas.”

This is true. But even for the ones that began life in an urban environment, the degree of urbanity has continued to increase (Mosher gives you some numbers at 85304). Also, if you put a thermometer into a city in 1950, it will not show you the UHI that was accumulated before 1950. However, the total UHI change that occurred from 1850 (if that is where your reconstruction starts) to 1950 is still registering on that thermometer – even though you cannot see it as a change on that thermometer alone.

I’m going to put the argument together from all the pieces, for cce’s benefit.

Let’s start with the results from Imhoff’s paper.

“Globally averaged, the daytime UHI amplitude for all settlements is 2.6 °C in summer and 1.4 °C in winter. Globally, the average summer daytime UHI is 4.7 °C for settlements larger than 500 km2 compared with 2.5 °C for settlements smaller than 50 km2 and larger than 10 km2.”

This tells us that UHI contamination for all “settlements” is (2.6C summer + 1.4C winter) / 2 = 2.0C. So, what qualifies as a settlement? At the end of that quote he says:

“for settlements smaller than 50 km2 and larger than 10 km2.”

So we know that a settlement would have to be larger than 10 km2. What does that mean in terms of population? Zhang gives the example of Lynchburg VA, which has a population of 70,000 and an area of 29 km2. So if Lynchburg has 70,000 in 29 km2, then I’m going to assume that Imhoff’s 10 km2 will have less than 50,000 people. And I’m giving away quite a bit of slop here.
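
To make the Lynchburg scaling explicit, here is a rough sketch. It assumes population scales linearly with area at Lynchburg’s average density, which is a simplifying assumption for illustration and not something taken from Zhang or Imhoff; the variable names are mine.

```python
# Scale Lynchburg's average density down to a 10 km2 settlement.
# ASSUMPTION: uniform density, i.e. population is proportional to area.
lynchburg_population = 70_000
lynchburg_area_km2 = 29
min_settlement_area_km2 = 10  # lower size bound in the Imhoff quote

density_per_km2 = lynchburg_population / lynchburg_area_km2
implied_population = density_per_km2 * min_settlement_area_km2

print(f"density: {density_per_km2:.0f} people per km2")
print(f"implied population at 10 km2: {implied_population:.0f}")
```

On that assumption the smallest settlement comes out at roughly 24,000 people, comfortably under the 50,000 figure used here.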

Now, turning to the BEST UHI study, they say this:

“Urban areas are heavily overrepresented in the siting of temperature stations: less than 1% of the globe is urban but 27% of the Global Historical Climatology Network Monthly (GHCN-M) stations are located in cities with a population greater than 50,000.”

From this we can conclude that Imhoff’s settlements have, at a minimum, 27% of the GHCN thermometers. Let’s say that all the rest of the thermometers have no UHI effect. As Spencer’s study shows, this is not going to be the case, since the effect starts at very low population densities. But I’m again giving away the slop here. So, if that 27% has 2C of UHI contamination, then that averages out to .54C of UHI contamination for every thermometer globally – as a minimum. Considering Spencer’s study about low density UHI effect, and the fact that Imhoff’s paper likely meant more than 27% of all stations, that number could be as high as 1C, in my mind.
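
The averaging step can be written out as a short sketch. It only reproduces the arithmetic under the assumptions stated in this comment (the 27% of stations carry the full seasonal-mean amplitude from the Imhoff quote, all other stations carry none); the variable names are illustrative.

```python
# Station-weighted average of an assumed UHI offset.
# ASSUMPTIONS (from the comment, not from the cited papers):
#   - urban stations carry the mean of the summer and winter amplitudes
#   - all remaining stations carry zero UHI
summer_uhi_c = 2.6
winter_uhi_c = 1.4
urban_station_fraction = 0.27  # GHCN-M stations in cities over 50,000

mean_urban_uhi_c = (summer_uhi_c + winter_uhi_c) / 2
rural_uhi_c = 0.0

average_uhi_c = (urban_station_fraction * mean_urban_uhi_c
                 + (1 - urban_station_fraction) * rural_uhi_c)

print(f"seasonal mean: {mean_urban_uhi_c:.1f} C")
print(f"average over all stations: {average_uhi_c:.2f} C")
```

Changing `urban_station_fraction` or `rural_uhi_c` shows how sensitive the .54C figure is to those two inputs.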

Now, in 1850, when many of these reconstructions start, the world population was 1.2 billion. Today it is 7 billion. The fact that there was already some UHI in 1850 means that some of that effect, which is meant to show global temperature change for the study period, will have to be given back. But, regardless, the answer remains that the contamination of the temperature record is very large.

There’s a fairly obvious check on all this, since population growth is a well known quantity: check to see if the growth (if any) of the UHI is actually related to increased urbanization.

For example, many large central cities in the US have seen little population growth (and some declines) since 1960. Thermometers in those central cities should be unbiased for detecting AGW during any such period of roughly static population.

And I’d be surprised if there are not similar cases of small towns having roughly static populations over long time periods too.

I’m betting it’s not the cities that are the problem, and not the Podunks. It’s the suburbs where growth is fastest. Should be easy to test, and easy to filter out if found.
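
A rough sketch of what such a test might look like: split stations by how much their local population changed, then compare the mean temperature trend of each group. The function name, the 5% threshold and the example tuples are all hypothetical placeholders, not real station data.

```python
from statistics import mean

def mean_trend_by_growth(stations, static_threshold=0.05):
    """Average the trend of static-population and changing-population stations.

    stations: iterable of (pop_start, pop_end, trend_c_per_decade).
    A station counts as static if its fractional population change is
    within +/- static_threshold (a hypothetical cutoff).
    """
    static, changing = [], []
    for pop_start, pop_end, trend in stations:
        growth = (pop_end - pop_start) / pop_start
        if abs(growth) <= static_threshold:
            static.append(trend)
        else:
            changing.append(trend)
    return (mean(static) if static else None,
            mean(changing) if changing else None)

# Placeholder values for illustration only, NOT real measurements.
example = [
    (100_000, 101_000, 0.20),  # central city, roughly static
    (50_000, 49_000, 0.18),    # small decline, still counted as static
    (10_000, 30_000, 0.25),    # fast-growing suburb
    (5_000, 12_000, 0.27),     # fast-growing suburb
]
static_mean, changing_mean = mean_trend_by_growth(example)
print(static_mean, changing_mean)
```

With real station metadata, a gap between the two means would point at the fast-growing locations; no gap would suggest growth is not what drives the trend.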

This is a fruitless and often silly discussion that bounces from blog to blog. I find this dumb dismissal of the UHI issue particularly amusing: “It’s absolutely amazing to me to see “skeptics” perform their game of obfuscation by conflating the UHI effect which is well known and undeniable, with the faith-based expectation of dramatically higher warming due to UHI.” This character is no doubt convinced by the BEST result, that although it is not statistically consistent with previous estimates on UHI, it nonetheless confirms that UHI is not a biggy, because the BEST result was negative. Priceless.

The reason they can’t find the “undeniable” UHI effect is because the thermometers are almost totally absent from areas that are not influenced by human habitation. They are comparing inhabited areas, with inhabited areas, and the rates of growth are not that dissimilar. See Mosher’s post above #85304.

This is where the thermometers are:

http://journals.ametsoc.org/doi/abs/10.1175/1087-3562%282004%29008%3C0001%3AGPDAUL%3E2.0.CO%3B2