PeteB was trying to puzzle out why I am saying the multi-model mean is outside uncertainty intervals of any ‘best’ ARIMA(p,0,q) model with p<5 q<5 even though last year, Tamino said it was inside the uncertainty intervals for ARIMA(1,0,1). Specifically he asked:

luciaI was just interested in how noisy these relatively short periods are

When I look at here from Tamino’s (albeit a year ago) using ARMA(1,1)

Looking at start year 2000 and estimating where it hits the dotted lines it would seem to suggest the underlying trend from 2000 could be anywhere between +0.025 C/year to – 0.010 C/year (which I guess would probably include the MMM) but your analysis above seems to contradict that – is that because you are using a different model of noise ? or an extra years data ? or am I missing something ?

I gave a long answer based on some things Tamino has done in the past. To better answer his question I also requested Pete B give a link to the article itself: That post comments on Goddard’s discussion of a GISSTemp trend since 2002.

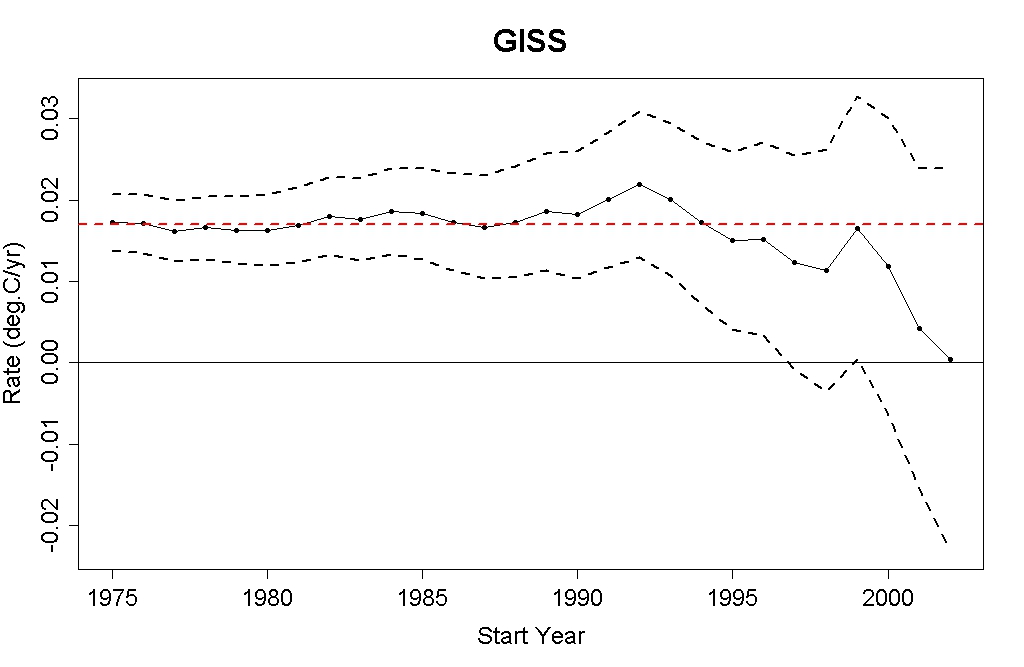

In his article, Tamino explains his method of making the graph shown above as follows:

Let’s consider the trend up to the present (through June 2011) from all possible starting years 1975 through 2002. I’ll use GISS monthly temperature data, and I’ll treat the noise as ARMA(1,1) noise when estimating the uncertainty in the trend. That will enable me to compute what’s called a “95% confidence interval,†which is the range in which we expect the true trend to be, with about 95% probability.

To show PeteB what we get using data I did this for a start years from Jan 1975 through Jan 2002:

Took the GISTemp time series from January of the start year through August 2012. Used “arima” in R to obtain the best trend under the assumption that the data consisted of a linear trend + ARMA(1,1) ‘noise’. Plotted the best trend with black circles and the trend ± the arima estimate for the 2σ uncertainty in the trend.

The trend and the estimated uncertainty intervals obtained are shown below:

(Update:I couldn’t help myself. I added to the graph. 1:43 pm.)

I added a trace to indicate the nominal value of 0.2C/dec projected by the IPCC. That nominal trend is close to what one gets for the multi-model mean computed either from 1980-to now or 2000-now. I have omitted a 0 C/dec trace and also omitted Tamino’s red trace at 0.17C/dec trace. I’m sure a number of people will use the eyeball method to figure out where it is … or just run the script. (Link provided below.)

I’m not certain I did exactly what Tamino did. But this is what I get if I do what his text appears to explain. (He’s done somewhat different -shall we call them “tweaks”?- in the past. But I’ll refrain from commenting on those unless he repeated them in the post commenting on Goddard.)

I’d also note: I suspect if I were to run Monte Carlo cases for ARIMA(1,0,1) cases this method slightly under-estimates the uncertainty intervals. Note that I am not using this method in my comparison post. the uncertainty intervals I have been showing are nearly always wider than those computed using ARMA(1,0,1). But I’m under the impression the method itself is standard enough provided one is confident the ‘noise’ is ARMA11.

Here’s the R script: Taminos_Goddard_ARMA11

Update:Arthur Smith asked why I didn’t end in 2003 when recreating Tamino’s graph that ended in 2002. Ending in 2003 would make the final trend almost 10 years long which is as long as the final trend on Tamino’s graph was when he created it in 2011. Here is with the final trend computed starting in 2003:

What we now see is:

- The final 3 points show the observed trend inconsistent with warming at a rate of 0.2C/decade. In my previous graphs only the final 2 points show that. So adding this third point makes the model’s ability to forecast look worse that it looked without the extra point on the graph.

- The final two points show a computed best fit trend that is negative. Previously only one point showed a negative trend. So, adding the 10 year trend shows that the choice of 2002 may not be quite as bad a cherry pick as Tamino suggested. (I do think it was a cherry pick to some extent– but you get a negative trend with 2003 too.)

Update Nov. 26 Arthur wants to discuss trends up to starting in 2008.

BTW: I can’t help but chuckle at this

The upper open circles sure do dip below the horizontal dashed red line now!

May I ask if this his a true statement, statistically speaking

“There has been no significantly significant upward trend in the past 10 years”

As to me it looks like 2002 is zero.

DocMartyn, significant^2 ?

Doc–

If we use ARMA 1,1, if you limit trend computations periods starting in January and use GISTemp, then you can say that there has been no statistically significant upward trend since 1995. You determine “statistically significant warming” by comparing the lower circles to the dashed line indicating ‘0C/d’. Warming is statistically significant when the lower circles are above 0C/dec.

Bear in mind: I don’t think “no statistically significant warming” means much. It’s a “fail to reject” statement. “Fail to reject” will always happen if the time period is sufficiently short.

(The difficulty is that those defending models want to turn this upside down and complain that we shouldn’t take “Reject” seriously because of short time periods. That’s not right. If we get a ‘reject– as we are for 0.2C/decade right now, the “too short time period” is not a valid criticism. Valid criticism exist but that one is not one of them.)

I know Tamino stopped at 2002 – but that was over a year ago. Why did you stop at 2002?

Arthur– PeteB asked me to compare what I got to what Tamino did so I used the years Tamino used to make the comparison. That’s pretty much it. Pete B did want to know how things changed for a start year of 2000 specifically. I’d been showing that in the previous post. Had Tamino’s post not included that I would have added that to address his question. But — basically PeteB wanted to know how that specific graph of Tamino’s would change with the addition of another years data and that’s what I showed.

I assume Tamino ended in 2002 because that’s the start year Goddard used in his post. So that seems to be the original basis for ending the analysis in 2002 and it seems to be given the narrative about commenting on Goddard’s choice, that’s an appropriate choice.

notes the arrival of a climate attack blogger – someone who reviewed Mann’s book before it was published

diogenens–

Who reviewed what book and your suspicions of when they might have reviewed it are not relevant to this post.

you are right, Lucia but it is still interesting to check the arrivals of the “attack dogs”. I smile when I note that a book that was published on March 6, 2012, was reviewed by Stephan Lewandowsky on January 29 and by Arthur P Smith on February 1.

diogenes– Arthur has been commenting here since 2008. Also, your analysis is overlooking the possibility that Arthur was sent a copy by the author prior to official publication. That happens. It’s not even uncommon.

I would imagine that if Arthur received an advance copy that he would indicate this in his review as required by Amazon rules.

lucia, I think you misinterpreted diogenes’s point. My impression wasn’t that he is “overlooking [that] possibility,” but rather, making a point based on it. Copies of MIchael Mann’s book were distributed early to a number of individuals to get a bunch of glowing reviews right off the back. It was a PR move (coordinated in part by John Cook). Someone being involved in a move like that could be taken to indicate things about them.

In the same way, Arthur Smith may have been commenting here for years, but he doesn’t comment on that many posts. The fact he posted on this one, one sort of critical of Tamino, within hours of it being posted, could be taken to indicate things about him.

Or at least, that’s how I interpreted diogenes’s remark.

To add to Steven Mosher’s remark, Amazon’s rules for reviews say:

There are a number of reviews posted to Amazon from people given a free copy specifically so they’d write good reviews. To my knowledge, not a one disclosed that they had received a free copy, much less that they were given a free copy so they’d write a positive review.

diogenese,

Early copies of a book (or even journal article) almost always will be sent to those expected to give glowing reviews. Mann sure wasn’t going to send Steve McIntyre an early copy of his book. 🙂

.

Like lots of people, Arthur Smith seems to comment selectively on subjects which interest him. The general category in this case is ‘any post which suggests or implies low climate sensitivity’. ‘Attack dog’ seems more than a bit unfair.

SteveF, if all one was going off of is what topics Arthur Smith finds worth discussing, I’d agree it’d be unfair to consider him an “attack dog.” However, he is an active participant in the “blogosphere,” so there is a lot of material to draw from.

I won’t go into details as I don’t think anyone wants that, but my experience with him is he doesn’t post enough to be considered an “attack dog,” but otherwise, the description fits well. I have seen him make many unsubstantiated attacks against people, often based upon what appear to be misrepresentations and/or willful delusions.

Coordinated attack dogs or not, if they ask a good question then why not? If they start posting links that SkepticalScience pseudo-science then its time to complain.

Brandon– Maybe I misunderstood. But I don’t want this thread to be a discussion of reviews of Mann’s book at Amazon.com or whether people might or might not be “attack dogs”. Arthur’s question was on topic.

Fair enough.

So i’m just curious – how many of the models have correct aerosol forcing values for the 2000s or are they just set to zero (or assuming a decrease rather than increase)? It’s a fun game to play where we all look at the multi-model means and use the CIs to determine the accuracy but somehow I get the feeling that all models are not created equal…

thanks lucia – very interesting looks like we are getting to the point where the reduction in warming trend is becoming statistically significant

Lucia, what trend would be required whereby one could reasonably state that there has been no statistically significant warming for 17 years?

I only ask because Ben Santer said he’d become a sceptic if that happened (note: I may have exaggerated this a bit). 🙂

cui–

Do you have a quote? Did he say no statistically significant warming? Or that he’d be a skeptic if the trend wasn’t above 0?

Also– which noise model? In his paper on tropospheric trends he used AR1. That makes narrower uncertainty intervals. Consistency being what it is….. those seem to be “allowed” if one is proving warming, but “not allowed” if one is showing models are off. (Because using that, the models are waaaaayyyyy off and have been consistently for some time now.)

I have good guess on what Tamino did, I am close to replicating his graph, take a look:

http://i46.tinypic.com/1ovbbq.jpg

I have limited the data to 1975 to June 2011 to match the same data as Tamino. I calculate the trends from January every start year to June 2011. To estimate the arma(1,1) uncertainty intervals I use the method outlined by Tamino ins his “Alphabet soup part3a + 3b” and “Hurst” posts. I estimate the arma parameters over the whole period 1975-2011, and then use Neff = N/v, where v= 1+2*rho[1]/(1-phi), rho[1] is lag 1 autocorrelation and phi is the AR coefficient. Replacing N with Neff can then be obtained by multiplying the OLS errors with sqrt(v). Check the code from Foster&Rahmstorf to see this implemented.

With this approach one cannot reject the AR4 projections.

SRJ–

I thought he might have done that.

If so, his results rely on cherry picking a data to create a “noise model” with in appropriately wide uncertainty intervals. That is: He is specifically picking a period when major volcanoes erupted. During that period, the models themselves say the mean (not noise) is non-linear.

But his method treats deviations from linear by the mean as noise and throws that into his estimate of the noise and it’s correlogram. The consequence of this is to widen error bars above the level that is appropriate if you goal is to estimate the variability of trends over repeat samples of the same period.

This matters because what those uncertainty intervals are supposed to be is an estimate of the variability of trends over repeat samples of periods with similar forcing. They are not– for examples– supposed to be an estimate of the range of trends we might have gotten had Pinatubo or some other volcano had a stratospheric eruption at some time between 2000-now.

The general principle that you should only include “the noise” when estimating the properties of “the noise” must be well understood in statistics. It is well understood in other fields where statistics is used and applied.

But anyway: If tamino’s error bars are what you say they are, then his decribe the amount of variability that one estimate if we were forced to pretend we had no idea whether volcanic eruptions went off when they did and/or had no idea that those eruptions do affect the temperature trends. In such a case, the variability due to Pinatobu, El Chicho etc. would by a mistery to use and we would have to decide that “for all we know” the “same” thing could have happened during any period at the same rate it happened during the full 1975-now period.

But… in reality, we know the volcanic eruptions have a major effect and we know when they went off. So computing the uncertainty intervals during periods when we know these major eruptions did not occur using uncertainty intervals based on the assumption that we have no idea whether they occurred or not is called “cheating”. Fishing out a time period where one knows the occurred to estimate “universal” estimates of the time series properties is called “cherry picking”. If Tamino did as you said, he did both.

My bad, I think, but I’d appreciate your comments.

He was talking about lower troposphere (TLT).

“Because of the pronounced effect of interannual noise on decadal trends, a multi-model ensemble of anthropogenically-forced simulations displays many 10-year periods with little warming. A single decade of observational TLT data is therefore inadequate for identifying a slowly evolving anthropogenic warming signal. Our results show that temperature records of at least 17 years in length are required for identifying human effects on global-mean tropospheric temperature.”

I’ll even link to the fount of all wisdom:

http://www.skepticalscience.com/print.php?n=1333

cui–

In that paper, Santer used AR1. That will give tighter error bars– and we would reject the multi-model mean for surface temperature using those and would have at the time that paper was published. Those testing models then seem to have “discovered” the inadequacy of AR1. Nevertheless, I’m sure that if one shows that the trend is not statistically singificant using the wider ARMA11 error bars, he would just say you should use the tighter AR1 ones– for testing the “no warming” model. (But they would remain inadequate for saying the models are off. 🙂 )

(Yes. I am being snide. But collectively, this is happening.)

Lucia,

“cheating” “cherry picking”

” If Tamino did as you said, he did both.”

Now why would a smart guy like Grant do that? Perhaps there is a desperate, viseral need to not admit the models are running too warm. I am not being snide. 🙂

Lucia,

I do not understand your point. And I am not even sure you understood my approach.

To clarify, here is another plot.

In blue I have plotted the same uncertainty intervals as before. These are calculated with arma(1,1) parameters estimated over the period 1975-2011. E.g. for the data point in 1999 the trend is calculated from January 1999 to June 2011, while the arma-parameters are estimated over January 1975-2011.

The reason to do this is that Tamino explains that you need lots of data for estimating the arma parameters reliably.

In green I have plotted uncertainty intervals calculated with arma-parameters estimated over the same periods as the trend. E.g. for the data point in 1999 the trend is calculated from January 1999 to June 2011, as are the arma-parameters.

http://i47.tinypic.com/1zvwrwz.jpg

Besides that, Tamino does not compare the trend estimates from that graph with the climate models. He is only comparing trend estimates over different periods of time to see whether they are significantly different from the long term trend since 1975.

The word climate model is not mentioned in his post. It was PeteB and you that started comparing with climate models.

Tamino’s approach is purely statistical and could be applied to any time series with the same statistical properties, regardless of the data being temperature or no. of potatoes eaten pr. year.

I think your accusation of Tamino doing cherry picking is wrong in this case.

Steve F,

Tamino was not comparing these trends with climate models

I thought we had established that volcanoes had minimal impact, especially compared to ENSO?

.

The way I see it, *your* method consists in faulting the model for failing to capture middle-term fluctuations (like ENSO) which the ARIMA fit (on monthly data!) cannot “see”.

.

The problem with this is that the models don’t claim to be able to capture these fluctuations in the first place.

Hehe! Thanks Lucia. You’re always entertaining and certainly right. 🙂

@SteveF: “Smart Guy Grant” extrapolated linear interpolation of a time series for decades in the conclusion of his recent paper. That’s the kind of mistake that new users of Excel (“Hey, Apple’s up 10% today, so if I wait for a month, it’ll be up 1700%!”) make. I think he’s very smart, but suffering from a severe case of confirmation bias.

SRJ (Comment #104009)

You repeat here:

What I am saying is given our knowledge of what happened with volcanic eruptions during the period from 1975-2011 and and what happened during the period from 1999-2001, computing the arma parameters using data from 1975-2011 and usign them to compute uncertainty intervals for trends for the period from 1999-2001 is both cherry picking and cheating. That is: I am saying the uncertainty intervals computed the way you computed the blue ones is “cheating” and “cherry picking”.

Have I understood what you did correctly?

I never said he compares them to climate models. In a comment I pointed out that he does not compare them to climate models. In fact– I’ve noticed that he has gone utterly silent on this topic. 🙂

So? People are allowed to discuss other features. Goddard didn’t mention trends from 1975, but when discussing Goddards post about trends since 1975, tamino added trends from other years.

Then show it. The fact is: It is well accepted that volcanic eruptions cause ‘dips’ with subsequent recoveries in temperature series. It is well accepted this is a deterministic effect. I only mention models to show an example where this is reflected– but the principle is well understood. Moreover, Tamino knows it because he includes volcanic aerosols in some of his multiple regressions.

So: Tamino knows that an important effect:

1) Volcanic aerosols would result in high deviations from the linear trend during the period from 1975-2011.

2) That such deviations would not occur during the period from 1999-now.

3) That the character of the ‘noise’ would be affected by this.

4) That if you estimate the character of the “noise” using the period with volcanic eruptions, that “noise” will have a) higher standard deviations from the mean and b) higher auto-correlations and result in higher estimates of the ‘variability of trends’ for repeat samples.

5) He either knows– or ought to know– that the estimates using ARMA are supposed to be estimates of the variability of repeat samples over the same time periods with similar forcings. They are not supposed to be estimates over repeat samples were possibly a volcano might have gone off in 2001 (etc.) but we just don’t know.

So, he either knows or ought to know that computing the ARMA11 parameters to describe uncertainty as if we don’t know that volcanic eruptions went off and applying them to a period in which we know they did not go off is incorrect. Since he either knows or ought to know that this method results in higher uncertainty intervals that one would get if these were estimated fairly and he gets an answer he seems to “like” better than he would otherwise, what he is doing is both a) cheating and b) cherry picking.

If you think what he did is not cheating or cherry picking you are going to have to explain why it is ok to compute the ARMA parameters using data from 1975-2011 knowing volcanic eruptions were rife during that period and then applying them to the period from 1999-2011 knowing that no volcanic eruptions occurred during that period.

SRJ

I want to comment on this:

Yes. And using your analogy I still conclude his method is wrong. It would be wrong to estimate the variability in potatoes eaten during years when we know crops did not fail basing the estimate of variability using a period that included years when crops failed repeatedly (e.g. Irish Potato Famine). Moreover, if he knew that the “crop failure” factor differed in the period when he estimated variability and nevertheless applied it during the period when it did not, and doing so got him an “answer he liked better”, this would be classic cheating and cherry picking.

@SRJ: Let me try to explain, perhaps from a slightly different angle.

There’s a tradeoff between a model being more useful and a model being more falsifiable. Huge error bars are generally a bad thing, since they make a model less useful (even less credible, perhaps), but they do make it less falsifiable. Predict a huge range of things and you’ll probably be correct, though no one will really care.

So, in general, we don’t want to lump lots of things into the catch-all “noise” category, we want to pull out things that we can model and model them, decreasing the noise.

For example, I was once modeling electricity usage for an organization and my first, naive cut at it resulted in forecasts with +/- 50%. Try presenting that to someone working on a budget! So I dug in deeper and found several things I could model and finally got the forecast interval to less than +/- 10%, which was useful.

In Tamino’s case, his incentive is for the opposite: enlarge the error bars to make the model less falsifiable. And I think that’s what Lucia’s getting at: he’s not pulled out known effects, but is leaving them in the “noise” category to increase his error bars. (Which is the only thing saving him at the moment, since the point estimates look very unfavorable.)

You tried to address this by using shorter ranges of data, but you didn’t actually change the components or characteristics of the “noise”. (In fact, as you noticed, you made things worse since your parameter estimates’ CI’s probably exploded as you decreased your data length, so your second set of error bars was increasingly nonsensical.)

So the appropriate next step is to dig into the data and pull appropriate things out of the “noise” category and into the “modeled” category. Perhaps there is nothing to pull out and it really is all noise. Lucia disagrees.

If I understand the argument.

Wayne– It’s worth nothing that Tamino himself explains part of the deviations using metrics that capture the volcanic effect. If he consistently denied that volcanic eruptions do cause deviations, I would not consider this “cherry picking” or “cheating”. I would just disagree with his view on whether or not volcanic eruptions can cause temperatures to dip and then rise again.

But I know that Tamino– like pretty much everyone else including modelers– does ascribe dips and recoveries to Pinatubo. He does not dispute that the multi-model mean is correct to show these dips after eruptions that are sufficiently violent to throw stuff into the stratosphere. In fact, other posts indicate that he seems to agree this should be so.

Despite that he periodically resorts to ignoring this when computing uncertainty intervals in those posts where it would suit his narrative to have wider uncertainty intervals.

That’s why I call “cheating” and “cherry picking” rather than just a mere disagreement over what volcanic eruptions do.

SRJ (Comment #104009),

No, Tamino did not compare to the multi-model mean. But it seems to me reasonable to see if Grant’s analysis is a) correct (it is not) and b) if his analysis approach, when done correctly, has implications for the statistical validity of the AR4 climate model projections (it does, the models are running warm). Do you not find this interesting?

Wayne2,

“I think he’s very smart, but suffering from a severe case of confirmation bias.”

Of course, just as most climate scientists seem to, especially the “stars” in the field.

.

Which is not to say the same does not happen in other fields (it does!). But the large public policy consequences and potential societal costs make confirmation bias in climate science less acceptable than in any other field, including drug trials, where despite explicit and widespread efforts to reduce bias, it’s influence is obvious. In climate science there doesn’t appear to be even a halfhearted effort to reduce confirmation bias. It is a sad commentary on the maturity of the field: importance similar to nuclear weapons, conducted like it were ‘trendy’ research in psychology.

I did not receive an advance copy of Mann’s book; I purchased and reviewed the Kindle edition which was released January 24th, as can be seen on the Amazon page:

http://www.amazon.com/Hockey-Stick-Climate-Wars-ebook/dp/B0072N4U6S

and you will notice that my review is labeled with:

“Amazon Verified Purchase” which proves that I purchased the book *before* I reviewed it.

I would note that diogenes’s accusation above is a perfect exemplar of the conspiracy ideation which Stefan Lewandowsky has been investigating. I do appreciate Lucia somewhat standing up for me as not being an “attack dog”, but nobody seems to have taken the simple step to discover that diogenes claim was completely unfounded – so much for “skepticism”. Thanks for another datapoint diogenes!

Lucia,

your interpretation of my approach is correct.

“If you think what he did is not cheating or cherry picking you are going to have to explain why it is ok to compute the ARMA parameters using data from 1975-2011 knowing volcanic eruptions were rife during that period and then applying them to the period from 1999-2011 knowing that no volcanic eruptions occurred during that period.”

Because the graphs is produced as a response to people claiming things about trends with different start periods.

Tamino shows what happens if you start you calculation in different years. It is just a simple statistical exercise, not considering of the underlying physics or data generating process (though I hate that word).

If I understand Taminos points right, he would also argue that using data only from 1999-2011 gives too uncertain arma-parameters.

In any case, I do not think that he is cherry picking. My impression is that he used that used data from 1975-2011 to make sure that his estimates of the arma-parameters were reliable enough, implicitly assuming that the arma(1,1) model is valid over the whole period.

Well, I think we will have to agree to disagree on this matter about Tamino cherrypicking or not. I think he had valid reasons for the choices he made about the data used for calculating uncertainty intervals, you disagree.

I do see your point about volcanoes affecting the noise variability. We can loosen up the restriction about having lots of data and then calculate the arma-parameters over the same years as the trend. I.e. we are trading off some reliability in the parameter estimates but then we are taking into account the changing properties of the noise, due to e.g. volcanoes.

I showed the result of that in my previous comment.

Taminos point was that no trends are significantly different from the trend since 1975, and that still holds true using my modified approach (green lines). Using your approach with arima(1,0,1) the last three trends are different from the long term trend since 1975.

I agree that you can do more complicated things to estimate the uncertainty, the approach from Taminos graph is just a first quick estimate, at least that is how I think it should be considered.

I will be curious to see how you guys would do this in a more rigorous way that could deal properly with volcanoes.

Lucia, I have not been following these threads closely but in looking at Tamino’s R code I find he is using the sd of trends, whereas, as I recall, in earlier threads you talk about using the se of the trends. Here is the function he develops for obtaining the regression trend slope and the sd of the slope for evidently ever shortened periods of time coming forward. It is almost like the difference one might expect using confidence interval limits versus limits from prediction intervals for a trend. I just had a percursory look so I might be mistaken in what I see.

ms_and_sms<-function(temperatures,year=1975){

norm_months=c(1:length(temperatures))-mean(c(1:length(temperatures)) )

fit=arima(temperatures, order = c(1,0,1), xreg=norm_months, include.mean=TRUE )

var_m=fit$var.coef[4,4]

m=as.numeric(coef(fit)[4])

return(list(m=m,sm=sqrt(var_m),year=year,n=length(temperatures) ))

}

I am in the process of using Monte Carlo simulations with Arima models to estimate CIs for the observed results of a GHCN segment and comparing those results with the more commonly used methods.

Back to the topic of this post – I actually downloaded Lucia’s R code (thanks Lucia!) and extended the plot to 2008. Interestingly, it’s only a brief period (2001-2005) that “rejects” in this way. 2007 is wildly in the other direction. It’s a nice way to show that things bounce around a lot, but I’m not sure it tells us anything (either what Lucia or Tamino were trying to communicate).

Arthur Smith:

I’m kind of there with you. As toto points out short-period climate noise is dominated by ENSOs, which are highly variable, as AFAIK unpredictable, and this variability is poorly if at all captured by climate models (see e.g. the AR4 summary, if I’m reading it right, their ENSO amplitudes are almost uniformly too small by a factor of 10 in amplitude.)

I understand that Lucia was just redoing what tamino had already done, only maybe more correctly, so this really is addressing the underlying premises put forward by tamino, who dreamed up the exercise to start with.

Anyway, IMO the underlying problem is that the ensemble of climate models are not capable of producing realistic climate noise over these time periods, so you’d have to adjust the models for this additional noise—which the models aren’t yet up to being able to predict—if you really wanted to statistically compare observed to modeled trends.

Put it another way, IMO, the apparent failure of the models to validate is really the failure to produce realistic ENSO patterns, and that is thought to be substantially a resolution issue (granularity of the models).

It’s not a failure of the physics of the models if a realistic solution requires a bigger computer than they can currently fit their models into (unless there were another method that allowed a more compact representation and a more efficient solution, but that doesn’t seem to be in the cards).

SRJ

Huh? The graph was produced in an article commenting to Goddard’s post writing something about a trend since 2002. Goddard didn’t say anything at all about different start periods.

Tamino added what he wanted to add to make points Tamino wanted to make. That’s pretty standard. But people get to observe, criticize of comment on Tamino’s cherry picking. The could do so even if all Tamino had been doing was commenting on people claiming things about trends with different start periods!

And in doing show, he elected to show uncertainty intervals which are computed using a flawed method. And his discussion goes on and on about what we learn from the magnitude of the uncertainty intervals.

If he didn’t cherry pick periods to compute his uncertainty intervals he would have showns the green ones– not the wider blue ones. My cherry pick statement applies to his decision to use a method that showed the blue uncertainty intervals. That’s it.

Goddard showing the trend since 2002 was also a “just a simple statistical exercise, not considering of the underlying physics or data generating process”– and Tamino criticized his choice as cherry picking. The fact is: being “just a simple statistical exercise” does not preclude cherry picking. Of if it does preclude it, you have to decide that Goddard did not cherry pick by using 2002 as a start year.

This is a claim Tamino makes. As with most cherry picks, it is covered with a plausible excuse. (For example: Goddard’s cherry pick is justified as being a 10 year trend- which is a round number. Tamino criticizes Goddard for this. But it is true that 10 years is a round number and it is true that people often report 10 year trends precisely because they are round numbers.)

On the truth part in Tamino’s claime: Using a short data periods does give uncertain data parameters. This is true for all statistical analyses.

But the correct way to fix the problem of possibly too small error bars due to a short period is to run monte-carlo to estimate the effect and fix it (if it exists). Finding a period with uncharacteristically high variance due to volcanic activity is not the correct way. It’s cherry picking and remain so even if one can can concoct a ‘cover story’ like “Ten is a good round number” or “I wanted a lnger period of time.”

If estimating since 1975 is somehow better than estimating since 2000, why not estimate the uncertainty intervals using data since 1900? Surely if 36 years gives less uncertainty in our knowledge of the ARMA parameters, using 112 would do an even better job, right? Why not pick that period? These are real questions and they cut to the issue: Why start in 1975 to “freeze” your ARIMA model? I would suggest you do this exercise bearing in mind that all the data are known to the analyst before he or she selects 1975 as the start year.

If you mean we could use the green trace. Sure: We could. Had Tamino used the green trace, I wouldn’t say he cherry picked. I would merely say he got a graph based on data that ended in June 2011– which would have been fine. But of course we’d update as we got more data.

I don’t disagree that Tamino could have said substantially the same thing back in july 2011 withouth cherry pickig his uncertainty intervals. I merely pointed out that — among other things– he did cherry pick them. He did: He showed teh blue cherry picked ones rather than the green ones.

Moreover, if you look at the green ones in your graph, had he shown the green trace, we would see that using a start year of 2002, the IPCC projects were now outside the ±95% confidence intervals of consistency with observations. Note your greent race falls below 0.2 for the final point.

Because the nothing in the 20th century can possibly be considered a test of forecasting ability, the green trace falling below 0.2 C/decade after 2000 has important implications with respect to diagnosing any possible bias in the multi-model mean for climate models. Even if Tamino elected to not show the IPCC trend of 0.2 c/dec and even if he didn’t discuss where that trend fell relative to his computed uncertainty intervals, I have very good reasons to believe that Tamino would not have wished to show that green trace falling below the dashed red line on any graph he creates. Ever .

As I said: I would not have criticized Tamino for cherry picking if he’d used the green trace. It’s acceptable.

Sure.

But that’s a pretty big subject change relative to anything I wrote about in my post. My post is a response to a question from PeteB. PeteB wanted to know why my uncertainty intervals were smaller than the ones in Tamino’s post.

Was it because of the extra year of data? (Yes. In part) Was it the method? (Yes. In part– Tamino used a non-standard method that permits him to cherry pick to find periods with different sized intervals. He picked ones that result in smaller intervals– tha tis he gets your wider blue ones rather than the green ones.)

I engaged PeteB’s questoin and gave him his answer to his question. This is permitted even if Tamino was discussing something else. And Tamino was cherry picking to get wider uncertainty intervals even if he wasn’t testing models.

If it’s quick– why don’t you make a graph using data through August 2012. It would be interesting to see Tamino’s graph updated using your blue and green bands. I bet PeteB would like to see it too.

Lucia – Tamino certainly uses volcanic aerosols as suits him. The effect of Pinatubo was prominent in the Foster-Rahmstorf paper arguing that Hansen had got all of his predictions spot on.

From their abstract: “When the data are adjusted to remove the estimated impact of known factors on short-term temperature variations (El Niño/southern oscillation, volcanic aerosols and solar variability), the global warming signal becomes even more evident as noise is reduced.”

Meanwhile, if the trendline has error bars which equate to +2.5C to -1C per century, we should perhaps remain calm. 🙂

Arthur Smith (Comment #104027)

Can you post a plot of the results?

Arthur

It only rejects in the period where one might test forecasts: That is, in the 21st century. The models aren’t so bad if they are testing using data available for tuning while they were ‘built’.

Everyone agrees that “fail to reject” over short periods doesn’t mean much. I’ll post the graph in a second. I’m not sure what you mean by wildly the other way” in the final year. What I see is the uncertainty intervals grow and for short period of times we “fail to reject” both the hypothesis that m=0 C/dec and m=0.2 C/dec. Fail to reject at very short intervals is not– by any stretch of the imagination- a contraction of “reject” at longer intervals. I think you understand this when people are crowing about Phil Jones admitting that we have “no statistical warming since 19??”

Carrick (Comment #104029),

While this is only for ocean areas, what jumps out (for me) is how clear the volcanic influence becomes and how much reduced the the overall variability is… and even more so if you were to subtract some reasonable estimate for the volcanic effects; the whole trend would be pretty smooth, with a fairly steady rise from 1979 to 2001, and flat to slightly falling post 2001. The other interesting thing (off topic) is the appearance of “tropospheric amplification” short term, but its complete absence long term.

While this is only for ocean areas, what jumps out (for me) is how clear the volcanic influence becomes and how much reduced the the overall variability is… and even more so if you were to subtract some reasonable estimate for the volcanic effects; the whole trend would be pretty smooth, with a fairly steady rise from 1979 to 2001, and flat to slightly falling post 2001. The other interesting thing (off topic) is the appearance of “tropospheric amplification” short term, but its complete absence long term.

“so you’d have to adjust the models for this additional noise—which the models aren’t yet up to being able to predict—if you really wanted to statistically compare observed to modeled trends.”

.

Or adjust measured temperature trends to account for the influence of ENSO sort of like this:http://i45.tinypic.com/166g7bc.png .

@SteveF: The more I think about it, the more I believe Tamino is very good and thorough with the math. He’s just not very good at the deeper issues of making reasonable hypotheses, models, etc.

Like I said, I looked at F&R 2011 and couldn’t believe the final two sentences: “This is the true global warming signal. // Its unabated increase is powerful evidence that we can expect further increase in the next few decades, emphasizing the urgency of confronting the human influence on climate.” Essentially projecting a straight line for “a few decades” off the end of his data.

In that paper, he lumped everything other than the three natural forcings they were considering into a catch-all “global warming” category, which obviously maximized that. Now, he juggles things around and lumps the same natural forcings into “noise”, when it suits him, as Lucia points out.

(The example I gave earlier about electricity forecasting was a real eye-opener to me. I knew that I was lumping all kinds of things I didn’t want to worry about into “noise”, but didn’t realize how worthless it would make my forecasts. I had one of those heart-in-your-throat moments when I imagined telling people, “I project you’ll spend $200,000 next year for electricity… plus-or-minus $100,000.”)

SteveF, if your point is to use 30-year trends, that is one I can endorse. I don’t think any of the people calling for immediate action are going to be very happy about it though.

(I know yo know this—using smoothed data is visually useful for humans, but unless you are doing non-linear processing, there isn’t any advantage in terms of trend uncertainty in presmoothing the data.)

It is striking how much less noisy the oceans are than the land surface air temperature. You kind of expect this when making measurements in the surface boundary layer, which is stirred up by convection and weather, but … to me still striking.

To followup on that comment, you can’t use a 20-year period of overlap between model and data to validate the model, if the data were present when the model was written (Lucia’s point to I think).

You really need 30 years of solid data, 20 is an absolutely minimum. I had done this trend uncertainty using a Monte Carlo based approach and assuming homoscedasticity (this is a best case assumption, if Hansen is right, that means the variability is increasing over time, and the period you need to use to resolve 0.2°C/decade just gets longer). The 95% CL from one of Lucia’s posts are shown for comparison.

I don’t recall the particulars of how she obtained those estimates or whether they differ from the ones shown here, but it’s clear she’s not understating the uncertainty in her analyses.

Carrick,

Smoothed data (11 month centered average) is for human consumption only, and has no influence on trend uncertainty. 😉

.

My point was that adjusting raw measurement data by removing known (identified) short term influences like ENSO and volcanoes reduces variability, and helps to reduce uncertainty in the underlying secular trend. As to whether or not 30 years is better than some other period: I guess it depends on the data itself. In my graph there sure looks to be a change in the trend near 2000; whether or not that is statistically significant can be tested, but the chance of finding a significant trend is improved if known sources of short term variation are first removed from the data.

.

If there is a pseudo-oscillation with a period of ~60 years and an amplitude of ~+/-0.1C, superimposed on a GHG forced trend, as many have suggested, then looking at a 30 year period (say 1970 to 2000) could give an exaggerated estimate of the response to forcing, while 2000 to 2030 might give an underestimate of the response to forcing (as I am sure you are aware).

Wayne2, X +/-50%X is a quite reasonable range in many things.

Have a go at how much will be spent on my healthcare next year; nice error bars.

Official US inflation figures now exclude anything most folks might actually buy (food, fuel…), I believe. So it’s like 2-3%?

There is a samizdat inflation figure based on what used to be included. It’s strangely different. 10%+.

Just saying, if we’re excluding things….

SteveF:

OK I wasn’t sure that’s what you were talking about. Of course if you have an additional explanatory variable, I agree it helps to remove it.

Example: I’m characterizing a sensor at the moment that is grossly sensitive to temperature. I also expect it to be sensitive to atmospheric pressure (physics based reasons why it has to be), but if you don’t cofit for temperature and atmospheric pressure (or first subtract off the temperature dependence), good luck extracting out the % effect of e.g., a 1kPa increase in atmospheric pressure.

@Carrick (Comment #104039): You say “if Hansen is right, that means the variability is increasing over time”. Do you mean his recent paper (nicknamed “Loaded Dice”)?

I’ve actually been walking through that paper/data and as far as I can tell, he only proves that if the mean in era B is greater than the mean in era A, maximum values in era B will tend to be farther from era A’s mean than maximum values in era A are. That’s so blindingly obvious that it’s easy to misunderstand and think he’s talking about variability in era B versus variability in era A, but he’s not.

(Not to mention that era A ended and era B began in 1980, so isn’t very applicable to the current discussion which begins in the 1990’s.)

Or were you talking about another Hansen paper?

Lucia

(thanks for fixing my graphs so they show up in the comment)

“If it’s quick– why don’t you make a graph using data through August 2012. It would be interesting to see Tamino’s graph updated using your blue and green bands. I bet PeteB would like to see it too.”

Sure, no problem. I have also added the estimates from the arima-function, dashed red is trend estimate, thick red is 95% CI. To make comparison with my earlier plots easier the last trend showns is from 2003-2012, as before.

http://i45.tinypic.com/2j4t086.png

14 more months data do make CI’s smaller, so that the upper CI for the long term trend since 1975 now is just above 0.02 for the approaches using OLS and adjusting via the arma parameters. The CI’s for the long term trend from arima(1,0,1) do not include 0.02.

“If estimating since 1975 is somehow better than estimating since 2000, why not estimate the uncertainty intervals using data since 1900? Surely if 36 years gives less uncertainty in our knowledge of the ARMA parameters, using 112 would do an even better job, right? ”

My answer: Because since 1900 you cannot model the temperature as a simple linear trend + noise. There is a breakpoint in the temperature series somewhere around 1970 plus minus 5 years, depending on the method you use to estimate the breakpoint.

So to keep it simple, one starts in 1975, after the breakpoint.

I guess one could fit a piecewise linear model and then estimate the arma-parameters for the residuals from that model.

” if Hansen is right, that means the variability is increasing over time, and the period you need to use to resolve 0.2°C/decade just gets longer). The 95% CL from one of Lucia’s posts are shown for comparison.”

Well, its largely a methodological artifact. stay tuned.

@SRJ: Thanks for you graphs, etc! I’d note that in the best case (blue line), Tamino is vindicated, but in the other two cases he is not. But even in the best case, the lower bound for starting years of 1997 and later includes zero. Seems to me that’s a problem in and of itself.

Hi Lucia – I have a comment (#104023) in moderation regarding diogenes’s attack on me in this thread – since his comment contained a provably false statement I hope you will either approve my comment or snip the entire discussion. Thanks.

I don’t know how the IPCC projections could have predicted the observed global temperature numbers much closer than what we have seen. All these statistical machinations trying to challenge AR4 are silly as there simply is not enough data to say anything useful about the success of their projected temperature trends.

–

On the other hand there is starting to be enough history to get some ideas about the validity of AR3 projections. Those projections, based on models run in the late 1990s, now have around 8 years of post-dictions and 14 years of predictions to check against observations. UAH, for example, shows a 0.17C/decade LT warming since 1990 vs the AR3 predicted 0.18C/decade.

http://s161.photobucket.com/albums/t231/Occam_bucket/?action=view¤t=fig9-15ahighlighted.gif

–

As to these graphs showing trends from different start dates, (which again include trends based on dubious amounts of history), they would show remarkable agreement with AR3 if they included the impact of the ENSO and solar insolence – as I have done below.

–

http://i161.photobucket.com/albums/t231/Occam_bucket/LTModelVsObsTrend.gif

–

–

PS.

Are there directions somewhere on how to insert a graphic in a post?

Arthur–It’s released from moderation. I didn’t realize it was there– it must have been there a while. Sorry I didn’t notice sooner.

Dave E–

The directions for inserting an image are:

1) insert a link to the image itself.

2) Wait for lucia to notice and add the html.

WordPress automatically strips html for images so you can’t do it yourself.

The Kindle edition of Mann’s The Hockey Stick Wars was published January 24 2012. Arthur’s review appeared February 1 2012. The review specifically states that Arthur read the Kindle edition, and the publication date thereof is easy to find. Diogenes’s (and some others) owes Arthur an apology.

I don’t know why you think this.

The third report was not called the AR3– it was called the “TAR” (T for third.) The second was SAR. The difficulty was that FAR would then be first, fourth, fifth and so on. So it’s the AR4. As for the projections in the TAR– that report predicted slower warming than the AR4. We are seeing less warming than in the AR4.

Wayne2,

” But even in the best case, the lower bound for starting years of 1997 and later includes zero. Seems to me that’s a problem in and of itself.”

This is often interpreted as meaning that you need to consider periods longer than 15 years, i.e. 1997-2012 to find significant trends. JeffId made a post in 2009 where he did this:

http://noconsensus.wordpress.com/2009/11/12/no-warming-for-fifteen-years/

Tamino have a similar post called “How long”.

In discussion of these kinds of graphs at SKS some statistically skilled person wrote that it is actually better to plot the statistical power of the t-test as function of the length of the trend period. Then you can see when the power becomes reaches some required level, and then you will know how many years of data you need to be able to detect a trend.

Btw. I think that linear trends are used way too much in climate discussions. It gives just too much discussions about when to start or end the calculation. I like the approach that Gavin Simpson is introducing here:

http://ucfagls.wordpress.com/2011/06/12/additive-modelling-and-the-hadcrut3v-global-mean-temperature-series/

Arthur–

You seem to think that not doing ‘research’ to clear you of whatever it was diogenese was accusing of you of indicates a lack of skepticism. I’m not going to spend time doing “research” to discover which books you’ve read or reviewed on Amazon, how you came to get the book, whether or not you followed Amazon rules etc merely because diogenese wants to come in and throw an OT stinkbomb to derail comments. My reasons for not doing it is that

a) I think discussions of who reviewed what at Amazon are boring and I don’t care.

b) I don’t want to waste my time pursing that issue because I don’t care about it.

c) I want to spend my time discussing the topic of this post and

d) I am perfectly content to wait for you to provide information available to you on this topic.

I suspect a number of other people neither believed of disbelieved digonese. And mostly, the thought that entire OT conversation was boring and not worth wasting any thought on.

I’m glad to see that you did read the book before reviewing. (I’d assumed that was diogenes complaint when I first read it. I never imagined he thought the possibility that you read it before the ‘official’ publication date was “the problem”. I guess I have now learned something (which I will make no effort to bother to remember.)

SRJ

Do you mean they did something like this:

http://rankexploits.com/musings/2008/falsifying-is-hard-to-do-%CE%B2-error-and-climate-change/

This is not quite right. Among other things, the power of a test is a function of

a) How far wrong the null hypothesis actually is.

b) and the confidence level you use to decree “statistical significance”.

c) the properties of your noise and

You never know (a), so when designing an study, you substitute a “detection threshold” to estimate the amount of data you will need to collect if the null you want to check is off by some specified amount.

So, for example, the AR4 has a nominal warming trend of ‘about 0.2C/decade’ and looking forward, we might estimate the variability to be what we saw in the past. ( Looking forward, we don’t know if volcanoes will erupt, so it’s fine to use those periods when predicting when you’ll see statistically significant warming. This estimate already includes the volcanic eruptions.)

Once you can do a calculation that tells you the probability you will reject the null of no warming (m=0C/decade) under the assumption that the actual magnitude of warmign is 0.2C/decade.

But what this does not tell you is “how many years of data you need to be able to detect a trend” . You actually need to specify the magnitude of the trend that you think exists.

But also: no matter what answer you get for your power, if you get a reject it’s a reject. The power estimate is only important if you have a fail to reject. In the graphs above, the model is telling us to reject the multi-model mean of 0.2C/decade. There is no “minimum number of years” for this finding to be meaningful because it’s not a fail to reject type finding.

Linear trends are used for testing the AR4 forecasts because the multi-model mean is nearly linear for the few decades of this century. So, while I have no objection to what Gavin did in that post– most of which applies to the hindcast not testing models– the fact is, if a forecast has a linear trend in its expected value, it’s fair to test that hypothesis by saying the individual realizations are “linear + noise”.

The Kindle edition of Mann’s The Hockey Stick Wars was published Jan 24. Arthur’s review appeared February 1. The review specifically states that Arthur read the Kindle edition, and the publication date thereof is easy to find. Diogenes’s (and some others) owes Arthur an apology.

Dave E.,

Please explain how you accounted for the influences of ENSO and TSI; without some explanation, the graphic does not mean much.

I wasn’t going to discuss the off-topic stuff again, but… there’s no way I can ignore this nonsense from Arthur Smith:

diogenes was wrong. Does that make his accusation “a perfect exemplar of the conspiracy ideation which Stefan Lewandowsky has been investigating”? Not at all. The coordinated PR move I referred to above is known to have happened. One can find undisputed information about it with a quick Google search.

Interestingly, Arthur Smith claims diogenes’s remark is “completely unfounded” because we can see he purchased a copy of Michael Mann’s book. However, he overlooks the fact purchasing a copy in no way prevents a person from having received an early copy.

In any event, I’m not sure “conspiracy” fits what was done, but if believing coordinated PR moves whose existence are public knowledge happened counts as “conspiracy ideation,” Lewandowsky’s work is even more rubbish than I thought.

Arthur:

Well in my case, and I suspect I speak for most everybody else who didn’t respond, I really just don’t care one way or the other.

Sorry I don’t find you and what you do particularly interesting. What you write here or other places I read, as it pertains to what I find interesting to contemplate or comment on, substantially more so. However, I didn’t seek out and read your review of Mann’s book, nor do I intend to, nor do I intend to read Mann’s book. Just not interested.

Never blame lack of skepticism when indifference will do as well. I figured if you wanted to defend yourself, you’d do so.

Sometimes I just shrug off criticism of myself rather than respond. You have the same freedom. There’s only so many daylight hours and I have other priorities that loom bigger than what somebody is saying about somebody on the internet.

Of course there’s a certain irony about Arthur making certain comments about Lucia on tamino’s blog (a place where she got banned for the temerity of noticing an error on the blog owner’s part), knowing full well she can’t respond to those comments on that blog. In fact these non-skeptics quite regularly engage in patting each other on the back in their little sport of kicking around people who don’t, can’t or are simply unaware they are the topic of somebody else’s criticism.

Arthur and Eli both probably want to skate softly on this point.

Carrick, I’m not sure what comments you’re referring to since I rarely visit that site, but according to a recent remark from Tamino, lucia isn’t banned for pointing out his mistake. He says:

😉

Brandon, I ran across that comment by accident.

Tamino can get very angry when you point out mistakes in his reasoning. I know that personally, and stopped going to his blog simply because if you can’t have reasoned discourse and you just have to take everything he says as gospel and smile, what’s the point?

Brandon Shollenberger

I guess historical accuracy is not his strong suit. He banned me after I pointed out that the two-box model he claimed we should have some sort of “extra” confidence in because it was somehow physically based violated the 2nd law of thermo. And I was right.

He did stop claiming we should have some sort of “extra” confidence in his model. This post is related to “the incident”.

http://rankexploits.com/musings/2009/two-box-models-the-2nd-law-of-thermodynamics/

“Please explain how you accounted for the influences of ENSO and TSI; without some explanation, the graphic does not mean much.”

——————————————————————————–

True. This is the basic formula I used for a predicted monthly global average temperature (assuming I didn’t screw up something transcribing it from my spreadsheet):

–

–

M: is the months since 1990

TSI: Total Solar Insolence Anomaly, W/m^2 (lagging 30 day avg)

ONI: ONI index, C (lagging 3 months)

AGW: monthly increase, (0.18C per decade/120)

P: Predicted Temperature

P=AGW*M + [(0.16*ONI)^2 + (0.2*TSI)^2 ]^0.5

Also, I apply some thermal inertia by averaging the predicted value with the previous month’s actual value.

–

Not optimal, but I think it captures most of the natural component produced by the ENSO and Solar variation.

The shape of the curve is not very sensitive to changes in selected sensitivities.

–

This clearly shows that the deviations from the IPCC predicted trend for years after 1990 through to 2012 are due to natural cycles, not changes in the AGW forcing.

Or in other words there is no evidence that there is any significant change in the AGW trend since 1990, which is about 0.17C/decade in the UAH data set.

Just had a chance to read back up thread and saw this comment from Lucia:

This reminds me of what should be but isn’t a famous story (relating back to Arthur Smith’s review of Mann’s hockey stick book):

Mann used the gacked-up argument that the correlation coefficient is always one if you are comparing two data sets that contain a linear trend (this is an example of fails to reject) as a reason not to use R2 in his data, where in fact his problem was a “fails to verify”. R2 was too small, and that is a serious issue.

I noticed Mann has stat course notes on line.

That should be interesting. 🙄

lucia, I apologize in advance for going off-topic, but:

That’s often the case with people like him. In fact, that sort of thing is one of the reasons I hold no respect for Arthur Smith. About two years ago, Arthur Smith was going on and on about people not providing evidence for Mann’s bad behavior. He then used that idea to smear Mann’s critics and basically say they should be dismissed out-of-hand.

I responded, putting serious effort into explaining and demonstrating a number of simple and easily verifiable points. Smith constantly made excuses and avoided addressing anything substantial. Eventually, the discussion narrowed to a single point (Mann’s hiding of R2 verification scores), and he said he would look into it. About a year later, he responded to me on another blog saying:

A year after forcing me to put a large amount of effort into giving him evidence, he flat-out said I didn’t provide it. When people simply make **** up to try to rewrite history to paint their critics in a negative light, they lose any credibility they might have (at least, in my eyes). And yet, it seems to be considered perfectly appropriate by people like Arthur Smith and Tamino.

Carrick:

In a related matter, it seems the standards of what is and is not a “good” measure of something’s validity often change based on convenience. A test may be “good” when it gives one result, but later when it gives a different one, it is suddenly “bad.” Or at least not the test discussed and promoted.

In Mann’s case, he included the R2 scores for one (1820) step of his reconstruction in his paper. Later, when criticized for not publishing adverse R2 scores, he and his defenders (including Smith) argued for the idea that R2 was a bad measure of skill.

Personally, if I got results I believed were right, I’d be willing to discuss any measure of skill people thought was useful. I wouldn’t say it’s a bad measure and thus it should be ignored. I’d explain why my results failed that measure and how it didn’t indicate a problem in my case.

Which is kind of why I’ve followed these matters for so long. If I wanted people to believe we needed serious changes to combat global warming (or any other threat), I would be bending over backward to get them to understand my position in as much detail as they were willing to consider. Instead, it seems the exact opposite is true.

Brandon, foolishly challenged by Boris, I gave a fairly long list of the errors of judgement and substance in Mann’s paper on this blog. You’d have to write a book to get them all. And even then you’d have to leave stuff out.

I’m afraid I’m entirely credulous of people who insist that Mann can do no wrong and that his mistakes are all in the heads of his critics.

Even if you don’t like the new reconstructions, MBH98 got it wrong. New papers aren’t so much refinements of his method as corrections to it.

I also chortle every time somebody says “well we fixed this error and it didn’t make any difference”.

This is often from people with math backgrounds who should know better.

Look if you expand a function in order epsilon:

F(x) = F_0(x) + epsilon F_1(x) + …

suppose F_1(x) has four terms. And you include one.

What order is the resulting expression…. ans: O(0) in epsilon.

Now suppose you add one more term of order eps.

It’s still O(0) until all of the corrections of that order have been made.

Strictly speaking fixing the uncentered PCA did change the answer, it did make a difference (the reconstructions using centered PCA don’t overly the ones that don’t use centered PCA, even with nearly the same data set), that is not the same as “did not change the answer”

But the answer’s still wrong because you haven’t corrected everything of equivalent order. That’s really not complicated, I have to assume people are being intentionally obtuse not to grasp obvious facts like that.

Except the ones who really are obtuse. We know who they are already so no need to enumerate that list.

Cantankerous egotistical fanatics like Mann and Tamino are more dangerous to their friends than their critics, imo.

Carrick, I don’t think a book would be wise due to structural reasons. A web site would be much better since you could split/reference things effectively.

In fact, I once considered making a website that would do something like that. Basically, the idea is the site would cover all the aspects of the hockey stick debate. The idea is people often say one problem “doesn’t matter” while hand-waving at other things which have their own problems. Then, discussion loses focus/breaks down without anything getting resolved. A web site like I envision could prevent that by having every issue covered in one place. And because it was a website, I could split off discussions of individual details into separate spots so they wouldn’t interfere with discussions of other matters.

My hope for something like that was by making everything simple and easily accessible, many of the pointless disagreements I saw could be stopped. If it worked, I could expand the focus to cover some other topics. And in some ideal world, it would grow into a useful source of information on most every topic involving global warming.

The idea was it would provide a sort of reference to allow people to learn about and understand whatever they wanted in a straightforward manner. They wouldn’t have to search through dozens of old blog posts, read a bunch of different papers or look in thousand page reports. Instead, they could easily navigate to whatever issue they wanted to know about.

But the hockey stick lost a lot of its place in the public eye, and I’ve come to suspect no matter how clearly or reasonably things may be explained, it won’t matter most of the time. Beyond that, I expect my efforts would be demonized and belittled by the very people who ought to be supporting the concept.

It just doesn’t seem worth it. What I keep coming back to is this: Al Gore could write a check and have my ideal website built within a year.

I’ve found this thread very interesting

I’ve seen it suggested that the reason for the apparent ‘slowdown’ in rise in temperatures is variations in ENSO

http://blog.chron.com/climateabyss/files/2012/04/1967withlines.gif

http://blog.chron.com/climateabyss/2012/04/about-the-lack-of-warming/

AIUI the GCMs cannot forecast ENSO cycles, but I don’t think this necessarily affects their ability to forecast the forced response.

Is there an argument for a ‘looser’ noise model because the GCMs don’t account for ENSO and we have had an unusual mix of El Nino, La Nina or neutral conditions over recent years that has conspired to flatten the temperatures ?

Alternatively would it make sense to ‘adjust’ the MMM to take into account ENSO and then have a tighter noise model ?

@Carrick: Maybe you missed my previous question (Comment #104045). I’m really very curious, since I’m working on a paper that’s basically a response to Loaded Dice. If I’m misinterpreting him entirely, I’d like to know.

Brandon, life is a lot easier if you learn to forgive and forget rather than holding grudges against people for years and years. I have no idea what you’re even talking about regarding my comments – long forgotten issues on my side. I certainly don’t believe I ever claimed any expertise on “R2” – I’m not a stats guy, not my issue. And MBH 98, really? I think we need a version of “Godwin’s law” of climate discourse regarding that – if you bring up MBH 98 in a discussion, you’ve already lost.

As far as people not responding to other people’s efforts to explain things to them – I seem to recall some examples in just the past few weeks involving Brandon Shollenberger that if I actually cared about I could look up and cite back at you. Sorry Brandon – it would require effort on my part which, as in almost all such blog discussions, the recipient would very likely ignore or not even notice.

Here’s the thing that really tweaked me about diogenes – this is not the first time somebody has gone around claiming people were doing Amazon reviews of Mann’s book a month or more before it was actually published. I’m sure it was an honest mistake to look at the hard-back publishing date and not notice the earlier Kindle date. But a real scientist seeing the dozens of reviews before the apparent publication date would not have jumped to the conclusion that there was some sort of scamming going on, but would have checked to see if they had made a mistake in looking up the publication date.

So many “skeptic” arguments fall into this same pattern: they make a mistake, and then seeing data (dozens of reviews before the apparent publication date, in this case) that is in dissonance with their mistake, they assume the explanation of the conflicting data is not that they might have made a mistake, but that other people were doing something wrong.

Humility in realizing you can make your own mistakes. Forgiveness of other people’s honest mistakes. Try them some time, they make life a lot better.

Arthur, while you may have not been a part of the astroturfing of the Mann book, it is documented here http://www.bishop-hill.net/blog/2012/9/7/michael-mann-and-skepticalscience-well-orchestrated.html

Brandon:

Which is the point I was trying to make about a sum of errors of the same order. Fixing one of them changes the answer but

“doesn’t matter” until you’ve fixed them all at that order.

Once you fix enough of them, it’s not a hockey stick.

A website would only be useful for the deniers who still like to claim that Mann didn’t make errors, or the errors didn’t matter, or he didn’t behave in an irresponsible manner both while writing up that paper and afterwards.

Wayne, sorry I did miss it. I was thinking of this paper which is I believe the paper you were reviewing. I know what he proves isn’t all that spectacular, but his interpretation is firmly that variability in climate is increasing. Tamino has some thoughts on that here which sound like they match up with what you are saying.

Arthur

As far as I can see, brandon said he has no respect for you, he has a reason and he remembers what it is. You don’t remember what you did. But that’s hardly surprising. You were probably unaware of his evaluation at the time and you probably don’t think whatever you did was something that would cause someone to lose respect for you.

But responding that Brandon shouldn’t hold grudges is idiotic. Failure to hold you in high esteem is not the same thing as holding a grudge.

I can see why those who know MBH 98 is flawed and don’t want to admit it would like this rule established. If defenders would just admit it’s wrong, the discussion could be dropped. But trying to have the last word by suggesting the subject of the problems in MBH should somehow be verbotten is ridiculous. FWIW: if any rule is required, it is that whoever suggests topics they don’t want to discuss should be ‘godwin-ized’ has lost. In that case, you would have lost. (And subsequently I would lose for suggesting your suggestion that what you want to say should be off-limits! 🙂 )

Some “alarmist” and “true believer” arguments also follow the same pattern. So.. yeah. People do this. On both sides.

I understand that diognese’s mistake– which involved an accusation toward you– bothers you. That’s fair enough. But it’s rather foolish to think that this shows that the mistakes made by people you call (in parentheses) “skeptics” are qualitatively different from those you would call something else (and who happen to be in the group you might consider “the one Arthur falls in”).

You are not only making a huge number of generalizations on practically no basis, but you need to look at the mote in your own eye.

Good advice. But do remember that sometimes the pot needs to be introduced to the kettle. Seems to me your own life could use some improvement too.

MrE-=

Thanks for the link

http://www.bishop-hill.net/blog/2012/9/7/michael-mann-and-skepticalscience-well-orchestrated.html

That’s rather amazing evidence of orchastration of those book reviews. While I believe Arthur when he says he merely read the book and posted a review, I can see given the evidence from the SkS forum files, people will tend to assume almost everyone posting those early reviews was involved in the SkS-Mann push to flood Amazon with solicited reviews.

I can also see why the fact that the Kindle version was available for reviews would not be taken as evidence that the reviews was not solicited– because that’s discussed in the SkS forums.

I can understand Arthur being miffed for being mistakenly lumped in with the masses of people who got pdfs prior to publication did post solicited reviews the moment the reviews window was opened at Amazon, but it’s pretty clear Arthur is mistaken to believe that looking up the Kindle publication date clears anyone of the accusation diogenese made. It appears people were acting collectively to post favorable reviews and the campaign was being conducted by Mann and John Cook.

This by the way is the text indicating that the campaign was geared up to start bombarding Amazon with reviews after the kindle publication date:

and later

Since the campaign specifically advised people to post reviews immediately after the kindle version was available, and evidently, Amazon was bombarded with reviews immediately after it was available, the fact that a review came shortly after the Kindle version was available is not evidence that a person was not involved in the Mann-Cook-SkS astro-turf book review operation!

Kenneth-

Note I wrote this:

I don’t think the issue is an se/sd one. The arimafit is supposed to give the sd of trends based on information from 1 run. There is no practical difference between se/sd here because we have only 1 run. The se/sd distinction becomes important when we are estimating the uncertainty in the mean based on N replicate samples (se) and contrasting that with the variability of individual samples around that mean (sd). For uncorrelated samples the uncertainty in the determination of the mean is se~sd/sqrt(N).

But with time series, the the ‘var.coef[4,4]’ above is supposed to give you the true variance for repeat realizations of the ARIMA(1,0,1) with known coefficients. To the extent that it does not the method is biased.

For what it’s worth: lots of things are biased in statistics. For example: The sample standard deviation based on “N” samples is biased low relative to a true standard deviation. (The sample variance is an unbiased estimate of the true variance. These two facts are related because any x can be decomposed into E[x]+x’=x. If you do the math you will see that E[x^2]=E[x]^2 + E[x’^2]. Since x’2 is always positive, 0<E[x’^2]. So, E[x^2]>E[x]^2. Substitute the sample standard deviation for ‘x’ and you will see that if E[x^2] is unbaised, E[x] must be biased low.

Owing to this bias, so we need to use the student ‘t’ distrubution for t-tests. These values approach gaussian as the number of samples increases– but the fact is, lots of perfectly ‘respectable’ methods in statistics will be biased in some way.

I’m pretty sure the variances coming out of ARIMA fits are biased a little low. I haven’t done the monte-carlo for zillions of things– but I’ve done for some. And you’ll find it’s biased a bit low.

I don’t know if there is any recommended method to deal with this bias. I can think of fair ones– but the fair ones shouldn’t permit cherry picking. (A fair method I can think of is to take the best recommended fit, run montecarlo for those parameters and find the biase– and inflate using that bias. It’s not perfect– but at least you can’t just pick a periods you “like” to get uncertainty intervals you “like”. )

SteveF,

–

I made a mistake in my original graphs. The data I had on hand to construct the graph was designed to predict monthly UAH temperatures, not to generate trends. Also the AR3 trend prediction I got from work I did a few years ago – I don’t think it was well chosen as an average model prediction. Anyway, after revisiting the problem I see that I can’t use the previous months actual temperature without compromising the contrast between observed and modeled trends – so I am no longer using any actual temperatures in the model. So the predicted trends are based soley on AR3 average model linear trend projection, ONI index and TSI anomaly. Second, the average model projection was 0.85 C rise between 1990 and 2030 which equates to 0.21C/dec. so I changed that as well.

–

http://i161.photobucket.com/albums/t231/Occam_bucket/AR3proj.gif

–

–

You can see from the graph below that my original conclusion would be qualitatively unchanged. I would add though that the observed trend since the early 1990s (the most statistically significant) shows greater warming than what would be expected from AR3, i.e. 0.17C/dec observed vs prediction of 0.15C/dec with ENSO and TSI taken into account. However, that difference changes already from the mid 1990s so I don’t read too much into it yet and the recent short term trends don’t have enough history to waste time pondering.

![]()

–

–

http://i161.photobucket.com/albums/t231/Occam_bucket/LTModelVsObsTrend-1.gif

–

Dave E. (Comment #104112),

Thanks, but what I wanted to know was adjustments involved with how “ENSO and TSI taken into account”. In other words:

.

1. what TSI changes were assumed,

2. how were those TSI changes converted into temperature adjustments (this would seem to implicitly assume a specific short term climate sensitivity value),

3. what ENSO index was used, and

4. how was that ENSO index value converted into a temperature adjustment?

SteveF,

See my previous explanation, it has a formula.