In comments Ed Barbar wrote

Provided that the noise is random over time and provided the screening time period is different than the time period used to determine the “m†in w=mT+b, it seems there should be no added bias. Yes, you might exclude some perfectly good trees, and include some bad ones, but the noise will tend to cancel and not bias “m†in any particular direction.

You will see I agreed, and then Carrick agreed with one caveat:

Lucia hit on this a bit, but for temperature proxies, the noise is highly correlated. So you’d need to widely separate the period where you’re correlating to and the period where you’re computing m. Otherwise, I agree with your comment too.

I hadn’t thought of the need for separation, but immediately agreed Carrick was correct about that. I will tentatively suggest that if the ‘noise’ is red, we can estimate the required gap between the ‘screening’ period and the ‘calibration’ period as being exp(0.1)/exp(R1) intervals where R1 is the lag 1 correlation coefficient for your time series. This interval reduced the correlation between the closest points in the two periods to 10%. More refined calculations would be possible if one stated some more specific requirement. Note that using this rule, if the lag 1 correlation for noise was 0.9, you would need a gap of roughly 22 measurement points between the period during which you screened and the one in which you computed the calibration (m) that permitted you to back out the best estimate of the temperature from something like ‘ring widths.

Continuuing with the same general idea of examining hypothetical effects using synthetic data, a sinusoidal temperature as the “truth” we wish to detect from our proxies (i.e. ‘treenometers’) I’ll compare results where:

- The ‘proxies’ consist of 1000 ‘treenometers’ with R=0.25 relative to the true temperature plus 1000 ‘not-treenometers’. So it’s a case one might imagine would benefit from screening.

- The “noise” in each treenometer has a lag 1 autocorrelation of R1=0.9. This differs from previous examples which all had R1=0. I introduced this to permit discussion of the point Carrick made. Note that having a large lag 1 autocorrelation aggravates the bias introduced by screening.

- In one case I will both screen and calibrate during the same period. This is the method that introduced bias that we’ve all been seeing. In the second case, I will screen during the final uptick but calibrate during a much earlier period. This method will be seen to be unbiased– provided we ignore the information from the proxy reconstruction from the ‘screening’ period and for a period just prior to the ‘screening’ period. (Ignoring information during the screening period is fine because we do have data during that period.)

Biased case: Screening and Calibration period identical

Above, the screening period is shown with vertical black lines; the calibration period is shown with vertical dashed blue lines; these overlap.

Notice that in this case, the green trace which represents what an analyst using this method would report as the estimate of temperature in the past is severely biased. In particular, the peaks and valleys are squashed. If the analyst was unaware that screening did this, they would conclude the reconstruction shows that current temperatures exceed those that occurred in the past — and by a humongonourmouse amount. (Mine you: I am picking my parameters to make the biases visible. The magnitude of the potential bias in any paper will depend on the properties of their treenometer and the details of what the analysis did. But this does show what screening tends to do. If you screen, you should estimate the magnitude of this effect on your results. Better yet, avoid methods that introduce this problem.)

Unbiased: Wide separation between Screening and Calibration period

Following Ed Barbers very good suggestion, I performed the calibration.

Above, the screening period is shown with vertical black lines; the calibration period is shown with vertical dashed blue lines. Based on my rule of thumb described above, for an lag 1 correlation coefficient in the noise of R1=0.9 to avoid appreciable bias the calibration period in which we compute ‘m’ relating tree ring width to temperature should be separated from the edge of the screening period by roughly 22 years. This means my calibration period should be outside the region indicated by the red line. Note, I separated the two by nearly twice that required amount.

When viewing the reconstruction, we now see that for all ‘years’ prior to the one marked by the red line, the screened and unscreened reconstructions match each other and also match the ‘true’ temperature rather well. There is no visible bias. However, after the red line, the screened reconstruction (green) is biased relative to the ‘true’ temperature. But this bias is ok provided we simply ignore information from the screened reconstruction during the ‘screening’ period and the 22 year buffer period prior to the buffer period. Once we do that, we could compare the reconstruction in the past to current temperatures. Both reconstructions would give us the correct result: The temperature in the current period is not an all time record (in this toy problem.)

So, it is possible to use screened data. However, when doing so one must first recognize that screening can introduced bias and select subsequent procedures to avoid introducing that bias.

What’s next

I keep promising that I will show a case where screening can reduce errors in the reconstruction. And later I’ll talk about how we might further improve screening by examining the distribution of the correlation coefficients to identify the ‘not treenometer’ in the batch without simultaneously removing the ‘treenometer’ that contain a signal, but had low correlation coefficients with temperature during the screening period. I promised that earlier, but Ed’s suggestion was a good one, and so I wanted to show it.

Of course: In true climate -blog Gergis fashion, I will claim that I thought of Ed’s suggestion before he mentioned it in comments. But I hadn’t done it, or mentioned it etc. And, in fact, I did in a sort of casual way while doing something like mowing the lawn or exercising at the gym. And then all of you can decide whether you believe I thought of it– just as you can all decide whether those who claim to have thought of such things before the first person who was brave enough to suggest the idea did so in public.

In this case, the person who seems to have suggested this in public first seems to have been Ed. So, I think he deserves credit. (After all, even if I did think of this before he did, if he hadn’t mentioned it, I might have forgetton the idea anyway. That’s what happens to lots of ideas I get while mowing the lawn.)

So you have demonstrated that you can avoid a calibration bias by screening and calibrating independently. It doesn’t appear that the screening had much of a beneficial impact in this case. What is the distribution of good/not-good in the screen output here?

Actually, if you squint hard, there is a very slight benefit to screening here. The unscreened line contains a little more noise and because the noise is red, that noise results in mis-estimating the ±95% range of temperatures in the proxy reconstruction.

To see this, look at the violet and green horizontal lines at the top of the oscillations. Notice the green peaks on avearge match “real” (black dashed) a little better.

I can discuss distributions of what was screened out vs. what was retained later. But for now, this is a fairly powerful method to improve the screening. It’s also easy. So I thought it was better to show this than to discuss the ‘tweaks’ you might do to improve the screening beyond this.

I think it might also make people whose inclination is to believe that screening by correlation must work be willing to see that it is biased if you do it wrong (that is– by screening by correlation and calibrating in overlapping regions.)

How do you estimate the chance that you are just (un)lucky with the proxy and the correlation with temperature is just due to chance?

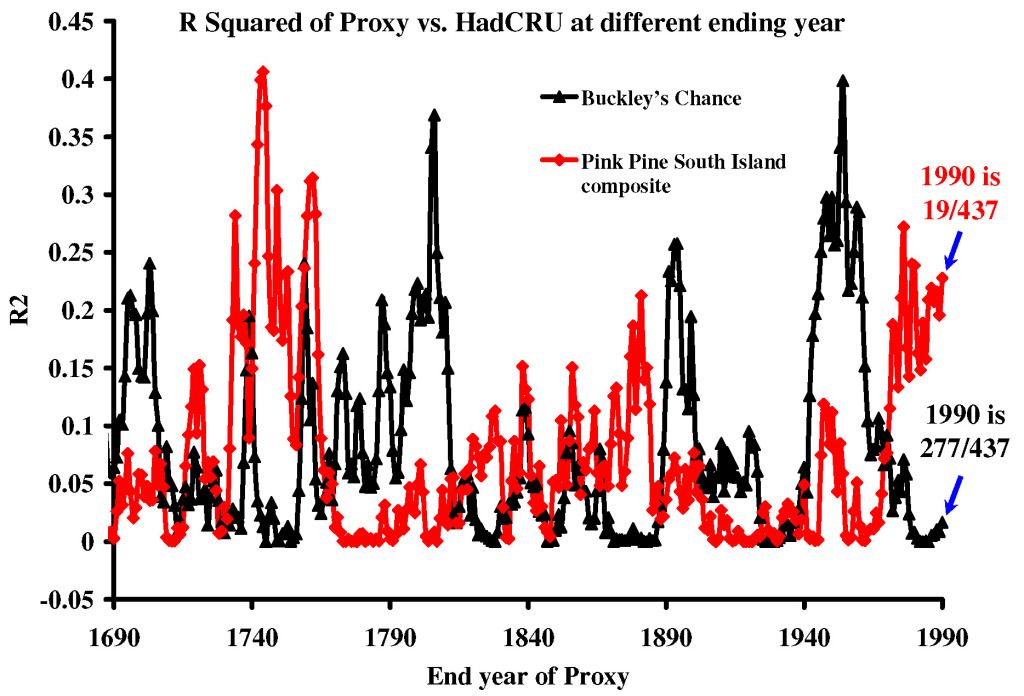

I had a look at the Gergis proxies and here are the R Squared correlations with HadCRU based on end year. There are 437 different starting dates for the 71 year (1920-1990) calibration line-shape.

The end year of 1990 is not always the best by any stretch of the imagination.

http://i179.photobucket.com/albums/w318/DocMartyn/RsquaredofProxiesvsHadCRU.jpg

We can’t do this with you ‘toy’ sine waves, but can we do it on real proxies?

Doc–

For the toy problems, I’m currently just throwing away 56%. I’ll talk about how one might better screen later.

But I think the a better question might be “How do you estimate whether a negative correlation was sufficiently low to justify assuming a tree (or series) contains no temperature signal?”

Remember: All these trees (or proxies) were initially included because someone suspected the should have or might contain signals. They did not just pull out every possible time series for every possible thing in the world going back 400 years and sift. They 67 proxies were examined because someone thought they might (or would) be correlated with temperature.

Lucia, I mentioned in an earlier thread that there look to be discontinuities in the Gergis proxies and that when corrected seems to remove nearly all of the autocorrelation. Status of stationarity needs to be determined before autocorrelation can be calculated. I haven’t had time to delve further unfortunately.

Agreed. The reason I use toy problems is to illustrate the qualitative effect a a particular analytical choice without worry about additional issues that might inject additional uncertainty.

‘lucia

But I think the a better question might be “How do you estimate whether a negative correlation was sufficiently low to justify assuming a tree (or series) contains no temperature signal?‒

That is indeed a much better question and one to ponder.

(*smiling) How long till one applies this method to actual tree data?

MDR–

Do you mean me? Or someone else? I may never apply it because that’s not the main point in showing the potential biases. The purpose in showing biases that arise from screening is:

1) To show they really do exist.

2) To show some circumstances in which they exist and so

3) be able to explain why people who do screen based on correlation need to either fix their method to avoid the bias or estimate the uncertainty introduced by screening when interpreting their data. The latter would involve a) doing a rather complicated calculation to estimate the magnitude of the biase and correct and estimating the uncertainty in their correction and showing the new larger uncertainty on their graphs.

We can get a defensible answer to that question. Of course, since it’s statistics we need to make assumptions. But it can be done.

Lucia:

I think this is a good approach. Again using the spectral method you can get away from strictly red-noise.

If I had to pull a number from my hat (this is a SWAG), use 30 years.

Very nice demonstration.

Sean:

Lucia’s addressed this, but this case was constructed to verify that you don’t introduce bias using Ed Barbar’s method not to demonstrate the putative advantages of screening.

Now you can go back and throw in a certain percentage of “non-temperture proxies” and see how robust your technique is against this.

Lucia,

Very nice.. and I do think Ed came up with the idea first.

.

But what happens when 60% or more of the trees really contain no information at any given point in time about temperature (just reddened noise, no temperature information)? And more importantly, what happens if the specific individual trees which carry temperature information (however noisy that might be) changes over time? (eg. divergence)

.

I don’t think correlation based selection can ever be valid except when the assumption that all the trees respond to temperature is correct. But even if the trees don’t satisfy that assumption, using all the data will generate a valid reconstruction every time.

SteveF–

In my example, 50% of the original sample contain no information about temperature at all. the other 50% contain information — but each is noisy.

Well…. everything goes to heck!

Having some trees that don’t respond to temperature can be ok– provided your screening method doesn’t make it seem like they are responding .

Its usually safer. The analyst is much less likely to trick themselves.

There are at least hypothetical circumstances where screening could be justified. Similarly, even in a lab, there are circumstances where the diagnose a data point as being a contaminated outlier and throw it out.

For example, with LDV, we know that most velocity measurements are from light scattered by a particle passing through a sampling areas. But some “measurements” will be shot noise that happens from time to time. The shot noise will frequently appear to exceed the speed of sound or be many, many, many, many standard deviations outside the spread of all the other data. Say you take 10^4 data points and 1 point is 20 sd’s out there. There are rules you can invoke to justify pitching that data point out. There are things you can do to persuade people it’s ok to pitch some data– and yes, you are doing it based on the data itself.

We could do similar thigns with the population of correlation. The problem is: It’s harder because correlation goes between -1 and 1. But, at least hypothetically there are justifiable ways an analyst could either throw out a tree or — more likely– a stand of trees even under the assumption that individual trees (or stand of trees) response to temperature is invariant over millenia and the behavior during the screening (and calibration) period is typical.

My favorite from my work days was: That result is wrong, run it again. Too which I had several responses, the first of which was: If you already knew the answer, why did you bother to submit a sample? The next was: Submit another sample and I’ll run that, but I won’t analyze another aliquot of the same sample.

When control charting, if you get an out of control data point, you absolutely need to know why that point is outside the range. There are lots of possibilities starting with the process actually being out of control. But you may have underestimated the process variability or there may actually have been a problem with the measurement, contamination during sampling, calibration drift, etc. But the one thing you can’t do is make measurements until you get one that is inside the control limits and use that instead of the out of control point.

Lucia,

Thanks.

Throwing out clear outliers is justifiable under a lot of circumstances, of course, but you usually have solid arguments (like a data point 20 SD from the mean almost certainly isn’t right!) when you do that.

.

What I guess rubs me the wrong way is wading into a cesspool of data and declaring some valid and some not, even though you have absolutely no idea why. It is the lack of understanding of root causes which suggests the entire enterprise is highly doubtful. “CINC” (correlation is not causation)

DeWitt Payne (Comment #98543),

I have essentially identical experience; improper data rejection is rampant across a wide range of industries. It is easier to just run another test than piss off the boss with an out-of-control data point. It is (of course) almost always the boss who is at fault for making the generation of correct (but bad) results have negative consequences.

Another example.

In looking at Icoads data we have the ships location over time.

When the change in location indicates a ship travelling at 1000 knots well, we know something is amiss.

Similarly, when the most influential tree in the world shows 6 sigma growth and plant biologists tell us that’s not physically possible, well we would remove such a tree. Unless, of course, we liked the fact that it corellated well with temperature.

Opps.

SteveF

That rubs me the wrong way. But also, using what amount to self contradictory assumptions or procedures that just don’t work.

Also: not admitting that you just can’t “fish”. The problem with some of the correlation screeing is it resembles fishing a lot more than identifying and tossing outliers from data that collected (or even selected) based on what were considered valid criteria. If you want your results to have a decent connection to reality, if you collect (or select) data based one what you consider valid criteria, afterwards, if you are going to screen for outliers, you test to see if you can prove it’s inconsistent with the hypothesis that it contains a signal.

In the case of somethign like Gergis, if the 67 proxies they started with were chosen because someone thought there was a good reason to expect them to be correlated with temperature, they shouldn’t throw any away unless it is so strongly negative that it is not consistent with containing a signal. In fact– if they were going to screen on R, they should have

*) Under some statistical model for the noise (which would have to be justified based on the data) find the distribution of Rs they would expect a population that was pure noise. (This is easy for white noise. For R computed based on 100 data points in the time series, you expect to get roughly 95% of the data with R’s between t=0.196 and -0.196).

2) Now, since initially they chose these because they expect them to contain signal, and throwing ways anything with a value of R that is even a tiny bit over 0 causes bias without reduding noise, the want to only throw those they are nearly certain are ‘noise’. So, in the first place, that means they should only throw away thigns with R<-0.196. But…

because they have 67 data, even if something is just borderline, they should expect have about 3 or 4 data with R outside the range ±0.196. So, to decide what to pick, they might find the t value associated with something much stricter!

But at least they shouldn’t have thrown anything out unless Rmin was less than -0.196. But instead, they turned this on it’s head and required each to “prove” itself. So, what they did was equivalent to requiring it to be greater that 0.196. That’s nuts!

The latter is the sort of thing you might do if you are doing in the first step of some sort of exploratory data mining. Wallmart might do that if they were fishing to figure out what factors caused people to buy ice cream or something. (Then later, you– or Wallmart– would do a repeat experiment with truly out of sample data to figure out if the stuff you fished for was real.)

Can this method really work though?

“provided the screening time period is different than the time period used to determine the “m†in w=mT+b”

I’ve said this over and over, to little effect, but the point of Gergis’ screening is to make sure she can determine m. If she can’t, she can’t use the proxy. As in can’t.

So screening on another period may have some other virtue, but doesn’t do the job. And it’s not clear what job it does do.

In practice, there aren’t spare periods available. You need every bit of overlap time for calibration and verification. And having a gap just makes it worse.

I have said that there is a real screening fallacy. You lose independence of the information in the screening period. That’s still a problem here.

Yes. This you really have said over and over. And they way she does this is wrong.

I am unaware of your having brought up the notion of using different time period.

Uh… so you are saying this process doesn’t do the job?. But also claiming you’ve said it does to the job over and over? And you are saying both those clearly contradictory things in adjacent paragraphs?! You’ve out done yourself.

But in any case, it’s little surprise that people don’t understand what point you think you are making.

Screening on a different period from the calibration does “do the job” if “the job” is to try to “fish” for the “true treenometers” without biasing your estimate of temperature in the proxy reconstruction. It may not be the best way to do it, but it does do that job.

I realize this is why people might prefer finding a different way. But calibrating and screeing in the same or overlapping periods will result in a reconstruction that is biased relative to reality.

You’ll have to elaborate what you mean by “lose independence of information” and clue me in on what the problem is here. But because if you’ve said this somewhere, I don’t know where that is, I have no idea how to use my googlefoo to find your discussion and anyway, I don’t plan to have spend a lot of time using my google-foo when you could just explain what you mean.

I’m perfectly willing to believe that even if we do what I did above which solve one problem others still remain.

Nick, what do you think of the R2 values for fitting the 1920-1990 calibration period over the range of data?

http://i179.photobucket.com/albums/w318/DocMartyn/RsquaredofProxiesvsHadCRU.jpg

It is quite clear that previous periods of growth in the proxies are a better match for the ‘unprecedented’ warming of the modern era.

Why can’t I see a drop in temperature following Mount Tambora (1815) or Krakatoa (1883) ?

Now call me Dr. Stupid here, but I would have thought the majority of data sets should respond to events in the Southern Hemisphere that resulted in cooling of the Northern Hemisphere. Why can’t I see a 1+ SD event following the Mount Tambora or Krakatoa in any of these wonderful temperature proxies?

Come on Nick, explain for once.

Doc–

Could you explain how you define “R2” for your proxies? In words or algebra. Because I don’t know what that is supposed to mean.

Lucia,

“And you are saying both those clearly contradictory things in adjacent paragraphs?!”

They are not contradictory at all. Gergis actually (in effect) calculates m. Significant values she can use and proceeds. Others she can’t. Screening on a diferent period wouldn’t help that.

But any method will, one way or another, be unable to use a proxy that doesn’t have some observable relation to temperature. There’s no sensible arithmetic you can do. In a PCA method, for example, the proxy will be near orthogonal to the retained eigenvalues. Whether you drop it in advance or not, it will have no influence. That was my earlier point about screening and weighting being little different.

“You’ll have to elaborate what you mean by “lose independence of information†and clue me in on what the problem is here. But because if you’ve said this somewhere, I don’t know where that is,”

It was in my first comment here on the topic. And in my first comment at CA, where it was deemed too obtuse to respond to. But it’s obvious. If you screen on concordance with temperature, you’ll get concordance with temperature in that period. That’s not independent information.

Sorry Lucia, I used the RSQ function in Excel which is the square of the Pearson correlation coefficient through data points of HadCRU 1920-1990 vs the proxies in 71 year blocks.

Doc,

About R2, what Lucia said.

On volcanoes, I earlier linked this paper on the topic.

“Nick,

It was in my first comment here on the topic.

If you screen on concordance with temperature, you’ll get concordance with temperature in that period. That’s not independent information.”

Are you saying that one gets information in the pre-secreened period about line-shape, BUT, that this cannot be calibrated using the relationship between line shape and temperature in the screening period?

Nick, answer the SPECIFIC question. Why can we not observe a large drop, the multi-year recovery in any of the temperature proxies for the two largest volcanic eruptions in the last 1,500 years?

Don’t link to a paper that is speculative bollocks; explaining why your thermometers don’t respond to a temperature signal due to ‘the effects of diffuse radiation’ is just displacement activity.

O.K. So now your trees respond to temperature, water, fertilizer, all changes in the local biosphere and to changes in ‘diffuse radiation’.

Very good.

So corals respond to the sonic booms generated by volcanoes in a manner that exactly the same size as the changes in temperature, but of a different sign. 18O suddenly change their fractionation properties due to dust.

In fact, all proxies ignore volcanic cooling events, but faithfully respond to all other cooling events.

Doc,

No, I’m saying that when you calibrate, you sacrifice independence; it’s part of the deal. Suppose I have five thermometers, and I want to get a very good measure of temp in a room. Then I might take five readings, and maybe I can learn more than I would from one.

But suppose I believe that one of those thermometers is more reliable and calibrate the others to it. Then I might have four better thermometers for other purposes. But there’s no point any more in using them all to measure the temp in the room.

My CA comment on this linked above is here.

Nonesense. We know that because the time periods are short, her computed ‘m’s are biased high relative to the real average value of ‘m’ for the batch she kept. And the reason they are biased high is that she hasn’t merely thrown away bad proxies, she’s kept some bad ones that were accidentally good and thrown away good ones whose noise made them look bad in that period.

If she wants to get an unbiased estimate of the true value of “m” for proxies she screened, she needs to use a different period.

Of course not. But that doesn’t mean you can screen out and then compute ‘m’ using the period you used to screen. It doesn’t work.

Doc,

“Nick, answer the SPECIFIC question.”

Sorry, I don’t have answers for everything. As that paper says, it seems to be a puzzle.

If you want a good measure of temperature in a room use one thermometer calibrated to a known standard within its certified range of operation. You will then be able to quote temperature within a known tolerance. Belief has nothing to do with it.

http://www.npl.co.uk/publications/guides/comment/comment-temperature-guide

Nick–

I’ve looked at both comment you link and you are being obtuse in both.

As for this

This is just stooooopid. Almost unbelievably stooopid (but I’ve know other stoooopid people who have concocted similarly stooooopid idea.)

You calibrate the thermometers in an external calibration. You don’t calibrate them using data from the experiment you are measuring.

If you external calibration says one thermometer is good and the others don’t work, then you can use that.

Lucia,

“But that doesn’t mean you can screen out and then compute ‘m’ using the period you used to screen.”

I’m not sure we aren’t saying the same thing here. What I’m saying is that having in effect fitted m to match the observed temp, it isn’t meaningful extra information about the gradient in that period.

“If she wants to get an unbiased estimate of the true value of “m†for proxies”

But she’s not. She’s trying to calibrate, which is quite different. She needs a best estimate of m for each proxy alone.

Lucia,

“You calibrate the thermometers in an external calibration. You don’t calibrate them using data from the experiment you are measuring. “

Where did I say anything about using data from an experiment? All I’m saying is that you have five scaled thermometers. If you have one that you believe is more accurately scaled, you can calibrate the others to it.

But OK, a more homely example. You have five clocks. The time was set a long time ago and has wandered. You check them all and get some sort of average when you need.

But suppose you believe one has held its time better. So you set the others to match. Reasonable, but you’ve in effect put all your faith in one clock. The others don’t give you independent information.

Doc–

So…. it’s the correlation coefficient between Hadcrut and a proxy computed over 71 years– but then sliding? So is the year shown in your axis the final year? I still don’t know what you are doing. HadCrut is from 1920-1990. Are you saying that for some reason, you took a proxy from say, 1640-1710 and computed the RSQ with HadCrut from 1920-1990? Why? What’s that supposed to tell us?

First: This isn’t remotely what Gergis did.

Second: This doesn’t improve your estimate of the time.

Third: You con’t claim it improved your estimate of the time.

Fourt: I have no idea what you plan to do with these clocks later. But it’s hard for me to imagine a experimentalist who thought this was “reasonable” in most experiments where you needed an objective measure of time.

Nick

First, she’s trying to calibrate her method of figuring out the temperature from the ring widths. To obtain a good calibration, she needs an estimate unbiased estimate of the true value of ‘m’. She will get a better one by computed the ‘m’ over a period that differs from her screening period.

If you would finish your thoughts and to connect “she’s trying to get a calibration” to include “she’s trying to get a calibration to determine Y based on X” you might be able to unbefuddle yourself. When you calibrate, you want a calibration that can be used to obtain an unbiased estimate of Y based on X. For Gergish’s method getting a bunch of ‘m’ cherry picked to keep those that are higher than the correct value and tossing out those that are lower than the correct value is not a good way to get a calibration. It’s bunk!

“lucia

Are you saying that for some reason, you took a proxy from say, 1640-1710 and computed the RSQ with HadCrut from 1920-1990?

Yes

“Why? What’s that supposed to tell us?”

The conclusion was that the modern period was unprecedented, which I take to mean that it has never happened before.

Now if the correlation with the fastest ever temperature rise gives one a better fit to the a proxy some 500 years ago then it means a number of things, such as

1) Such temperature rises have happened, many times, in that local in the past.

2) The proxy may not be responding to temperature.

3) You cannot average proxies in the per-calibration period because some have seen seen big rises in some periods and other have not. Such heterogeneity means that a huge number of proxies need to be acquired.

Doc, It would be fun to reverse all the proxies 🙂 Isn’t there a satirical journal Nurture or Neuter to publish in?

Doc–

I honestly have no idea what we learn from your graph.

Nick:

You sound more and more like Claes every day. I know you really didn’t want to hear that. 😉

My theory is this is some cognitive disorder that numericists develop from writing too many Runge-Kutta routines where they suddenly have an “ah ha!” moment and think, even though they’ve never taken any data, they are now experts at measurement theory. /stinker

Whether your statement is true depends whether all of your clocks have the same temporal resolution—-

Suppose your “standard” updates every 1-second (e.g., GPS pulse-per-second), and your “secondary clocks” update on each CPU cycle (CPU counters). Let’s suppose these secondary clocks are associated with some type of digitized data stream.

The secondary standards obviously provide you auxiliary information (e.g., microsecond accurate time-stamp for events), but they remain accurate because they are synchronized to the GPS clock.

Interesting how you can get independent information in spite of leaning on one time standard, heh?

I would like to see tree ring series that span the MWP and LIA to the present, screened against the MWP and LIA. Then see what the series have to say about modern temps.

OK, maybe there are trees that at least are still alive that experienced the LIA. 🙂

Or maybe some dead ones of the same species in the same area could be used to hit both the MWP and LIA. That is a current practice.

Re: Carrick (Comment #98574)

Interesting point. In ocean profiling we often use a combination of sensors which (allegedly) measure the same quantity. A typical example would be a macro-conductivity (slow response, good stability) and a micro-conductivity (fast response, bad stability) sensor, where the microconductivity cell is typically “calibrated” to the macro unit. There is independent information from both cells, and using them together you can infer both a background state and the local turbulent dissipation. I’m reasonably sure that Nick was not thinking in such concrete terms, but such potential differences between measurement devices do seem relevant to the proxy selection problem.

Carrick,

“You sound more and more like Claes every day.”

Oddly enough, we used to do similar stuff. We pretty much had two FEM-based PDE solvers in the market. At one stage, almost the only two.

Suppose your “standard†updates every 1-second

No, I don’t suppose anything like that. I’m thinking clockwork. 1850.

Lucia

First: This isn’t remotely what Gergis did.

I’m talking simply about the effect of using a refence for calibration.

Second: This doesn’t improve your estimate of the time.

That’s my point

Third: You con’t claim it improved your estimate of the time.

Exactly

Fourt: I have no idea what you plan to do with these clocks later.

Tell the time. I have a big house (in this example).

Nick:

Boring example then. Not anything to learn from it. /outahere

Here is McShane and Wyner making the same “obtuse” point:

“Second, the blue curve closely matches the red curve duing the period 1902 AD to 1980 AD because this period has served as the training data and therefore the blue curve is calibrated to the red during it (note also the red curve is plotted from 1902 AD to 1998 AD). This sets up the erroneous visual expectation that the reconstructions are more accurate than they really are.”

Oliver:

Thanks… I borrowed this one from my own experience. (That’s how I timestamp with my sensors.)

As you can imagine, I could go on and on about that one (like how the main controlling variable for the secondary reference is ambient surface atmosphere temperature, interesting how that comes back into play here).

There is a direct correspondence actually. Ice cores have poor [temporal] resolution but are very good temperature proxies, whereas trees are poor temperature proxies but have much better temporal resolution.

So you can imagine a network where the “primary” temperature information comes from temperature proxies (and long-duration real temperature measurements) that you “infill” using secondary lower quality proxies. This is actually what Moberg (2005) did, and how he avoided the descaling issues associated with Mann 2008.

(You can argue over whether he selected the right proxies for his “primary” network, but implementation details not relevant to the question of whether the methodology is sound.)

Lucia,

“For Gergish’s method getting a bunch of ‘m’ cherry picked to keep those that are higher than the correct value “

I don’t see that that is true. She chooses by significance of m, not by value. Or, if you prefer, by correlation ρ. In fact m is a dimensioned property of each proxy. Different proxies can have different units.

I think the bias has it’s origin in the purpose or motivation of the works. If the purpose of the work was knowledge of climatic (temperature)conditions of the past, and you suspects a proxy (trees growth rate) may hold useful information for you, the first priority would be to find out HOW that proxy reacts to change in temperatures or other climatic changes. In finding out HOW, (tree)proxies not correlated with a pre-defined expectation will be not less useful as one correlated.

When one can document HOW a proxy or group of proxies responds, one might gain some useful knowledge of the past. The very heart of the problem is confirmation bias, and circular reasoning. When one sets out to show that the earth is in an unprecedented climatic condition, as real climate scientists does, the bias is already out of the starting blocks.

This is a distinction without a difference. Picking by correlation ρ and then computing ‘m’ in the same period where you screened by correlation makes your errors be correlated in a way whose computed value of ‘m’ is larger than the true value for that tree.

I don’t know why you don’t see this is true. But it is true. Roman showed the linear algebra. I’ve showed simple examples. You could, if you wish run examples yourself and see that doing it this way, you pick the trees at least in part based on the error arising from statistical imprecision not their true m’s.

Do you not understand that the sample values of ‘m’ or ‘R’ computed over a finite span are not the “one true m or R”? (You seem to understand that well enough when you objected to computing them over shorter values.) Do you not see that in the applications, if you use the average ‘m’ computed this way, the reconstructed values for historic temperatures are wrong?

I”m not getting where you are missing the obvious, but you are.

Nick said

“Where did I say anything about using data from an experiment? All I’m saying is that you have five scaled thermometers. If you have one that you believe is more accurately scaled, you can calibrate the others to it.”

No you can’t! That kind of in-test recalibration is strictly forbidden in experiments. There’s no way to know in a test that the one you think is right is actually right. Instrumentation drift requires recalibration in a cal lab, NOT in test. You can identify what you think to be a bad instrument, but you cannot recalibrate it without going back to a refereed set of data.

Lucia, #98588

Yes, I don’t see it, and I may be missing something. The reason is that each m is computed individually, and I don’t see why high correlation should bias the estimate. It’s just a regular regression, and I believe the normal beta is an unbiased estimator.

What Roman said was that m is the product of ρ and the proxy sd, so it’s not just proportional. He did say that it’s the same if normalized. But there’s no reason for Gergis to normalize – she isn’t using CPS, as you (I think) and Roman are.

As I see it, the basic task is to get some kind of average of the proxies. You and Roman normalize and average – CPS. That is a weighted average, by inverse sd, and penalizes high sd. Furthermore you peek at the whole data period in deriving that. So a proxy that shows a big MWP, say, has a higher sd and gets downweighted.

Gergis (I think) converts each proxy to a temp base and then averages. That’s why she needs the individual m values, and can’t proceed without them. But then the basis for combining seems more rational, as it doesn’t then require weighting. And it doesn’t peek at pre-calibration data.

Slightly out of sequence, but I was reading McShane and Wyner, statisticians writing in Annals of Appl Stats, and I read (p 12):

“Alternatively, the number of proxies can be lowered through a threshold screening process [Mann et al. (2008)] whereby each proxy sequence is correlated with its closest local temperature series and only those proxies whose correlation exceeds a given threshold are retained for model building. This is a reasonable approach, but, for it to offer serious protection from overfitting the temperature sequence, it is necessary to detect “spurious correlations.—

My emphasis, of course. Spurious correlation is where they get into unit roots.

More reading of McS&W. Their Lasso method uses randomised block holdouts, rather like described here. I don’t think they screen on that basis, but their extensive discussion might be helpful.

Nick

No. I did not weight by inverse sd. I could– I have that bit coded in at the end of my script but the curves are not weigthed by sd. They are only screened. (Weighting by individual sd’s would make little difference. That’s not a problem.)

Roman is right. The normalization by s.d. isn’t important. It’s not the feature we are discussing.

You’ll still get a biased final result if your method is such that the errors in computing your conversion factor do not have a mean of zero. It’s just that the bias is introduced slightly differently.

By computing the calibrations in a different period from the screening you avoid the difficulty arising from the fact that screening ensures that the errors in computing the calibration factor are biased on the same direction.

Good gracious. The “but” clause is explaining that while it is reasonable to screen you have to be careful because the screening can result in picking out cases where some passed merely because of noise. That is: They are not going to explain that if you are not careful you bias the result.

It is amazing that you think this represents a rebuttal to all of us telling you that screening can bias the result!!

Those who want to download the paper can find it here

http://arxiv.org/abs/1104.4002v1

Read further and find

McShane and Wyner then go on to discuss the various ways climatescientists have tried to get around the problems introduced by screening and explain why they don’t work. And you think they are saying problems are not introduced by screening? Wow!

I mean….Here’s what they say about the validation and verification proceedure

Nick,

McS&W conclude that the true uncertainty is much wider than most published reconstruction methods have suggested, and that certain methods lead to substantial loss of variance in the reconstruction period.

.

Near the start of their paper they say something like: we base our analysis on the assumption that the paleo experts know what they are doing WRT proxies, since we have no way of knowing if that is in fact the case. Their analysis focuses on the statistical issues, not the underlying assumptions.

.

So they implicitly assume that proxy series like tree rings always carry a temperature signal of the same magnitude, both before and during the calibration period; the screened “good” trees were always good and the rejected “bad” trees were always bad. I think that assumption is not justified for multiple reasons, as we have already discussed more than once. IMO, the entire rational for data snooping based data selection is simply flawed. Use all the data, and you solve all the problems… including both the statistical issues like loss of variance and the need to make very questionable assumptions.

Lucia,

“while it is reasonable to screen you have to be careful “

They aren’t saying there’s anything wrong with screening. They are saying that you have to set the significance level in the light of unit root issues – ie an AR(n) correction isn’t good enough.

“it corrupts the model validation process:”

You’ve highlighted that, but it just means what it says – if you have used all the overlap for calibration, there isn’t any data to validate with. It doesn’t mean there is a bias. I’m not sure why they called it a subtle reason.

And

“Here’s what they say about the validation and verification proceedure “

Yes, again validation, not reconstruction. And they are just talking about specific choices for placement of validation periods. Again nothing to do with selection causing bias.

SteveF,

Yes, of course M&W are famously critical of error estimates. And others have criticised them.

My point here is just that they say they have no problem with selection by correlation cutoff.

Nick,

“My point here is just that they say they have no problem with selection by correlation cutoff.”

And my point is that M&W say up front that they do not know enough about proxies to know if the underlying assumptions the reconstructions are based on are justified. Their analysis examines only statistical issues, not the rational for the entire exercise.

Where do they say they have ‘no problem’ it? They discuss numerous problems with it. In the very quote you post the end with “but, for it to offer serious protection from overfitting the temperature sequence, it is necessary to detect “spurious correlations.—.

That is is a recognition the technique can result in selection of spurious correlations which you must detect. That’s at least one problem not “no problem”. And they discuss more problems.

As far as I can tell McShane and Wyner say:

1) Screening by correlation can introduce biases. (This is what my posts have been saying.)

2) If you do it wrong, those biases can result in proxies reconstructions that are biased. (This is what my posts have said.)

3) This means you have to carefully create a screening process to either avoid the bias (or account for it in your uncertainty analysis. (This is what my posts have said.)

4) Some specific methods that involve screening result in biases. (This is what I say– and I discuss on in particular.)

You seem to be wanting to rebut the claim that a particular method of screening (that happens to be used in climate sicence ( is biased by saying that some other method might not be.

And then, you seem to want to suggest that my showing a a particular method means I am claiming that all possible ways of screenign are biased (which I have certainly not claimed. But even worse you seem to be wanting to disprove the my demonstration that shows that when screening is used and applied using ‘method A’ you get a biased result but if you use ‘method B” you do not get a biased result represents my claiming that screening can never be used. Or that it is always biased! That is just amazing.

I’ve clearly shown here that the method I say is unbiased is… uhm…. unbiased. The title: “Screening: An Unbiased Method” clealry indicates I think that it’s possible to overcome the problem inherent in screenign and correlating over the same period. I am clearly not claiming that screening can never, ever, every be used.

Why you would think that McShane ans Wyner showing that screening can result in bias but also showing some methods either eliminat it or reduce the biase is any sort of “rebuttal” to the idea that screening by correlation and computing the calibration during the same period will give you incorrect proxy reconstructions!

Your comments are achieving iconic ‘idiot’ status. Seriously.

More evidence they don’t have “no problem”. Of their own reconstruction (‘model’) they write.

So they criticize features of their own model! To suggest they have “no problems” with these features is nuts.

SteveF:

Regardless, even given that Nick misinterpreted what they said and isn’t expected to understand the nuances of it anyway because he’s a numerical scientist with no particular experience with experiment, this would still be just an appeal to prior authority to counter a factual demonstration that a problem exists.

On a similar vein we can blow off Nick’s assertions such as:

We can start out with more knowledge than he currently possesses, Nick is simply talking out of his a**, and of course has never tested this assertion, in fact doesn’t have the slightest idea what he’s talking about as he’s read little of the literature relevant to the topic other than the Gerald North “winged report” and the M&W article that he only partly groks, but whatever … he would be very ready to excoriate anybody else were they to do the same.

mt posted an update on RomanM’s ClimateAudit thread showing his reconstructions (real and synthetic data).

For whomever—the major volcanic episodes are highly visible in these reconstructions. Whether that means they are tracking temperature is a separate issue, but the relevant point is there’s a signal, it’s climate related, and it is attenuated using the screened-by-correlation method.

Seriously Nick?

–

Ed’s equation did not include the error term above because he assumes the error to be 0. That is what “regular” regression theory assumes. That is the condition which must be true for “regular” regression to yield an unbiased estimator. Correct?

–

Here is Roman’s equation which includes the error term which ED assumes to be 0:

Ave(Bk) = B + Ave(Be,k )

–

So “Ave(Be,k)” must be 0 in order for Ave(Bk) to be unbiased and for this to be a “regular” regression. Correct? Here is what Roman has to say about the effect of correlation screening:

I don’t think I need to add anything to that. Nick, why don’t you explain to us (with regression math – not with arm waving references to kriging and M&W) why Roman and Lucia are wrong and why correlation screening gives us a “regular” regression where we can have an expectation of Ave(Be,k ) = 0

Or he can do the experiment himself, then admit he’s wrong. Which I might add would be a first for this thread.

Effect of correlation-based screening on mean slope.

Wrong is wrong and Nick is that on this.

Quick question. Are the proxies used in the Gergis paper available as a single collection somewhere?

Brandon, yep. Here:

http://www.climateaudit.info/data/gergis/gergis2012australasia.xls

Thanks Carrick! Now I just need to remember how I was reading xls files into R (I always hate I/O).

By the way, is anyone else thrown when they see proxy series like Palmyra have century-long gaps in their data?

Actually, unless I’m missing something, that file only contains the 27 proxies Gergis kept after screening. Carrick, don’t tell me you’re joining in on the teamspeak where something isn’t “used” in a paper if it’s screened out!

(I kid.)

Brandon, sorry it’s just the 27 that Gergis released. See her comments and Nick’s mentally challenge defense of her decision to only archive part of her data over on the climateaudit threads.

LL,

Yes, I think there is a lot that is, if not wrong, inappropriate in Roman’s math. Start with this:

“Suppose that we form the average of all the slope estimates.”

which is the lead-in to the B_k equation that you quoted.

But why would you form that average? Or, even more cogently, how would you form it. The proxies may well have different units, and then so would B. Gergis has a mix of ring width and δ18O.True, Roman did start with “Suppose that we have a homogeneous set of proxies”. Bit like a spherical cow.

I keep coming back to this issue that in the Gergis approach, the proxies are only aggregated after they have been converted to a temperature scale via the regression, using each individual B.

Roman says that “the slope is a multiple of that correlation”. Well, GMST is a multiple of GDP – the factor is (GMST/GDP). What you need to justify his statements is that it be a constant multiple. And it isn’t; it’s multiplied by the proxy standard deviation. That, among other things, turns a non-dimensional object into a dimensional one. Not something that can be just arm-waved away.

While I understand that Gergis did not feel that she had the right to publish certain datasets without others’ permission, I wonder about three things:

1) Why weren’t the rejected proxies even listed in the supplemental information? This would seem to be a minimal item of documentation.

2) Some (probably the majority) of the rejected proxies are already archived. Why didn’t Gergis include them in the spreadsheet archived with the paper? Just put them on a different page from those used in the PCR.

3) [and hypothetically…] What would have happened if one of the “private” datasets had passed her screening? It would now be an essential part of the reconstruction, no more quibbling about the meaning of “used”. Which would the authors consider more important – providing complete inputs to the PCR or protecting the data?

HaroldW (Comment #98665),

1 and 2 – Because that would give ammunition to the skeptics… which is verboden.

.

3 – She would have gotten the needed permissions.

Nick

How? Well, dealing with units is easy enough. If it’s simply understood that proxies have been scaled to all have the standard deviation over the instrument record:

scaled_proxy_i=proxy_i * sd_Temperture/sd_proxy_i

The scaled proxies now all have units “Temperature” and you can easily just average over them.

I didn’t divide by sd_proxy_i because I generated them to all have the same standard deviation and also have the same as the Temperature.

The fact is: the batch of ‘m’s are biased.

Lucia,

“The fact is: the batch of ‘m’s are biased.”

You keep going back to that, despite Nick’s best efforts at obfuscation.

Nick –

“Suppose that we have a homogeneous set of proxies. Bit like a spherical cow.” So perhaps you can enlighten us on why differences between proxies affect the argument that the slope obtained by screening is biased high. If I double (or halve) a proxy, the literal value of the slope (dProxy/dTemp) over the reference interval would change, but the reconstructed temperature would remain the same. This is true of any affine transformation of the proxy. Including multiplying by the standard deviation.

You’re correct *in general* that averaging proxies is combining apples and oranges; one needs to convert to common units (typically, inferred temperature anomaly). However, in Roman’s case it’s quite clear that the proxies really are all “apples” and averaging them is perfectly legitimate.

Lucia,

Again your response reflects consequences of the failure here to specify what the selection fallacy actually is. M&W describe very explicitly the Gergis step that you criticise and they say

“This is a reasonable approachâ€

Now if selection by observed correlation is itself improper, as has been loudly proclaimed, there is no way that statisticians would say that it is a reasonable approach.

But then you go on to list all their issues about validation etc as somehow related to the scereening fallacy. Sure, M&W have lots of issues about how proxy reconstruction is done and validated. But a generic problem with screening isn’t one of them.

Their caveat dealt with “spurious correlationâ€. They aren’t objecting to screeening on correlation in principle. They are just saying that you have to make sure that it really is correlation. If you have data without unit root issues, their caveat goes away.

Nick,

You persist in ignoring the clause after “but” in that sentence.

This is neither here nor there. It is biased.

Uhhh… and with respect to what your persistent attempts to insist I am wrong– are you once again claiming I am objecting “in principle? I’m not. I’m objecting to specific methods of implementing. That is: I’m objecting to certain practices that involve screening.

No Nick. That is not all they are saying in the full paper. It’s true in that particular sentence, they don’t manage to spit out all the possible ways things go wrong. I’m not sure they were trying to say that much in that sentence.

But it’s all rather unimportant because if they thought the biase introduced when screening and computing ‘m’ in the same period is just somehow ok and not to be mentioned, quantified, attended or ever criticized then they were wrong.

As far as I can tell, they never made such a ridiculous claim. You are trying to put it into their mouths in the deluded notion that if they said it, that would somehow magically turn it into the truth. It wouldn’t. If they said what you think they said, then they were wrong.

Nick,

“They aren’t objecting to screeening on correlation in principle.”

True, they are not, because (as they say right at the beginning of their article) they are not in a position to evaluate if the assumptions that underlay the whole enterprise are valid.

.

I am saying that the assumption that the specific selected trees behave the same in both the correlation/selection period and in much earlier periods is not justified, if only based on the well known ‘divergence problem’ of the late 20th century. As far as I can tell, Lucia has never said that, and she seems to have done all her analyses based on the assumption that the ‘selected’ trees have the same temperature signal long before the correlation/selection period as during the correlation/selection period.

“Nick Stokes

The proxies may well have different units, and then so would B. Gergis has a mix of ring width and δ18O.”

Bad, bad boy Nick. The δ18O Vostok data only goes back to 1775, they ignored the deuterium record of the same period, they ignored the Law Dome data which is much longer and more detailed.

Compare the Vostok deuterium record

http://cdiac.esd.ornl.gov/ftp/trends/temp/vostok/vostok.1999.temp.dat

with the truncated data used by Gergis.

Historical Isotopic Temperature Record from the Vostok Ice Core

Jouzel, J., C. Lorius, J.R. Petit, C. Genthon, N.I. Barkov, V.M. Kotlyakov, and V.M. Petrov. 1987. Vostok ice core: a continuous isotope temperature record over the last climatic cycle (160,000 years). Nature 329:403-8.

Re: Nick Stokes #98663

Nick are you suggesting that the Gergis regression method is not scale invariant?

Nick:

Of course his general statement has been verified using Monte Carlo analyses, so we know the general statement to be true.

Believe whatever you choose to “believe” to be true. That’s how reality works, right?

Es macht mir nichts aus.

HaroldW

“If I double (or halve) a proxy, the literal value of the slope (dProxy/dTemp) over the reference interval would change, but the reconstructed temperature would remain the same.”

That’s pretty much my point. The B values are factors that convert a proxy to temp. Once you’ve made the conversion, you can average the result. But what sense is there in averaging the factors?

It’s not a coincidence that Roman does it, though. It’s my initial Q that prompted his post – biased relative to what? That’s going to involve some notion of average.

Lucia says: “Well, dealing with units is easy enough. ” Yes, you can form a weighted average with dimensioned weights. That’s what is done in CPS, using sd. But there is an infinite variety of weighted averages you can dream up. Why is dividing by sd different to any other of the myriad of weightings? It isn’t even as if you think that the proxies are random variables. No-one talks about the sd of GMST as having any physical meaning.

My point above was that each B comes from an individual linear regression. For that, it’s an unbiased estimator, regardless of whether ρ is high or low.

Nick:”My point above was that each B comes from an individual linear regression. For that, it’s an unbiased estimator, regardless of whether Ï is high or low.”

Yes, each individual estimated B is unbiased. Some are high, some are low. So far we agree. When you throw out the low ones, though, the remaining ones are those which are high. The reconstruction uses the too-high ones. Which results in compressing the reconstruction (and regression to the mean in the reference interval).

.

When you use all the proxies, the reconstruction is unbiased.

LL,

“Nick are you suggesting that the Gergis regression method is not scale invariant?”

I believe it is scale invariant – though it is not particularly well described. If you form a B (or m) for each proxy by regression and then convert to T, that is an operation in each proxy’s units. The result is on a common scale and you can aggregate.

Effectively you use dP/dT in place of sd(P)/sd(T) in CPS. I think it is better justified, providing you have a significant estimate.

HaroldW

“When you throw out the low ones”

Again, you aren’t throwing out the low ones; you are throwing out the insignificant ones. There’s no reason to believe that high or low ρ biases the regression beta.

“When you use all the proxies, the reconstruction is unbiased.”

Back to my question – relative to what? And what is the meaning of “all”? What was special about that short list of 62?

Lucia

“are you once again claiming I am objecting “in principle? I’m not. I’m objecting to specific methods of implementing. That is: I’m objecting to certain practices that involve screening.”

So we need a statement of what the “sceening fallacy” is. If it’s only “specific methods of implementing” screening, what are they?

Nick Stokes (Comment #98676)

> Lucia, Again your response reflects consequences of the failure here to specify what the selection fallacy actually is.

Frowny-face emoticon:

🙁

Nick –

Gergis screens on the correlation coefficient estimated over the reference period. As has been shown several times, if you take a series with a moderate SNR, the correlation coefficient is strongly related to the slope. Selecting on correlation DOES select for the higher slopes. Yes, it also has the positive advantage of throwing out series which have no temperature dependence whatsoever. But it also throws out perfectly good series in which the “noise” happens to reduce the correlation coefficient (and the slope).

.

Let’s try an analogy. You’re interested in measuring the height of the average man. You set up a device which measures the height of passersby. However, it turns out that the device is being triggered by dogs, children, etc. — things you don’t want to include in your height estimate. [In case it escapes you, the dogs &c are analogous to the non-temperature-sensitive proxies.] You modify your device to trigger only when the object is above 6 feet tall, which quite satisfactorily eliminates false triggers. Your technique indicates that the average man is 6’2″ tall. Every measurement is correct. But the result is wrong.

Re: Nick Stokes #98657

If the Gergis regression method is scale invariant, then how does the timing of transformation to temperature somehow affect the expected value for Ave(Be,k)? You are claiming that Ave(Be, k) is unbiased (expected value of 0) and that lack of a common scale is the reason.

Nick

Once again, Nick is resorting to the “before you said apples were fruit. Now watermelons. And cherries too? Can you tell me what the screening fallacy is?!”

Look Nick: People have told you. Numerous times. Stop this.

Nick

A) You the ones with low ρ are the insignificant ones. You are trying to make a distinction with no difference. There is reason to believe this biases the regression data.

Relative to the the actual temperatures in the past– which is what you are trying to predict.

Of course the 62 my have been badly picked, but presumably they were picked based on some sort of belief they would contain a signal. That’s what’s special about them.

Lucia, here’s my take: Nick will continue to argue (in bad faith, IMO) against *anything* that may tarnish the reputation of the team and the “consensus” for as long as he can still type. I’m not sure it’s a valid use of your time to continue to engage, given the deliberate obtuseness and demonstrated dishonesty (“Will no one give me a definition!? I keep asking!”) on this issue.

Other than a lack of candor on motive, I don’t see a great deal of difference between Nick and [pick the best correlation of the 2 nearest grid cells 🙂 ] Karl Rove or James Carville. IMO, you’re being played by a talented spinner who has a lot of time, but absolutely no desire for the truth or moving the discussion forward. Just advancement of the cause.

Nick,

Above Lucia wrote “Stop this.”

That is a clear imperative statement. Maybe you should pay heed. As Jim Croce noted in song many years ago, it is unwise to pull on superman’s (or woman’s) cape.

TerryMN,

I have been trying (for a very long time) to give Nick the benefit of the doubt, but I must admit that these recent threads have convinced me that he really is not trying to advance understanding, only throw up diversions and obfuscations. I do not claim to understand his motivations, but I am pretty sure that engaging him serves very little purpose, since his focus seems to be redefining every issue to be a question of “how many angels are dancing on the head of a pin”, rather than the substantive facts being discussed. His tireless defense of data snooping methodology, which has been shown (by Lucia, Roman, and others) to clearly introduce bias, is impressive for its energy, but ultimately a waste of his time, and more importantly, everyone else’s time as well. I am done spending time on Nick.

Here nick,

go explain to mt

http://climateaudit.org/2012/06/17/screening-proxies-is-it-just-a-lot-of-noise/#comment-339012

Lucia,

“Of course the 62 my have been badly picked, but presumably they were picked based on some sort of belief they would contain a signal. That’s what’s special about them.”

Gergis included all the proxies within a lat/lon region that came from a listing in Neukom and Gergis 2011. This incidentally, for those curious, tells you which ones were rejected. The selection of those is described thus:

“In this study we assess SH climate proxy records of potential use in high-resolution climate reconstructions covering the last 2000 years. Each record must:

* extend prior to 1900

* be calendar dated or have at least 70 age estimates in the 20th century

* extend beyond 1970 to allow sufficient overlap with instrumental records

* be accessible through public data bases or upon request from the original authors”

Basically everything conveniently available. Not even temperature proxies – climate proxies. Some, like the callitris data, were part of a drought study.

There’s nothing special about that 62.

Steven,

mt is using Roman’s program which, like Lucia’s, is using CPS – ie standardizes on sd. And for that, yes, it is possible to get some bias for a lot of proxies with high S/N. I actually agree with Lucia that it depends on your method. Gergis method, which does not I think standardize on sd, would not be affected by that. Maybe something else.

But SteveF calls it data snooping, and there’s lots else where people say it’s “baby food statistics” wrong etc. That’s a very different proposition.

In fact, in CPS as done here, you divide by the sd for the whole period. That is truly looking at the actual data you are trying to predict (pre-cal), so bias is possible.

HaroldW,

“Selecting on correlation DOES select for the higher slopes.”

It would be possible to have a set of proxies where each had different units. You could still select by correlation coefficient. But are you selecting the larger slopes – what would that even mean with different units?

“Gergis method, which does not I think standardize on sd, would not be affected by that. Maybe something else.”

Well, when you show me the synthetic tests that Gergis did to insure that her method does not introduce a bias or reduce variance or narrow uncertainties, or when you can point to a peer reviewed article in a statistical journal that discusses her method, then your opinion of what her method may or may not do will be interesting. Until such time a cherry is fruit.

Steven

“when you can point to a peer reviewed article in a statistical journal that discusses her method”

I did. McShane and Wyner.

Which explains the method is poor.

NIck

Mine does not standardize on sd. Please see above where I told you that.

This is silly. It’s still going to be affected by the fact that the errors she uses in the individual ‘m’s are biased high. It’s not going to matter when she converts– they are biased high.

Nick Stokes (Comment #98706)

> I did. McShane and Wyner.

Another frowny-face emoticon:

🙁

“McShane and Wyner” isn’t a shaman’s totem, it’s a 44-page article. In claiming a long article as support, it’s usual to provide a link, and quote the relevant passage.

I can’t do the latter, but, curiously, right off the bat, M&W offers help to Nick in grappling with the screening fallacy. Final sentence of the Abstract:

M&W’s implication is that the much wider standard error range produced by their model is appropriate, i.e. correct. Unsurprisingly, these authors devote attention to this point in the body of their paper. In turn, this implies that the tight uncertainty bands generated by RealClimate-approved reconstructions, MBH98 through Gergis12, are inappropriate, i.e. wrong.

The past few Blackboard threads are strewn with comments that address this concept. Here is an early one.

Upthread in #98962, HaroldW returned, again, to this notion of unacknowledged bias.

I’d restate the final sentence: the result isn’t what’s wrong as much as the associated error measurement — implied or explicit — is fallacious. The challenge for HaroldW’s estimator is to collect the key data, then analyze them so as to calculate a correct measure of uncertainty (e.g. standard deviation). In the example given, that might be

“Height of the average man is 6’2″ (with the area under the skewed curve equaling 68.3% being bounded by 2’6″ and 6’6″).”

For almost all purposes, this extremely broad estimate would also be a useless estimate.

Team boosters seem to view the requirement to correctly determine uncertainty intervals in the same fashion that Victorians looked at uncovered female legs. Never missing an opportunity to avert their gaze.

Amac,

Sorry, I should have linked to the comment above. It produced some responses. My contention is that M&W say that it is a reasonable method, given some care with spurious correlation (unit roots etc). I don’t think they would have described a cherry picking method that way.

Lucia,

Mine does not standardize on sd.

Well, the sd is set to 1 in your case.

“”Team boosters seem to view the requirement to correctly determine uncertainty intervals in the same fashion that Victorians looked at uncovered female legs. Never missing an opportunity to avert their gaze.””

Thanks for the classic AMac. But, I do think you need to include Nick in your grouping. Though lately his foil has been a bit blunt lately, at least he provides us some humour.

This concern of CI’s has been going on as far back as I can remember. That was one of the items, or lack there of that got me started on studying reconstructions per attribution in AR4. Though this is all fine and good, the real paydirt is to now take what you can demonstrate here, and show how it crushes attribution in Chapter 9, AR4. They really should have taken Mosher advice about just models. Wait, they couldn’t do that because they had to admit modelling was a circular argument. Though perhaps they should be given credit for recognizing the circularity, and the weakness of the absence of proof is proof of absence argument.

Nick

Actually… no….. The equation used to generate the proxies has a sd=1 in the limit that a proxy has an infinite number of points. But the sample sd over the period of the reconstruction isn’t 1. I have a distribution of sds, and I could show them.

I do have a section at the end of my code that standardizes based on sd. The results are noisier– but not much noisier. But anyway, they are diffierent.

Nick – “But are you selecting the larger slopes – what would that even mean with different units?”

Now you’re just being obtuse. It has nothing to do with units. Whatever the units of a given proxy, higher correlation coefficients are associated with higher slopes.

.

Nick, it’s relatively simple to make a synthetic proxy with an SNR typical of the Gergis proxies. White noise, [reasonable] red noise, it’s not going to matter. Simulate the proxy over 70 years. Compute the correlation coefficient & slope estimate. Run a Monte Carlo — calculate the expected value of the slope estimate conditioned on the correlation coefficient being above a threshold (say 0.2 or so), and the unconditioned value.

.

Several other people have run code, produced graphs. Yet after a week, you, who have equal facility with code, haven’t chosen to show any contrary quantitative arguments, instead resorting to silly verbal arguments such as “selection is done on correlation, not slope”. You’re too smart not to have absorbed the points of the demonstrations by Roman, Lucia, mt. At this point, you’re just playing some sort of game. I don’t know what game, but you know what? You win.

Nick Stokes (Comment #98711)

Thanks for the link.

> My contention is that M&W say that it is a reasonable method.

By all means, let’s nominate M&W as authority figures for this discussion. M&W10 isn’t just a two-sentence paragraph with the phrase “reasonable approach” applied to “[lowering] the number of proxies… through a threshold screening process [Mann et al. (2008)],” with the caveat that “it is necessary to detect ‘spurious correlations.’â€

For instance, two paragraphs down:

“As can be seen in Figures 5 and 6, both the instrumental temperature record as well as many of the proxy sequences are not appropriately modeled by low order stationary autoregressive processes. The dependence structure in the data is clearly complex and quite evident from the graphs.”

Also refer to my quote of M&W10 upthread at #98710. Or re-read the Abstract.

Victorian-style, you are averting your gaze from the very awkward statistical forest sketched by M&W, focusing instead on a lonely but comforting tree.

Nick

This overlooks the issue of bias. That’s true no matter how many quotes you try to get from people. The bias is demonstrable.

AMac –

I accept your well-stated correction to my analogy above. But following the practice of Gergis et al. 2012, the CI will instead be estimated by averaging various subsets of the screened measurements, and will conclude that average height is 6’2″ +/- 1″ (2SE). Also, the graph that will be published in the IPHC (Intergovernmental Panel on Height Change) report will show a dot at 6’2″ and no error bars. 😉

Amac,

It would really help if you (and John) could give some attention to the topic of debate here. It isn’t whether the CI’s are too large, or whether M&W have general gripes about proxy reconstruction. Or even about whther the noise is well modelled by stationary autoregeressive processes. It’s about whether selecting a subset of proxies by correlation is a reasonable thing to do. I cited M&W who addressed that specific point, explicitly, and said yes.

HaroldW,

“Now you’re just being obtuse. It has nothing to do with units.”

Suppose I have 3 proxies:

1. B=3 W/K, ρ=.1

2. B=2 kg/K, ρ=.2

3. B=4 m/K, ρ=.3

So I choose those with Ï>0.15. Am I selecting the ones with larger slope B? What would that mean?

Nick Stokes (Comment #98719) —

Frowny face. 🙁

Your implicit definition of the screening fallacy is too constricted. Per HaroldW supra, you also aren’t paying attention the toy problems contributed by Lucia, RomanM, and mt, exploring whether issues with selection exist in the real world of published paleotemperature reconstructions. They do.

It’s an unproductive avenue for you to argue against this notion: “selecting a subset of proxies by correlation is always and necessarily an unreasonable thing to do“. That’s a straw man. For instance, In their back and forth, Lucia, SteveF, and Carrick have agreed that selection by correlation could produce an unbiased estimate of paleotemp — under the right circumstances.

When do these favorable circumstances obtain? How would scientists and readers know that these underlying assumptions are valid for, e.g. Mann08 or Gergis12? A line picked from M&W10 does not answer these questions. A quote from an authority doesn’t trump an experiment.

But you know all this. It’s well-covered ground.

I’ll be offline for a few days, but interested to see how the discussion progresses from here.

Nick, your stubborness simply entices mockery. On this and other threads we have discussed the possible effects. Your continued effort to mis-direct attention from the fact that this bias can occur and that it was the authors’ duty(ies) to develop unbiased methodology and develop reasonably accurate CI’s is pathetic. It is pathetic, because you argue semantics, and yet not a single graph or mathematical construct has been forth coming to invalidate Lucia’s and others work. In fact, I am excited by what they have done here and think it should be developed and published. As far as M&W go, you have once again gone to ridiculous lengths to avoid, what they did actually write, and essentially try to make what they wrote different from what they actually wrote. Your claim about it not being CI’s is another bit of distraction. Lucia has shown that one of the effects by incorrectly biasing, if you wanted to account for it, could be done with CI’s but why do it when such would show you that you could use a better method! Yes, and M&W explicitly pointed out that it could contain errors, and it has been shown here at the Blackboard, that it does cause errors. Having known errors as being reasonable is just stupid. It has less explanatory power. Science is supposed to about increasing the explanatory power of a method. You seem to think science should go backwards.

HaroldW,

“Yet after a week, you, who have equal facility with code, haven’t chosen to show any contrary quantitative arguments”

That’s not really true. I ran Roman’s code and showed that his bias was no more or less than you would get with random selection of the same magnitude in his pseudo-proxy example.

I have actually been tinkering. I think I can account quantitatively for the bias shown in Roman’s white noise examples. A little more work needed there. I’m trying a non-CPS method which may be equivalent to Gergis’ PCA usage.

I’m doing this with a suspicion that CPS may be part of the problem. And it really shouldn’t be used. CPS is one way of tackling overfitting; selection by correlation (as mentioned by M&W) is another. It doesn’t really make sense to use both.

Was screening a paleo dataset on the MWP and LIA a dumb idea or did Nick hijack the thread?

Nick: